- First, we present an end-to-end script using the Universal Scraper API.

- Then, we demonstrate how to transition to the dedicated Scraper APIs for improved accuracy and ease of use.

Initial Method via the Universal Scraper API

With the Universal Scraper API, you’d typically extract product URLs from a product listing page, visit each product page through its URL, and save the individual product data to a CSV file.
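As a minimal sketch of the request step, the snippet below builds a Universal Scraper API call with Python's standard library. The `apikey` and `url` query parameters identify your account and the page to fetch; enabling `js_render` and `premium_proxy` is an assumption about what an Amazon page needs, and `YOUR_ZENROWS_API_KEY`, `build_request_url`, and `fetch_html` are illustrative names rather than part of the API.

```python
import urllib.parse
import urllib.request

API_ENDPOINT = "https://api.zenrows.com/v1/"
API_KEY = "YOUR_ZENROWS_API_KEY"  # placeholder: replace with your own key


def build_request_url(target_url, api_key=API_KEY):
    """Return the Universal Scraper API URL that fetches target_url."""
    params = {
        "apikey": api_key,
        "url": target_url,
        "js_render": "true",      # assumption: render JavaScript for Amazon pages
        "premium_proxy": "true",  # assumption: route through premium proxies
    }
    return API_ENDPOINT + "?" + urllib.parse.urlencode(params)


def fetch_html(target_url, api_key=API_KEY, timeout=60):
    """Download the HTML of target_url through the API (performs a network call)."""
    request_url = build_request_url(target_url, api_key)
    with urllib.request.urlopen(request_url, timeout=timeout) as response:
        return response.read().decode("utf-8", errors="replace")
```

Calling `fetch_html("https://www.amazon.com/s?k=wireless+mouse")` would return the listing page's HTML, which the next step parses.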

Parsing Logic and Extracting Product Data

Once the HTML content is obtained, the next step is to parse the webpage and extract the product information you need: title, price, link, and availability.

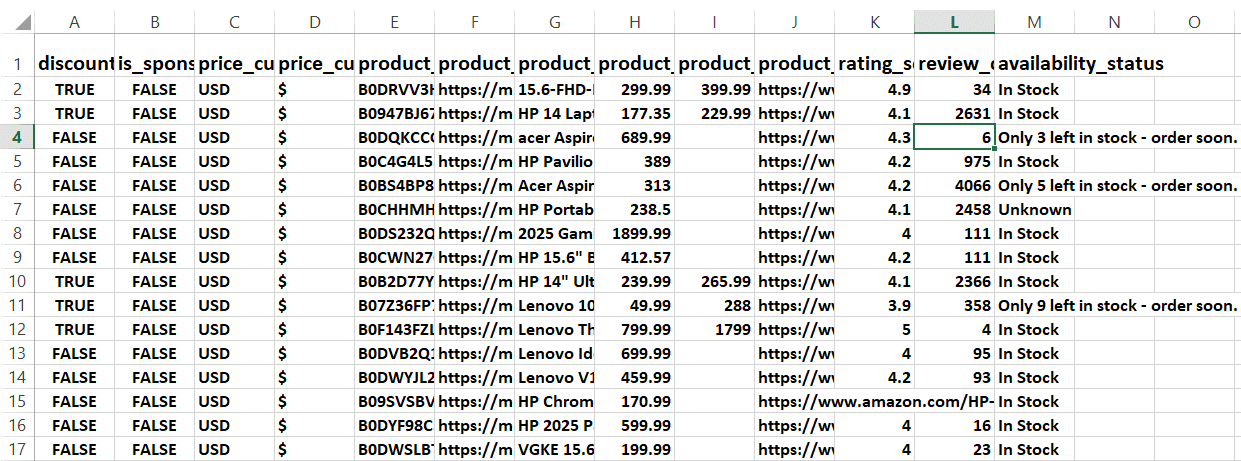
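The parsing step can be sketched with Python's built-in `html.parser`, so no extra dependency is needed. The element ids `productTitle` and `availability` and the `a-offscreen` price class are assumptions about Amazon's markup, which changes often, so check them against the live page before relying on them. `ProductParser` and `parse_product` are hypothetical names.

```python
from html.parser import HTMLParser

# Assumed selectors; verify them against the current Amazon page markup.
FIELD_IDS = {"productTitle": "title", "availability": "availability"}
PRICE_CLASS = "a-offscreen"
VOID_TAGS = {"br", "img", "input", "hr", "meta", "link"}


class ProductParser(HTMLParser):
    """Collects the text of the first element matching each assumed selector."""

    def __init__(self):
        super().__init__()
        self.data = {}
        self._field = None  # field currently being captured
        self._depth = 0     # nesting depth inside the captured element
        self._chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return  # void tags never get a closing tag, so skip them
        if self._field:
            self._depth += 1
            return
        attrs = dict(attrs)
        field = FIELD_IDS.get(attrs.get("id"))
        if field is None and PRICE_CLASS in (attrs.get("class") or "").split():
            field = "price"
        if field and field not in self.data:
            self._field, self._depth, self._chunks = field, 1, []

    def handle_endtag(self, tag):
        if tag in VOID_TAGS or not self._field:
            return
        self._depth -= 1
        if self._depth == 0:
            # Collapse runs of whitespace into single spaces.
            self.data[self._field] = " ".join("".join(self._chunks).split())
            self._field = None

    def handle_data(self, data):
        if self._field:
            self._chunks.append(data)


def parse_product(html, link):
    """Return title, price, link, and availability; missing fields are None."""
    parser = ProductParser()
    parser.feed(html)
    return {
        "title": parser.data.get("title"),
        "price": parser.data.get("price"),
        "link": link,
        "availability": parser.data.get("availability"),
    }
```

Returning `None` for a missing field keeps one broken selector from discarding the rest of the record.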

Store the data in a CSV file

Once all the data is collected and structured, a small function saves it to a CSV file.
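A minimal sketch of such a function, assuming each product is a dictionary with the four fields named earlier (title, price, link, availability). The function name and the default filename are illustrative.

```python
import csv

FIELDNAMES = ["title", "price", "link", "availability"]


def save_to_csv(products, filename="products.csv"):
    """Write a list of product dictionaries to a CSV file with a header row."""
    with open(filename, "w", newline="", encoding="utf-8") as csv_file:
        # extrasaction="ignore" drops keys outside FIELDNAMES;
        # missing keys are written as empty cells.
        writer = csv.DictWriter(csv_file, fieldnames=FIELDNAMES, extrasaction="ignore")
        writer.writeheader()
        writer.writerows(products)
```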

Here’s Everything Together

Here’s the complete Python script combining all the logic we explained above.
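A condensed sketch of how the steps chain together is shown here. To stay self-contained, it takes the fetching, link-extraction, and parsing steps as function arguments; `scrape_listing` and its parameter names are hypothetical, and skipping failed pages is one possible design choice rather than a requirement.

```python
def scrape_listing(listing_url, fetch_html, extract_links, parse_product):
    """Fetch a listing page, visit every product link, and return parsed products.

    fetch_html(url) -> str, extract_links(html) -> iterable of URLs, and
    parse_product(html, link) -> dict are supplied by the caller.
    """
    listing_html = fetch_html(listing_url)
    products = []
    for link in extract_links(listing_html):
        try:
            products.append(parse_product(fetch_html(link), link))
        except Exception as error:
            # One failing page should not abort the whole run.
            print(f"Skipping {link}: {error}")
    return products
```

The returned list can then be passed to whatever function writes the CSV file.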

Transition to the Amazon Scraper API

The Amazon Scraper API offers a more streamlined experience than the Universal Scraper API. It eliminates the parsing stage and returns ready-to-use JSON data. You can extract product data from Amazon using the following Scraper APIs:

- Amazon Product Discovery API: Retrieves a list of products based on a search term. Here’s the endpoint:

https://ecommerce.api.zenrows.com/v1/targets/amazon/discovery/<search_term>

- Product Information API: Fetches detailed information from individual product pages. See the endpoint below:

https://ecommerce.api.zenrows.com/v1/targets/amazon/products/<ASIN>
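The two endpoints can be assembled with small helpers like the ones sketched below. Passing the key as an `apikey` query parameter is an assumption carried over from the Universal Scraper API, and the helper names are illustrative.

```python
from urllib.parse import quote, urlencode

BASE_URL = "https://ecommerce.api.zenrows.com/v1/targets/amazon"


def discovery_url(search_term, api_key):
    """Build the Product Discovery endpoint URL for a search term."""
    # quote() percent-encodes spaces and other unsafe characters in the path.
    path = f"{BASE_URL}/discovery/{quote(search_term, safe='')}"
    return f"{path}?{urlencode({'apikey': api_key})}"  # assumption: apikey query parameter


def product_url(asin, api_key):
    """Build the Product Information endpoint URL for an ASIN."""
    path = f"{BASE_URL}/products/{quote(asin, safe='')}"
    return f"{path}?{urlencode({'apikey': api_key})}"
```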

Combining the two endpoints, the workflow looks like this:

- Retrieve Amazon search results using the Product Discovery endpoint.

- Extract each product’s ASIN from the JSON response.

- Use the ASIN to fetch product details (e.g., Availability) from the Product Information endpoint.

- Update the initial search results with each product’s availability data obtained from the Product Information endpoint.
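The ASIN extraction and merge steps above can be sketched as follows. The JSON field names `asin`, `product_url`, and `availability_status` are assumptions about the response shape, so inspect an actual response before using them. `fetch_details` stands in for whatever function calls the Product Information endpoint and returns its parsed JSON.

```python
import re

# Amazon product URLs usually carry the 10-character ASIN after "/dp/".
ASIN_PATTERN = re.compile(r"/dp/([A-Z0-9]{10})")


def extract_asin(product):
    """Return the ASIN from an 'asin' field or, failing that, from the product URL."""
    if product.get("asin"):  # assumed field name
        return product["asin"]
    match = ASIN_PATTERN.search(product.get("product_url") or "")  # assumed field name
    return match.group(1) if match else None


def add_availability(search_results, fetch_details):
    """Return copies of the search results enriched with availability data.

    fetch_details(asin) -> dict is supplied by the caller.
    """
    enriched = []
    for product in search_results:
        asin = extract_asin(product)
        details = fetch_details(asin) if asin else {}
        enriched.append({
            **product,
            "asin": asin,
            "availability": details.get("availability_status"),  # assumed field name
        })
    return enriched
```

Products without a recognizable ASIN are kept with an empty availability value, so the output has one row per search result.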

Store the data

You can store the combined data in a CSV file or a dedicated database. This guide writes each product’s details to a new CSV row and saves the file as products.csv.
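A minimal sketch of this step, assuming the combined data is a list of dictionaries. Because the merged records may not all share the same keys, the header is built from every key seen; the function name is illustrative.

```python
import csv


def write_products_csv(products, filename="products.csv"):
    """Write one CSV row per product, using the union of all keys as the header."""
    fieldnames = []
    for product in products:
        for key in product:
            if key not in fieldnames:
                fieldnames.append(key)  # keep first-seen order for stable columns

    with open(filename, "w", newline="", encoding="utf-8") as csv_file:
        writer = csv.DictWriter(csv_file, fieldnames=fieldnames, restval="")
        writer.writeheader()
        for product in products:
            writer.writerow(product)  # keys missing from a product become empty cells
```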