This guide takes you through the step-by-step migration process from the Universal Scraper API to the new dedicated Google Search Results API:

- We’ll first show an end-to-end code example of how you’ve been doing it with the Universal Scraper API.

- Next, we’ll show you how to upgrade to the new Google Search Results API.

This guide helps you transition to the Search API in just a few steps.

Previous Method via the Universal Scraper API

Previously, you handled requests to Google’s search results page with the Universal Scraper API. This approach involves:

- Manually parsing the search result page with an HTML parser like Beautiful Soup

- Exporting the data to a CSV file

- Dealing with fragile, complex selectors that change frequently, a common challenge when scraping Google

First, you’d request the HTML content of the search result page:

```python
# pip install requests
import requests


def get_html(url):
    apikey = "YOUR_ZENROWS_API_KEY"
    params = {
        "url": url,
        "apikey": apikey,
        "js_render": "true",  # render JavaScript before returning the HTML
        "premium_proxy": "true",  # route the request through premium proxies
        "wait": "3000",  # wait 3,000 ms for the page to finish loading
    }
    response = requests.get("https://api.zenrows.com/v1/", params=params)
    if response.status_code == 200:
        return response.text
    else:
        print(
            f"Request failed with status code {response.status_code}: {response.text}"
        )
        return None
```
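To see what get_html actually sends, the sketch below assembles the same request URL offline with urllib.parse.urlencode, the standard-library function that requests essentially relies on to encode a params dict. build_request_url is a hypothetical helper, and the exact string requests produces may differ in minor encoding details.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

ZENROWS_ENDPOINT = "https://api.zenrows.com/v1/"


def build_request_url(target_url, apikey="YOUR_ZENROWS_API_KEY"):
    """Build the Universal Scraper API request URL without sending it (hypothetical helper)."""
    params = {
        "url": target_url,
        "apikey": apikey,
        "js_render": "true",
        "premium_proxy": "true",
        "wait": "3000",
    }
    # urlencode percent-encodes the Google URL so it travels as a single parameter
    return ZENROWS_ENDPOINT + "?" + urlencode(params)


request_url = build_request_url("https://www.google.com/search?q=nintendo")
print(request_url)

# decoding the query string recovers the original target URL unchanged
decoded = parse_qs(urlsplit(request_url).query)
print(decoded["url"][0])  # → https://www.google.com/search?q=nintendo
```

Because the Google URL contains its own ?, =, and / characters, it must be percent-encoded; otherwise its q parameter would be read as a parameter of the ZenRows request itself.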

Parsing Logic and Search Result Data Extraction

The next step involves identifying and extracting titles, links, snippets, and displayed links from deeply nested elements. This is where things get tricky.

Google’s HTML structure is not only dense but also highly dynamic, which makes selector-based scraping brittle. Here’s an example:

```python
# pip install beautifulsoup4
from bs4 import BeautifulSoup


def scraper(url):
    html_content = get_html(url)
    if html_content is None:
        # the request failed, so there is nothing to parse
        return []
    soup = BeautifulSoup(html_content, "html.parser")
    # each organic result sits in a container with this (unstable) class name
    serps = soup.find_all("div", class_="N54PNb")
    results = []
    for content in serps:
        data = {
            "title": content.find("h3", class_="LC20lb").get_text(),
            "link": content.find("a")["href"],
            "displayed_link": content.find("cite").get_text(),
            "snippet": content.find(class_="VwiC3b").get_text(),
        }
        results.append(data)
    return results
```

The above selectors (e.g., N54PNb, LC20lb, VwiC3b) are not stable. Google can rename them at any time; when that happens, find() returns None, the chained get_text() call raises an error, and your scraper breaks overnight. Maintaining such selectors is only viable if you scrape Google daily and are ready to troubleshoot frequently.
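One way to catch a silent selector change early is to validate each scraped batch before saving it. The sketch below assumes a hypothetical defensive variant of scraper that stores None instead of raising when a selector matches nothing; check_results (also hypothetical) then reports which fields came back empty in most results. The field names match the dict built above, and the 50% threshold is an arbitrary illustration.

```python
EXPECTED_FIELDS = ("title", "link", "displayed_link", "snippet")


def check_results(results, max_missing_ratio=0.5):
    """Return the fields missing in more than max_missing_ratio of the results.

    An empty list counts as a full breakage, since a working container
    selector should match at least one result.
    """
    if not results:
        return list(EXPECTED_FIELDS)
    broken = []
    for field in EXPECTED_FIELDS:
        missing = sum(1 for record in results if not record.get(field))
        if missing / len(results) > max_missing_ratio:
            broken.append(field)
    return broken


# hypothetical output after Google renamed the snippet class
sample = [
    {"title": "Nintendo", "link": "https://www.nintendo.com/us/",
     "displayed_link": "https://www.nintendo.com", "snippet": None},
    {"title": "My Nintendo", "link": "https://my.nintendo.com/?lang=en-US",
     "displayed_link": "https://my.nintendo.com", "snippet": None},
]
print(check_results(sample))  # → ['snippet']
```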

Store the Data

The last step after scraping the result page is to store the data:

```python
import csv


def save_to_csv(data, filename="serp_results.csv"):
    with open(filename, mode="w", newline="", encoding="utf-8") as file:
        writer = csv.DictWriter(
            file,
            fieldnames=["title", "link", "displayed_link", "snippet"],
        )
        writer.writeheader()
        writer.writerows(data)


url = "https://www.google.com/search?q=nintendo"
data = scraper(url)
save_to_csv(data)
print("Data stored to serp_results.csv")
```
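To preview the file layout without touching the disk, the sketch below runs the same csv.DictWriter setup against an in-memory io.StringIO buffer. to_csv_string is a hypothetical helper, and the single row is illustrative data.

```python
import csv
import io

FIELDNAMES = ["title", "link", "displayed_link", "snippet"]


def to_csv_string(rows):
    """Serialize rows with the same DictWriter settings as save_to_csv."""
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=FIELDNAMES)
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


rows = [{
    "title": "My Nintendo",
    "link": "https://my.nintendo.com/?lang=en-US",
    "displayed_link": "https://my.nintendo.com",
    "snippet": "My Nintendo makes playing games and interacting...",
}]
print(to_csv_string(rows))
```

The first line is the header row; each dict becomes one row in column order. Values that contain a comma or a double quote are wrapped in double quotes automatically, so snippets with punctuation don't shift columns.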

Putting Everything Together

Combining all these steps gives the following complete code:

```python
# pip install requests beautifulsoup4
import csv

import requests
from bs4 import BeautifulSoup


def get_html(url):
    apikey = "YOUR_ZENROWS_API_KEY"
    params = {
        "url": url,
        "apikey": apikey,
        "js_render": "true",
        "premium_proxy": "true",
        "wait": "3000",
    }
    response = requests.get("https://api.zenrows.com/v1/", params=params)
    if response.status_code == 200:
        return response.text
    else:
        print(
            f"Request failed with status code {response.status_code}: {response.text}"
        )
        return None


def scraper(url):
    html_content = get_html(url)
    if html_content is None:
        return []
    soup = BeautifulSoup(html_content, "html.parser")
    serps = soup.find_all("div", class_="N54PNb")
    results = []
    for content in serps:
        data = {
            "title": content.find("h3", class_="LC20lb").get_text(),
            "link": content.find("a")["href"],
            "displayed_link": content.find("cite").get_text(),
            "snippet": content.find(class_="VwiC3b").get_text(),
        }
        results.append(data)
    return results


def save_to_csv(data, filename="serp_results.csv"):
    with open(filename, mode="w", newline="", encoding="utf-8") as file:
        writer = csv.DictWriter(
            file,
            fieldnames=["title", "link", "displayed_link", "snippet"],
        )
        writer.writeheader()
        writer.writerows(data)


url = "https://www.google.com/search?q=nintendo"
data = scraper(url)
save_to_csv(data)
print("Data stored to serp_results.csv")
```

Transition to the Google Search Results API

The Google Search Results API automatically handles the parsing stage, providing the data you need out of the box, with no selector maintenance required.

To use the Search API, you only need its endpoint and a query term. The API then returns JSON data containing the query results, including pagination details such as the URL of the next search result page.

See the Google Search Results API endpoint below:

https://serp.api.zenrows.com/v1/targets/google/search/
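The query goes into the URL path, so it has to be percent-encoded first. This stdlib-only sketch mirrors the urllib.parse.quote call used in the code below; build_search_url is a hypothetical helper name.

```python
from urllib.parse import quote

SERP_ENDPOINT = "https://serp.api.zenrows.com/v1/targets/google/search/"


def build_search_url(query):
    """Append the percent-encoded query to the endpoint as its last path segment."""
    # quote() turns spaces into %20 and escapes reserved characters such as "+"
    return SERP_ENDPOINT + quote(query)


print(build_search_url("Nintendo"))
print(build_search_url("nintendo switch"))
print(build_search_url("c++ tutorial"))
```

Without encoding, a query such as "c++ tutorial" would put a raw space and plus signs into the path, which servers may misread.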

Using it takes three steps:

- Send a query (e.g., Nintendo) through the Google Search Results API.

- Retrieve the search results in JSON format.

- Extract the organic search results from the JSON data.

Here’s the code to achieve the above steps:

```python
# pip install requests
# (json and urllib.parse are part of the Python standard library)
import json
import urllib.parse

import requests

# define the search query and URL-encode it
query = "Nintendo"
encoded_query = urllib.parse.quote(query)

# set up the API endpoint: the encoded query is the last path segment
api_endpoint = f"https://serp.api.zenrows.com/v1/targets/google/search/{encoded_query}"


# function to get the JSON search result from the API
def scraper():
    apikey = "YOUR_ZENROWS_API_KEY"
    params = {
        "apikey": apikey,
    }
    response = requests.get(api_endpoint, params=params)
    # raise an exception on HTTP errors instead of parsing an error body
    response.raise_for_status()
    return response.json()


# get the organic search results from the JSON
serp_results = scraper().get("organic_results")
print(json.dumps(serp_results, indent=2))
```

The output looks similar to this (truncated):

```json
[
  {
    "displayed_link": "https://www.nintendo.com \u203a ...",
    "link": "https://www.nintendo.com/us/",
    "snippet": "Visit the official Nintendo site...",
    "title": "Nintendo - Official Site: Consoles, Games, News, and More"
  },
  {
    "displayed_link": "https://my.nintendo.com \u203a ...",
    "link": "https://my.nintendo.com/?lang=en-US",
    "snippet": "My Nintendo makes playing games and interacting...",
    "title": "My Nintendo"
  },
  ...
]
```
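Calling .get("organic_results") returns None if a response ever lacks that key, and iterating over None raises a TypeError. The sketch below parses a trimmed copy of the sample output offline and falls back to an empty list; organic_results is a hypothetical helper, and the data is illustrative.

```python
import json

# a trimmed copy of the sample response above (illustrative data only)
raw = """
{
  "organic_results": [
    {"displayed_link": "https://www.nintendo.com \\u203a ...",
     "link": "https://www.nintendo.com/us/",
     "snippet": "Visit the official Nintendo site...",
     "title": "Nintendo - Official Site: Consoles, Games, News, and More"},
    {"displayed_link": "https://my.nintendo.com \\u203a ...",
     "link": "https://my.nintendo.com/?lang=en-US",
     "snippet": "My Nintendo makes playing games and interacting...",
     "title": "My Nintendo"}
  ]
}
"""


def organic_results(payload):
    """Return the organic results list, or an empty list if the key is absent."""
    return payload.get("organic_results") or []


results = organic_results(json.loads(raw))
for position, item in enumerate(results, start=1):
    print(position, item["title"], item["link"])
```

json.loads also decodes the \u203a escape back into the › separator that Google shows in displayed links.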

Store the Data

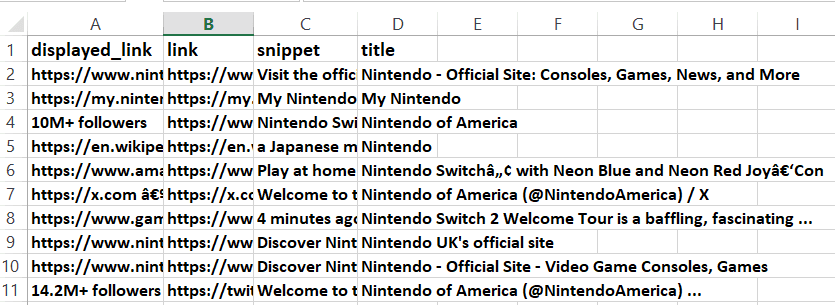

You can store the organic search results retrieved above in a CSV file or a local or remote database.

The following code exports the scraped data to a CSV file, writing each result to a new row under the matching columns:

```python
import csv

# save the data to CSV, using the keys of the first result as column names
csv_filename = "serp_results.csv"
with open(csv_filename, mode="w", newline="", encoding="utf-8") as file:
    writer = csv.DictWriter(file, fieldnames=serp_results[0].keys())
    writer.writeheader()
    writer.writerows(serp_results)
```
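The snippet above takes its column names from the first result only. If a later result carries a key the first one lacks, csv.DictWriter raises a ValueError (its default is extrasaction="raise"). A safer sketch collects the union of keys across all results first. The helper names and the extra "date" field below are hypothetical, not documented API fields.

```python
import csv
import io


def collect_fieldnames(rows):
    """Collect every key across all rows, in first-seen order."""
    fieldnames = {}
    for row in rows:
        for key in row:
            fieldnames.setdefault(key, None)
    return list(fieldnames)


def rows_to_csv(rows):
    buffer = io.StringIO(newline="")
    # restval fills columns a row does not have with an empty string
    writer = csv.DictWriter(buffer, fieldnames=collect_fieldnames(rows), restval="")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


# hypothetical rows: the second one carries an extra "date" key
rows = [
    {"title": "Nintendo", "link": "https://www.nintendo.com/us/"},
    {"title": "My Nintendo", "link": "https://my.nintendo.com/", "date": "2024"},
]
print(rows_to_csv(rows))
```

To write to a file instead, pass the open file object to csv.DictWriter in place of the buffer.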

Here’s the complete code:

```python
# pip install requests
# (csv and urllib.parse are part of the Python standard library)
import csv
import urllib.parse

import requests

# define the search query and URL-encode it
query = "Nintendo"
encoded_query = urllib.parse.quote(query)

# set up the API endpoint
api_endpoint = f"https://serp.api.zenrows.com/v1/targets/google/search/{encoded_query}"


# function to get the JSON search result from the API
def scraper():
    apikey = "YOUR_ZENROWS_API_KEY"
    params = {
        "apikey": apikey,
    }
    response = requests.get(api_endpoint, params=params)
    response.raise_for_status()
    return response.json()


# get the organic search results from the JSON
serp_results = scraper().get("organic_results")

# save the data to CSV
csv_filename = "serp_results_api.csv"
with open(csv_filename, mode="w", newline="", encoding="utf-8") as file:
    writer = csv.DictWriter(file, fieldnames=serp_results[0].keys())
    writer.writeheader()
    writer.writerows(serp_results)
```

Scaling Up Your Scraping

Once you have your first results, scaling up is straightforward: follow subsequent result pages by extracting the next page URL from the pagination field in the JSON response.

For example, the JSON response includes a pagination object like the one below, where next_page holds the URL of the next result page:

```json
{
  "...": "...",
  "pagination": {
    "next_page": "https://www.google.com/search?q=Nintendo&sca_...&start=10&sa..."
  }
}
```
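The sketch below reads next_page from the pagination object, extracts its q and start parameters with urllib.parse, and loops over pages through a fetch function you supply. How the next page should be requested from the API is an assumption here: fetch_page is a hypothetical placeholder (check the ZenRows documentation for the supported way to request a specific page), so the loop is exercised with a fake fetcher and canned pages that run offline.

```python
from urllib.parse import parse_qs, urlsplit


def next_page_url(payload):
    """Return the next_page URL from the pagination object, or None."""
    return (payload.get("pagination") or {}).get("next_page")


def page_params(google_url):
    """Extract the query (q) and result offset (start) from a Google search URL."""
    qs = parse_qs(urlsplit(google_url).query)
    return qs.get("q", [""])[0], int(qs.get("start", ["0"])[0])


def collect_results(first_payload, fetch_page, max_pages=3):
    """Gather organic results page by page, fetching at most max_pages pages.

    fetch_page is a hypothetical callable that takes a next_page URL and
    returns the parsed JSON for that page.
    """
    payload, results = first_payload, []
    for page in range(1, max_pages + 1):
        results.extend(payload.get("organic_results") or [])
        url = next_page_url(payload)
        if not url or page == max_pages:
            break
        payload = fetch_page(url)
    return results


# offline demo: canned pages standing in for real API responses
pages = {
    "https://www.google.com/search?q=Nintendo&start=10": {
        "organic_results": [{"title": "page 2 result"}],
        "pagination": {"next_page": "https://www.google.com/search?q=Nintendo&start=20"},
    },
    "https://www.google.com/search?q=Nintendo&start=20": {
        "organic_results": [{"title": "page 3 result"}],
    },
}
first = {
    "organic_results": [{"title": "page 1 result"}],
    "pagination": {"next_page": "https://www.google.com/search?q=Nintendo&start=10"},
}
print(page_params(first["pagination"]["next_page"]))  # → ('Nintendo', 10)
print([r["title"] for r in collect_results(first, lambda url: pages[url])])
```

The loop stops when a page has no next_page or when max_pages is reached, which keeps a runaway crawl from consuming API credits.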

Conclusion

By migrating to the Google Search Results API, you’ve eliminated the need to manage fragile selectors and parse complex HTML, making your scraping setup far more reliable and efficient.

Instead of reacting to frequent front-end changes, you now receive clean, structured JSON that saves time, reduces maintenance, and allows you to focus on building valuable tools like SEO dashboards, rank trackers, or competitor monitors. With ZenRows handling the heavy lifting, your team can stay productive and scale confidently.