Switching from the Universal Scraper API to dedicated Zillow Scraper APIs simplifies data extraction while reducing development overhead and ongoing maintenance. This guide provides a step-by-step migration path from the Universal Scraper API to specialized APIs that deliver clean, structured data with minimal code.

In this guide, you’ll learn:

- How to extract property data using the Universal Scraper API.

- How to transition to the dedicated Zillow Scraper APIs.

- The advantages of using Zillow Scraper APIs.

Initial Method via the Universal Scraper API

When extracting property data from Zillow with the Universal Scraper API, you send each property URL to the API and then manually parse the HTML it returns.

Retrieving Property Data

To extract data from a Zillow property page, configure the Universal Scraper API request parameters as follows:

```python
# pip install requests
import requests

url = 'https://www.zillow.com/homedetails/177-Benedict-Rd-Staten-Island-NY-10304/32294383_zpid/'
apikey = 'YOUR_ZENROWS_API_KEY'

params = {
    'url': url,
    'apikey': apikey,
    'js_render': 'true',  # Enable JavaScript rendering
    'premium_proxy': 'true',  # Use premium proxy feature
}

response = requests.get('https://api.zenrows.com/v1/', params=params)

# Check if the request was successful
if response.status_code == 200:
    print(response.text)  # Print the raw HTML content
else:
    print(f"Request failed with status code {response.status_code}: {response.text}")
```
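When you pass a `params` dictionary, `requests.get` encodes it into the query string, so the Zillow URL travels percent-encoded inside the ZenRows `url` parameter. The sketch below reproduces that encoding with the standard library's `urllib.parse.urlencode`, which lets you inspect the final request URL without spending API credits. It assumes requests encodes the parameters the same way, which matches its default behavior for plain string values. `build_request_url` is a hypothetical helper, not part of ZenRows or requests.

```python
from urllib.parse import urlencode

ZENROWS_ENDPOINT = "https://api.zenrows.com/v1/"


def build_request_url(target_url, apikey):
    """Return the full GET URL for a Universal Scraper API call (hypothetical helper)."""
    params = {
        "url": target_url,
        "apikey": apikey,
        "js_render": "true",  # Enable JavaScript rendering
        "premium_proxy": "true",  # Use premium proxy feature
    }
    # urlencode percent-encodes reserved characters such as ":" and "/"
    return ZENROWS_ENDPOINT + "?" + urlencode(params)


print(build_request_url(
    "https://www.zillow.com/homedetails/177-Benedict-Rd-Staten-Island-NY-10304/32294383_zpid/",
    "YOUR_ZENROWS_API_KEY",
))
```

Printing the URL like this is a quick way to confirm that the target page and flags are set correctly before you make a billable request.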

Parsing the Returned Data

Once the raw HTML is retrieved, you’ll need to parse the page using BeautifulSoup to extract relevant information.

```python
# pip install beautifulsoup4
from bs4 import BeautifulSoup


def parse_property_html(html, property_url):
    if not html:
        print("No HTML to parse")
        return None

    try:
        soup = BeautifulSoup(html, "html.parser")

        # Address
        address_tag = soup.select_one("div[class*='AddressWrapper']")
        address = address_tag.get_text(strip=True) if address_tag else "N/A"

        # Price
        price_tag = soup.select_one("span[data-testid='price']")
        price = price_tag.get_text(strip=True) if price_tag else "N/A"

        # Bedrooms, bathrooms, and square feet share one class and are read by position
        stats = soup.select("span[class*='StyledValueText']")
        bedrooms = stats[0].get_text(strip=True) if len(stats) > 0 else "N/A"
        bathrooms = stats[1].get_text(strip=True) if len(stats) > 1 else "N/A"
        square_feet = stats[2].get_text(strip=True) if len(stats) > 2 else "N/A"

        return {
            "url": property_url,
            "address": address,
            "price": price,
            "bedrooms": bedrooms,
            "bathrooms": bathrooms,
            "square_feet": square_feet,
        }
    except Exception as e:
        print(f"Error parsing HTML: {e}")
        return None
```

The CSS selectors in this example rely on partial class-name matches and on the order of elements on the page, so they can break without warning whenever Zillow changes its markup. Keeping the scraper functional requires constant monitoring and maintenance.
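A simple way to detect broken selectors is to check each parsed record for the "N/A" placeholder that the parser uses when a selector finds nothing. The sketch below is a minimal illustration. `find_missing_fields` and the sample record are hypothetical and not part of ZenRows or BeautifulSoup.

```python
def find_missing_fields(record, placeholder="N/A"):
    """Return the names of fields the parser could not fill (hypothetical helper)."""
    return [key for key, value in record.items() if value == placeholder]


# Hypothetical parsed record where the price and square-feet selectors stopped matching
record = {
    "address": "123 Example St",
    "price": "N/A",
    "bedrooms": "3",
    "bathrooms": "2",
    "square_feet": "N/A",
}

missing = find_missing_fields(record)
if missing:
    print(f"Warning: selectors may be broken, missing fields: {missing}")
# Warning: selectors may be broken, missing fields: ['price', 'square_feet']
```

Logging this warning on every run gives you an early signal that Zillow's markup has changed, before an entire CSV fills up with placeholders.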

Storing the Data in a CSV File

Once parsed, the data can be stored for later analysis:


```python
import csv

# ...

def save_to_csv(data, filename="zillow_property.csv"):
    if not data:
        print("No data to save")
        return

    try:
        # Save to CSV format
        with open(filename, mode="w", newline="", encoding="utf-8") as file:
            writer = csv.DictWriter(file, fieldnames=data.keys())
            writer.writeheader()
            writer.writerow(data)
        print(f"Data saved to {filename}")
    except Exception as e:
        print(f"Error saving data to CSV: {e}")
```
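`save_to_csv` opens the file in `"w"` mode, so each call overwrites the previous result. If you scrape several properties in a loop, the following minimal sketch appends rows instead and writes the header only when the file is new or empty. `append_to_csv` is a hypothetical helper. It assumes every record has the same keys in the same order, as the dictionaries returned by `parse_property_html` do.

```python
import csv
import os


def append_to_csv(data, filename="zillow_property.csv"):
    """Append one record, writing the header only for a new or empty file (hypothetical helper)."""
    if not data:
        print("No data to save")
        return

    # Write the header only if the file does not exist yet or has no content
    write_header = not os.path.exists(filename) or os.path.getsize(filename) == 0

    with open(filename, mode="a", newline="", encoding="utf-8") as file:
        writer = csv.DictWriter(file, fieldnames=list(data.keys()))
        if write_header:
            writer.writeheader()
        writer.writerow(data)
```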

Putting Everything Together

Here’s the complete Python script that fetches, processes, and stores Zillow property data using the Universal Scraper API:

```python
# pip install requests beautifulsoup4
import csv

import requests
from bs4 import BeautifulSoup

property_url = "https://www.zillow.com/homedetails/177-Benedict-Rd-Staten-Island-NY-10304/32294383_zpid/"
apikey = "YOUR_ZENROWS_API_KEY"


# Step 1: Retrieving Property Data
def get_property_data(property_url):
    params = {
        "url": property_url,
        "apikey": apikey,
        "js_render": "true",  # Enable JavaScript rendering
        "premium_proxy": "true",  # Use premium proxy feature
    }
    response = requests.get("https://api.zenrows.com/v1/", params=params)
    if response.status_code == 200:
        return response.text  # Return the raw HTML content
    else:
        print(f"Request failed with status code {response.status_code}: {response.text}")
        return None


# Step 2: Parsing the Returned Data
def parse_property_html(html, property_url):
    if not html:
        print("No HTML to parse")
        return None

    try:
        soup = BeautifulSoup(html, "html.parser")

        # Address
        address_tag = soup.select_one("div[class*='AddressWrapper']")
        address = address_tag.get_text(strip=True) if address_tag else "N/A"

        # Price
        price_tag = soup.select_one("span[data-testid='price']")
        price = price_tag.get_text(strip=True) if price_tag else "N/A"

        # Bedrooms, bathrooms, and square feet share one class and are read by position
        stats = soup.select("span[class*='StyledValueText']")
        bedrooms = stats[0].get_text(strip=True) if len(stats) > 0 else "N/A"
        bathrooms = stats[1].get_text(strip=True) if len(stats) > 1 else "N/A"
        square_feet = stats[2].get_text(strip=True) if len(stats) > 2 else "N/A"

        return {
            "url": property_url,
            "address": address,
            "price": price,
            "bedrooms": bedrooms,
            "bathrooms": bathrooms,
            "square_feet": square_feet,
        }
    except Exception as e:
        print(f"Error parsing HTML: {e}")
        return None


# Step 3: Storing the Data in a CSV File
def save_to_csv(data, filename="zillow_property.csv"):
    if not data:
        print("No data to save")
        return

    try:
        with open(filename, mode="w", newline="", encoding="utf-8") as file:
            writer = csv.DictWriter(file, fieldnames=data.keys())
            writer.writeheader()
            writer.writerow(data)
        print(f"Data saved to {filename}")
    except Exception as e:
        print(f"Error saving data to CSV: {e}")


# Everything Together: Full Workflow
html_response = get_property_data(property_url)  # Step 1: Fetch the raw property HTML via the API
parsed_data = parse_property_html(html_response, property_url)  # Step 2: Parse the raw HTML into a structured format
save_to_csv(parsed_data)  # Step 3: Save the structured data into a CSV file
```
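The script saves each value exactly as it appears on the page. For example, a price keeps its dollar sign and thousands separators. If you plan to analyze the CSV, you may want numeric columns instead. The following minimal sketch assumes values look like "$1,250,000" or "1,850". `to_number` is a hypothetical helper, and for text containing several numbers, such as a price range, it returns only the first.

```python
import re


def to_number(text):
    """Convert display text such as "$1,250,000" to a number; return None if no digits are found."""
    if not text or text == "N/A":
        return None
    # Match the first number, allowing thousands separators and an optional decimal part
    match = re.search(r"\d[\d,]*(?:\.\d+)?", text)
    if not match:
        return None
    value = float(match.group().replace(",", ""))
    return int(value) if value.is_integer() else value


print(to_number("$1,250,000"))  # 1250000
print(to_number("2.5"))  # 2.5
```

For example, you could set `parsed_data["price"] = to_number(parsed_data["price"])` before calling `save_to_csv`. Missing values become `None`, which `csv.DictWriter` writes as an empty cell.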

Transitioning to the Zillow Scraper APIs

The dedicated Zillow Scraper APIs provide structured, ready-to-use real estate data through two specialized endpoints: the Zillow Property Data API and the Zillow Discovery API. These APIs offer several advantages over the Universal Scraper API:

- No need to maintain selectors or parsing logic: The Zillow APIs return structured data, so you don’t need to use BeautifulSoup, XPath, or fragile CSS selectors.

- No need to maintain parameters: Unlike the Universal Scraper API, you don’t need to manage parameters such as `js_render`, `premium_proxy`, or others.

- Simplified integration: Purpose-built endpoints for Zillow data that require minimal code to implement.

- Reliable and accurate: Specialized extraction logic that consistently delivers property data.

- Fixed pricing for predictable scaling: Clear cost structure that makes budgeting for large-scale scraping easier.

Using the Zillow Property Data API

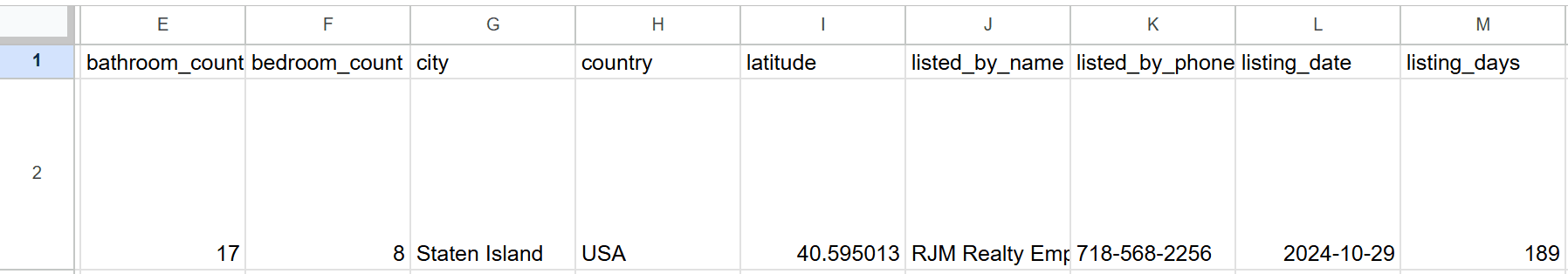

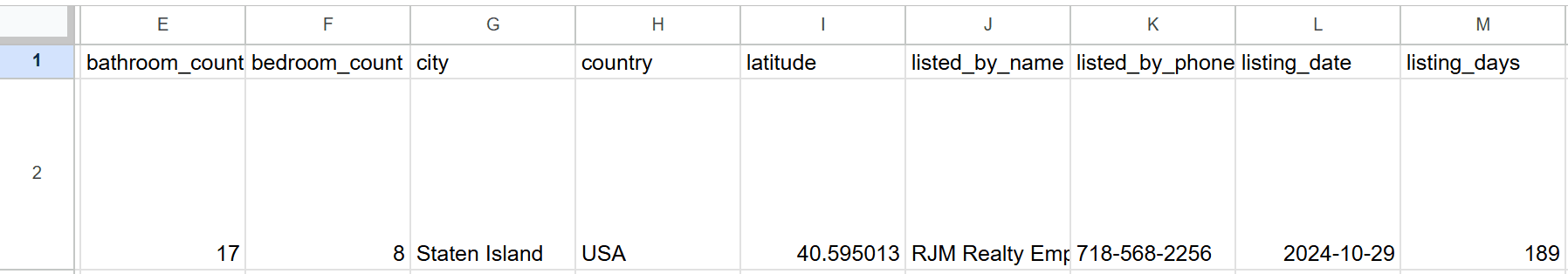

The Zillow Property Data API returns data points such as precise location coordinates, address, price, tax rates, property dimensions, and agent details, all in a standardized JSON format that’s immediately usable in your applications.

Here’s the updated code using the Zillow Property Data API:

```python
# pip install requests
import csv

import requests

# Example Zillow property ZPID
zpid = "32294383"
api_endpoint = "https://realestate.api.zenrows.com/v1/targets/zillow/properties/"


# Get the property data
def get_property_data(zpid):
    url = api_endpoint + zpid
    params = {
        "apikey": "YOUR_ZENROWS_API_KEY",
    }
    response = requests.get(url, params=params)
    if response.status_code == 200:
        return response.json()
    else:
        print(f"Request failed with status code {response.status_code}: {response.text}")
        return None


# Save the property data to CSV
def save_property_to_csv(property_data, filename="zillow_property.csv"):
    if not property_data:
        print("No data to save")
        return

    # The API returns clean, structured data that can be saved directly
    with open(filename, mode="w", newline="", encoding="utf-8") as file:
        # Use every field in the API response as a CSV column
        fieldnames = property_data.keys()
        writer = csv.DictWriter(file, fieldnames=fieldnames)
        writer.writeheader()
        writer.writerow(property_data)
    print(f"Property data saved to {filename}")


# Fetch the property data and save it to CSV
property_data = get_property_data(zpid)
save_property_to_csv(property_data)
```
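If your existing Universal Scraper pipeline stores Zillow property URLs, you don’t need to look up ZPIDs by hand. In this guide’s example, the ZPID `32294383` is the number right before `_zpid` in the property URL. The following minimal sketch extracts it, assuming all your URLs follow the same `/homedetails/..._zpid/` pattern. `extract_zpid` is a hypothetical helper.

```python
import re


def extract_zpid(property_url):
    """Return the numeric ZPID from a Zillow property URL, or None if the URL has none."""
    match = re.search(r"/(\d+)_zpid", property_url)
    return match.group(1) if match else None


zpid = extract_zpid(
    "https://www.zillow.com/homedetails/177-Benedict-Rd-Staten-Island-NY-10304/32294383_zpid/"
)
print(zpid)  # 32294383
```

You can then pass the result straight to `get_property_data(zpid)`. Skip or log any URLs that return `None`.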

Congratulations! 🎉 You’ve successfully upgraded to using an API that delivers clean, structured property data ready for immediate use.

Now, let’s explore how the Zillow Discovery API can help you search for properties and retrieve multiple listings with similar ease.


Using the Zillow Discovery API

The Zillow Discovery API enables property searches whose results include essential details such as property addresses, prices with currency symbols, bedroom and bathroom counts, listing status, property types, and direct links to property pages.

The API also handles pagination, making it easy to navigate through multiple pages of results.

```python
# pip install requests
import csv

import requests

# Search properties by URL
url = 'https://www.zillow.com/new-york-ny/'
params = {
    'apikey': "YOUR_ZENROWS_API_KEY",
    'url': url,
}

response = requests.get('https://realestate.api.zenrows.com/v1/targets/zillow/discovery/', params=params)

if response.status_code == 200:
    data = response.json()
    properties = data.get("property_list", [])
    if properties:
        with open("zillow_search_results.csv", mode="w", newline="", encoding="utf-8") as file:
            writer = csv.DictWriter(file, fieldnames=properties[0].keys())
            writer.writeheader()
            writer.writerows(properties)
        print(f"{len(properties)} properties saved to zillow_search_results.csv")
    else:
        print("No properties found in search results")
else:
    print(f"Request failed with status code {response.status_code}: {response.text}")
```
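Both API examples take their CSV columns from the keys of a single record (`property_data.keys()` or `properties[0].keys()`). This works when every record is flat and has the same keys. If a later search result contains a key the first one lacks, however, `csv.DictWriter` raises a `ValueError`, and any nested object ends up as a Python dict string in a single cell. The full response schema isn’t shown in this guide, so the following is a minimal sketch that assumes records may contain nested dictionaries and varying keys. `flatten` and `write_rows_csv` are hypothetical helpers.

```python
import csv


def flatten(record, parent_key="", sep="."):
    """Flatten nested dicts into dotted keys; other values (including lists) are kept as-is."""
    items = {}
    for key, value in record.items():
        new_key = f"{parent_key}{sep}{key}" if parent_key else str(key)
        if isinstance(value, dict):
            items.update(flatten(value, new_key, sep))
        else:
            items[new_key] = value
    return items


def write_rows_csv(rows, filename):
    """Write records with possibly different keys, using the union of all keys as the header."""
    flat_rows = [flatten(row) for row in rows]
    # dict.fromkeys keeps first-seen order while removing duplicate keys
    fieldnames = list(dict.fromkeys(key for row in flat_rows for key in row))
    with open(filename, mode="w", newline="", encoding="utf-8") as file:
        # restval fills columns that a given row does not have
        writer = csv.DictWriter(file, fieldnames=fieldnames, restval="")
        writer.writeheader()
        writer.writerows(flat_rows)
    return fieldnames
```

For the search example, you could replace the `with open(...)` block with `write_rows_csv(properties, "zillow_search_results.csv")`. Lists are still written as their string representation, so you may want to handle any list fields separately.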

Conclusion

The benefits of migrating from the Universal Scraper API to the dedicated Zillow Scraper APIs extend beyond simplified code. The dedicated APIs are maintenance-free because ZenRows handles all Zillow website changes. They also deliver more reliable performance with consistent response times and provide specialized data fields that aren’t available through the Universal Scraper API, all without requiring you to maintain request parameters.

By following this guide, you have successfully upgraded to using APIs that deliver clean, structured property data ready for immediate use, allowing you to build scalable real estate data applications without worrying about the complexities of web scraping or HTML parsing.