How to Use ZenRows with Node.js

Before starting, ensure you have Node.js 18+ installed on your machine. Using an IDE like Visual Studio Code or IntelliJ IDEA will also enhance your coding experience. We’ll create a Node.js script named scraper.js inside a /scraper directory. If you need help setting up your environment, check out our Node.js scraping guide for detailed instructions on preparing everything.

Install Node.js’s Axios Library

To interact with the ZenRows API in a Node.js environment, you can use the popular HTTP client library, Axios. Axios simplifies making HTTP requests and handling responses, making it an ideal choice for integrating with web services such as ZenRows. To install axios in your Node.js project, run the following command in your terminal (npm install axios if you use npm):

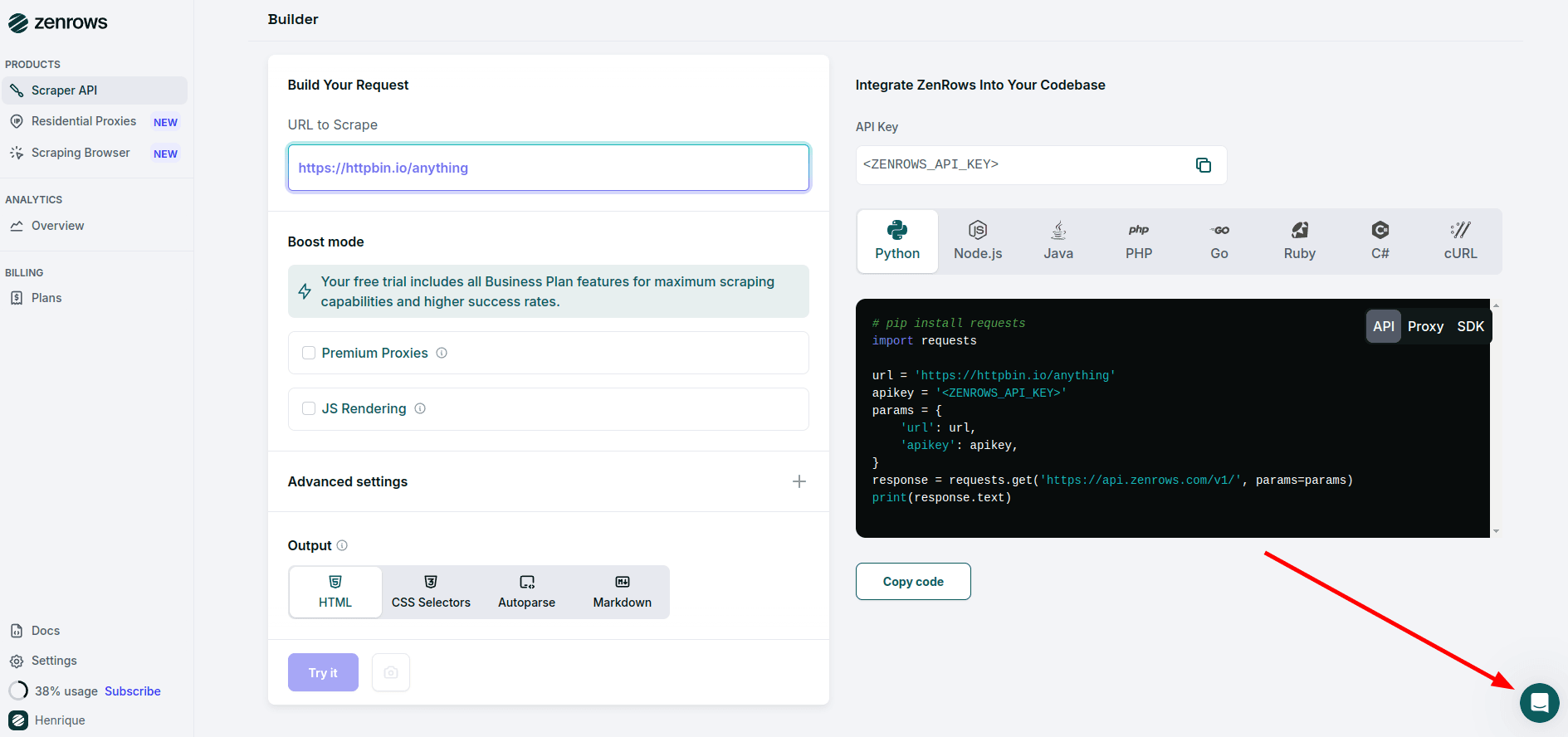

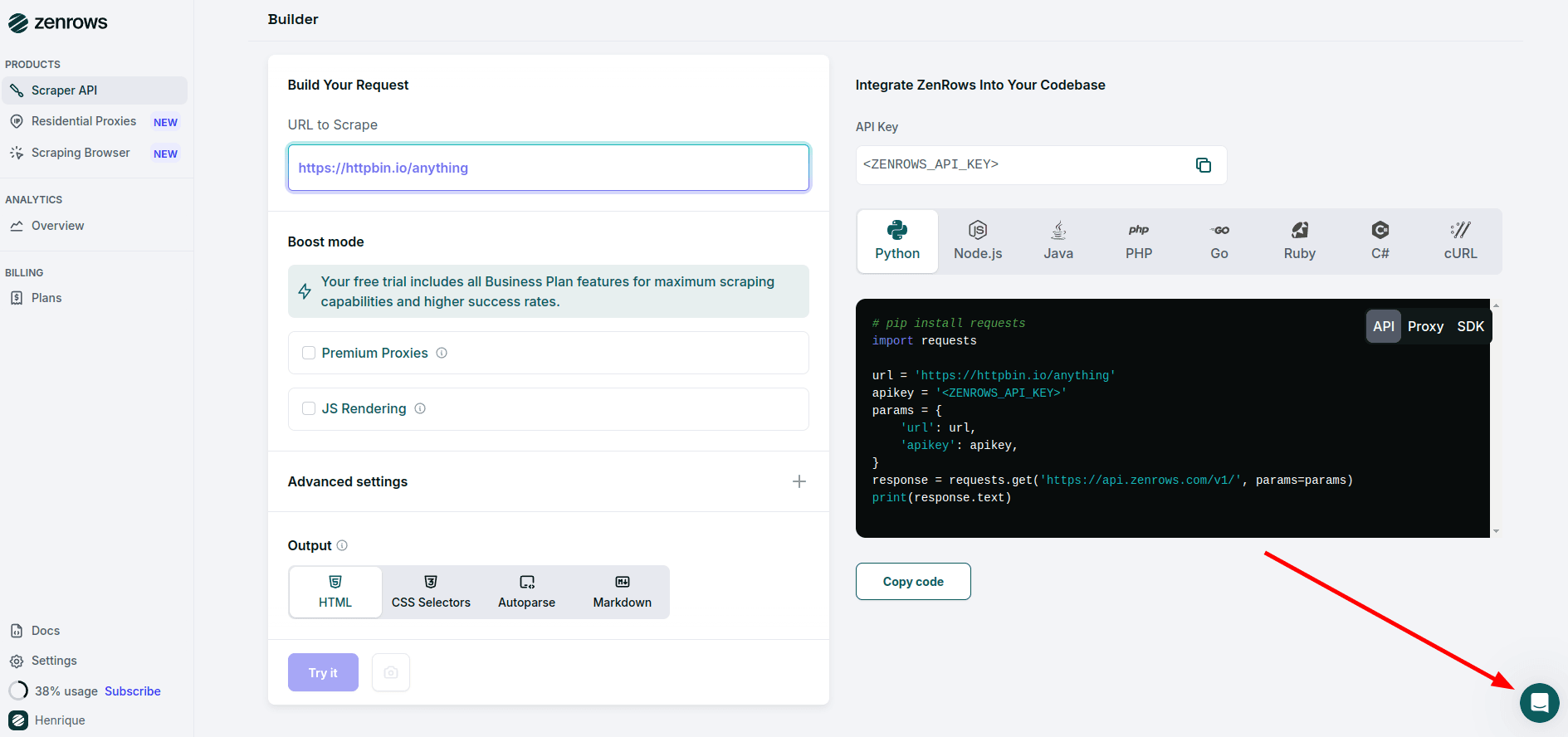

Perform Your First API Request

In this step, you will send your first request to ZenRows using the Axios library to scrape content from a simple URL. We will use the HTTPBin.io/get endpoint to demonstrate how ZenRows processes the request and returns the data. Here’s an example:

scraper.js
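A minimal sketch of this first request, assuming the ZenRows endpoint is https://api.zenrows.com/v1/ and that it takes the target page and your key as the url and apikey query parameters. The helper names buildRequest and main are ours, chosen for illustration.

```javascript
// scraper.js
const ZENROWS_ENDPOINT = 'https://api.zenrows.com/v1/'; // assumed API endpoint
const API_KEY = 'YOUR_ZENROWS_API_KEY';

// Build the Axios request config: the target page and the API key
// travel as query parameters of a GET request to ZenRows.
function buildRequest(targetUrl) {
  return {
    method: 'get',
    url: ZENROWS_ENDPOINT,
    params: { url: targetUrl, apikey: API_KEY },
  };
}

async function main() {
  // Required here so Axios is only loaded when a request is actually sent.
  const axios = require('axios');
  const response = await axios.request(buildRequest('https://httpbin.io/get'));
  console.log(response.data);
}

// Skip the network call until the placeholder key has been replaced.
if (API_KEY === 'YOUR_ZENROWS_API_KEY') {
  console.log('Replace YOUR_ZENROWS_API_KEY before sending the request.');
} else {
  main().catch((error) => console.error(error.message));
}
```

Keeping the config in its own function makes it easy to add further ZenRows parameters later without touching the request code.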

Replace YOUR_ZENROWS_API_KEY with your actual API key and run the script with node scraper.js.

Scrape More Complex Web Pages

While scraping simple sites like HTTPBin is straightforward, many websites, especially those with dynamic content or strict anti-scraping measures, require additional features. ZenRows allows you to bypass these defenses by enabling JavaScript Rendering and using Premium Proxies. For example, if you try to scrape a page like G2’s Jira reviews without any extra configurations, you’ll encounter an error:

scraper.js
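A request that gets past such protections differs from a plain one only in two extra parameters. The sketch below assumes they are passed as js_render=true and premium_proxy=true, and that the G2 page lives at the address shown. Both the URL and the helper names are our assumptions. The HTTP client is passed in as an argument, so the function works with Axios or with a stub.

```javascript
const ZENROWS_ENDPOINT = 'https://api.zenrows.com/v1/'; // assumed API endpoint
const API_KEY = 'YOUR_ZENROWS_API_KEY';
// Assumed address of the G2 Jira reviews page; adjust it to your target.
const TARGET_URL = 'https://www.g2.com/products/jira/reviews';

// Query parameters for a page that needs a rendered browser and premium proxies.
function buildProtectedParams(targetUrl) {
  return {
    url: targetUrl,
    apikey: API_KEY,
    js_render: 'true', // JavaScript Rendering
    premium_proxy: 'true', // Premium Proxies
  };
}

// httpClient is anything with an Axios-style get(url, config) method.
async function scrapeProtected(httpClient, targetUrl) {
  const response = await httpClient.get(ZENROWS_ENDPOINT, {
    params: buildProtectedParams(targetUrl),
  });
  return response.data;
}
```

With Axios installed and a real key in place, scrapeProtected(require('axios'), TARGET_URL) would resolve to the response body.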

Troubleshooting

Request failures can happen for various reasons. While some issues can be resolved by adjusting ZenRows parameters, others are beyond your control, such as the target server being temporarily down. Below are some quick troubleshooting steps you can take:

Retry the Request

Network issues or temporary failures can cause your request to fail. Implementing retry logic can solve this by automatically repeating the request. Learn how to add retries in our Node.js Axios retry guide.

Example of retry logic using Axios:

scraper.js
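One way to sketch retry logic is a small hand-rolled loop with exponential backoff, shown here instead of a dedicated retry package. The names withRetries and isRetryable, the choice of retryable status codes, and the default delays are our assumptions, not ZenRows recommendations.

```javascript
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));

// Axios attaches `response` to errors that came back with an HTTP status.
// Errors without a response (for example network failures) are retried too.
function isRetryable(error) {
  const status = error.response ? error.response.status : undefined;
  return status === undefined || status === 429 || status >= 500;
}

// Call sendRequest until it succeeds, the error is not retryable,
// or the retry budget is used up.
async function withRetries(sendRequest, { retries = 3, baseDelayMs = 1000 } = {}) {
  for (let attempt = 0; ; attempt += 1) {
    try {
      return await sendRequest();
    } catch (error) {
      if (attempt >= retries || !isRetryable(error)) throw error;
      // Exponential backoff: baseDelayMs, then 2x, then 4x, ...
      await sleep(baseDelayMs * 2 ** attempt);
    }
  }
}
```

A call could look like withRetries(() => axios.get('https://api.zenrows.com/v1/', { params })), where params holds your url and apikey values.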

Verify the Site is Accessible in Your Country

Sometimes, the target site might be region-restricted and only accessible to proxies in certain locations. ZenRows automatically selects the best proxy, but if the site is available only in specific regions, specify a geolocation using the proxy_country parameter.
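As a sketch of how a geolocation could be added to a request, the helper below returns a copy of the query parameters with proxy_country set. It also enables premium_proxy, on the assumption that country selection is a Premium Proxy option. The helper name withProxyCountry is ours.

```javascript
// Return a copy of the ZenRows query parameters pinned to one country.
function withProxyCountry(params, countryCode) {
  return {
    ...params,
    premium_proxy: 'true', // assumption: geolocation needs Premium Proxies
    proxy_country: countryCode,
  };
}

// Choose a proxy in the US for a simple test request.
const params = withProxyCountry(
  { url: 'https://httpbin.io/get', apikey: 'YOUR_ZENROWS_API_KEY' },
  'us',
);
console.log(params);
```

The resulting object can be passed as the params option of an Axios request to the ZenRows endpoint.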

If the target site requires access from a specific region, adding the proxy_country parameter will help. For example, setting proxy_country to us selects a proxy in the US.

Check if the Site is Publicly Accessible

Some websites may require a session, so verifying if the site can be accessed without logging in is a good idea. Open the target page in an incognito browser to check this.

You must handle session management in your requests if authentication credentials are required. You can learn how to scrape a website that requires authentication in our guide: Web scraping with Python.

Get Help From ZenRows Experts

If the issue persists despite following these tips, our support team is available to assist you. Use the Playground page or contact us via email to get personalized help from ZenRows experts.

Frequently Asked Questions (FAQ)

How can I bypass Cloudflare and other protections?

To successfully bypass Cloudflare or similar security mechanisms, you’ll need to enable both js_render and premium_proxy in your requests. These features simulate a full browser environment and use high-quality residential proxies to avoid detection.

You can also enhance your request by adding options like wait or wait_for to ensure the page fully loads before extracting data, improving accuracy.
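The waiting options can be sketched as optional additions to the same parameter set. This example assumes wait_for takes a CSS selector and wait takes a delay in milliseconds. The helper name buildRenderParams and the .review-card selector are hypothetical.

```javascript
// Build ZenRows query parameters with rendering, premium proxies,
// and optional waiting behaviour.
function buildRenderParams(targetUrl, apiKey, { waitFor, waitMs } = {}) {
  const params = {
    url: targetUrl,
    apikey: apiKey,
    js_render: 'true',
    premium_proxy: 'true',
  };
  if (waitFor) params.wait_for = waitFor; // assumed: CSS selector to wait for
  if (waitMs) params.wait = String(waitMs); // assumed: fixed delay in milliseconds
  return params;
}

// Wait until a (hypothetical) review card appears before returning the HTML.
console.log(
  buildRenderParams('https://www.example.com/', 'YOUR_ZENROWS_API_KEY', {
    waitFor: '.review-card',
  }),
);
```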

How can I ensure my requests don't fail?

You can configure retry logic to handle failed HTTP requests. Learn more in our guide on retrying requests.

How do I extract specific content from a page?

You can use the css_extractor parameter to directly extract content from a page using CSS selectors. Find out more in our tutorial on data parsing.
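A minimal sketch of building such a request, assuming css_extractor accepts a JSON-encoded object that maps output names to CSS selectors. The helper name buildExtractorParams, the example.com target, and the title and paragraphs keys are illustrative choices.

```javascript
// Build ZenRows query parameters that ask for specific elements
// instead of the full HTML.
function buildExtractorParams(targetUrl, apiKey, selectors) {
  return {
    url: targetUrl,
    apikey: apiKey,
    // The selector map is sent as a JSON string; Axios URL-encodes it.
    css_extractor: JSON.stringify(selectors),
  };
}

const params = buildExtractorParams(
  'https://www.example.com/',
  'YOUR_ZENROWS_API_KEY',
  { title: 'h1', paragraphs: 'p' },
);
console.log(params.css_extractor);
```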

Can I integrate ZenRows with Node.js and Cheerio?

Yes! You can integrate ZenRows with Node.js and Cheerio for efficient HTML parsing and web scraping. Check out our guide to learn how to combine these tools: Node.js and Cheerio integration.

How can I simulate user interactions on the target page?

Use the js_render and js_instructions features to simulate actions such as clicking buttons or filling out forms. Discover more about interacting with web pages in our JavaScript instructions guide.
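As a sketch, the instructions can be written as an array of small objects and sent as a JSON string alongside js_render. The instruction names used here (wait_for, fill, click, wait) are taken to be supported, and the selectors and search text are hypothetical. Check the JavaScript instructions guide for the full list.

```javascript
// Steps to perform on the page, in order. Selectors are hypothetical.
const instructions = [
  { wait_for: '#search' }, // wait for the search box to appear
  { fill: ['#search', 'laptop'] }, // type into it
  { click: '#submit' }, // submit the form
  { wait: 500 }, // give the results half a second to load
];

// Build ZenRows query parameters that run the steps in a rendered browser.
function buildInteractionParams(targetUrl, apiKey, steps) {
  return {
    url: targetUrl,
    apikey: apiKey,
    js_render: 'true', // instructions run inside the rendered page
    js_instructions: JSON.stringify(steps),
  };
}

console.log(
  buildInteractionParams('https://www.example.com/', 'YOUR_ZENROWS_API_KEY', instructions),
);
```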

How can I scrape faster using ZenRows?

You can scrape multiple URLs simultaneously by making concurrent API calls. Check out our guide on using concurrency to boost your scraping speed.
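One way to sketch concurrent calls is a small worker pool that keeps at most a fixed number of requests in flight and records each outcome without stopping on the first failure. The helper name mapWithConcurrency and the result shape (borrowed from Promise.allSettled) are our choices. Pick a limit that fits the concurrency your plan allows.

```javascript
// Run worker(item) for every item with at most `limit` calls in flight.
// Results keep the input order and never reject as a whole.
async function mapWithConcurrency(items, limit, worker) {
  const results = new Array(items.length);
  let next = 0;

  // Each runner pulls the next unclaimed index until none are left.
  async function runWorker() {
    while (next < items.length) {
      const index = next;
      next += 1;
      try {
        results[index] = { status: 'fulfilled', value: await worker(items[index]) };
      } catch (reason) {
        results[index] = { status: 'rejected', reason };
      }
    }
  }

  const runners = Array.from({ length: Math.min(limit, items.length) }, () => runWorker());
  await Promise.all(runners);
  return results;
}
```

With Axios, the worker could be (url) => axios.get('https://api.zenrows.com/v1/', { params: { url, apikey: API_KEY } }), called as mapWithConcurrency(urls, 5, worker).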