What Is Make?

Make is a no-code platform for automating tasks by connecting applications and services. The platform is built around scenarios: workflows in which connected apps and services execute tasks in sequence, driven by the user’s input. Make integrates with many services, including ZenRows, Google Sheets, and Gmail, and lets users link processes visually. ZenRows’ integration with Make enables users to automate ZenRows’ web scraping service, from data collection to scheduling, storage, and monitoring.

Available ZenRows Integrations

- Monitor your API usage: Returns usage information for the Universal Scraper API.

- Make an API Call: Sends a GET, POST, or PUT request to a target URL via the Universal Scraper API.

- Scraping a URL with Autoparse: Automates the Universal Scraper API to auto-parse a target website’s HTML elements. It returns structured JSON data.

The autoparse option only works for some websites. Learn more about how it works in the autoparse FAQ.

- Scraping a URL with CSS Selectors: Automates data extraction from an array of CSS selectors.
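For readers who want to see what the Autoparse module roughly corresponds to outside Make, here is a minimal sketch that builds the equivalent request URL. The endpoint and the `apikey`, `url`, and `autoparse` parameter names are assumptions that do not appear on this page, so check them against the ZenRows API reference before relying on them. The API key and product URL are placeholders.

```python
from urllib.parse import urlencode

# Assumed endpoint of the Universal Scraper API (not stated on this page).
API_ENDPOINT = "https://api.zenrows.com/v1/"


def build_autoparse_request(api_key: str, target_url: str) -> str:
    """Return a GET URL that asks the API to auto-parse target_url."""
    params = {
        "apikey": api_key,    # assumed parameter name
        "url": target_url,    # assumed parameter name
        "autoparse": "true",  # assumed parameter name
    }
    # urlencode percent-encodes the target URL so it survives as a query value.
    return f"{API_ENDPOINT}?{urlencode(params)}"


request_url = build_autoparse_request(
    "YOUR_ZENROWS_API_KEY",              # placeholder
    "https://www.amazon.com/dp/EXAMPLE", # placeholder product URL
)
print(request_url)
```

Inside Make, the module handles this step for you; the sketch only illustrates which inputs the module needs.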

Watch the Video Tutorial

Learn how to set up the Make ↔ ZenRows integration step-by-step by watching this video tutorial.

Real-World End-to-End Integration Example

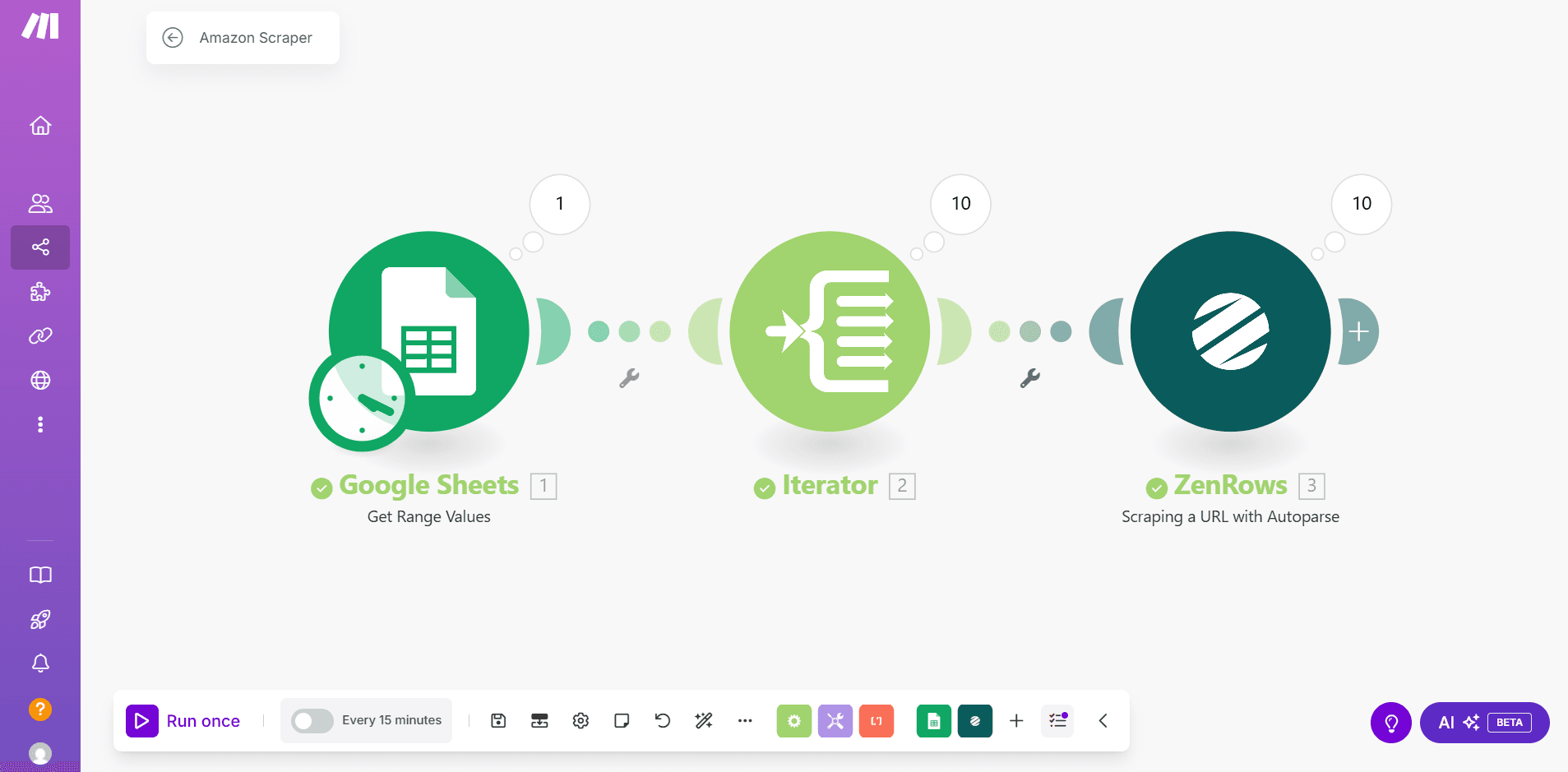

We’ll build an end-to-end Amazon scraping Scenario using Make and ZenRows with the following steps:

- Pull target URLs from Google Sheets into Make’s Iterator

- Utilize ZenRows’ Scraping a URL with Autoparse integration option to extract data from URLs automatically

- Save the extracted data to Google Sheets

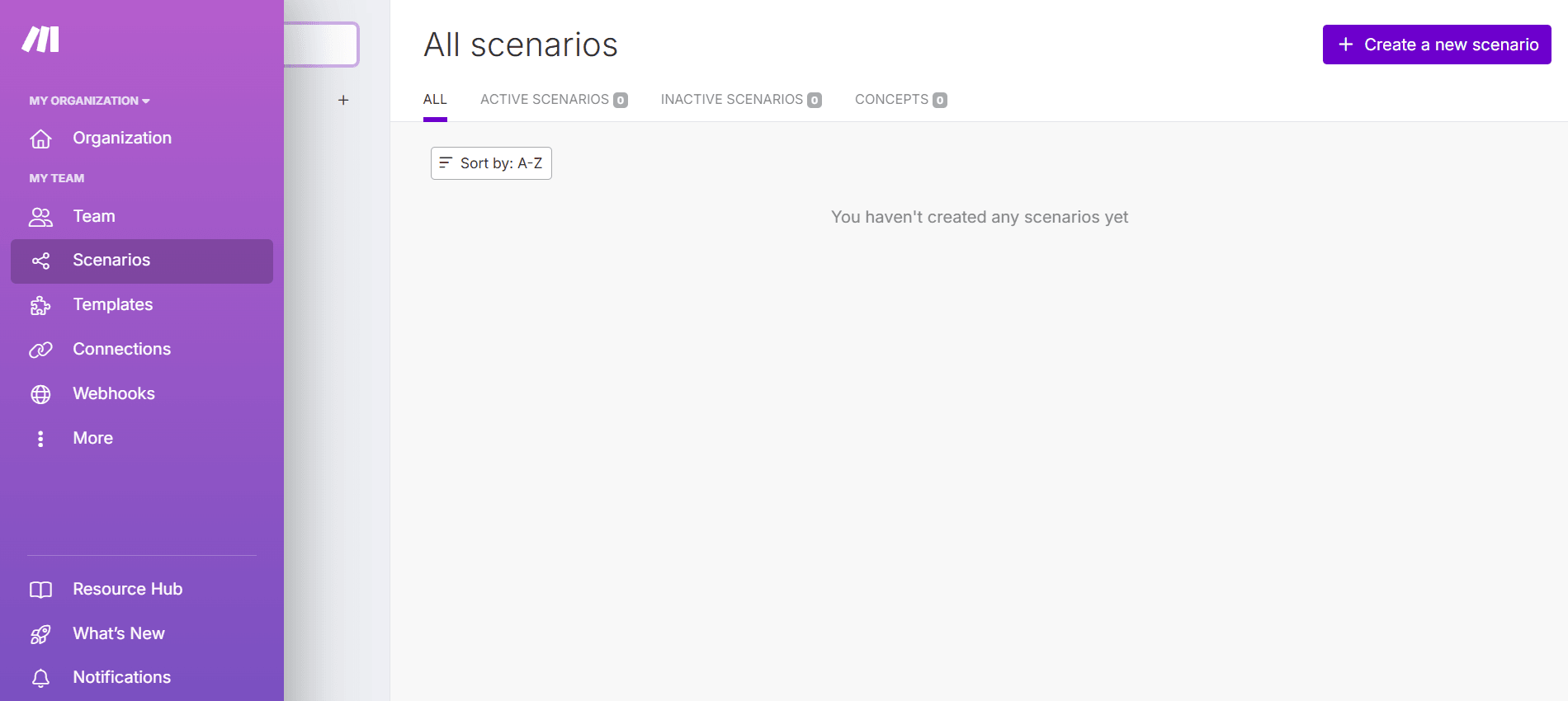

Step 1: Create a new Scenario on Make

- Log in to your account at https://make.com/

- Go to Scenarios on the left sidebar

- Click + Create a new scenario at the top right

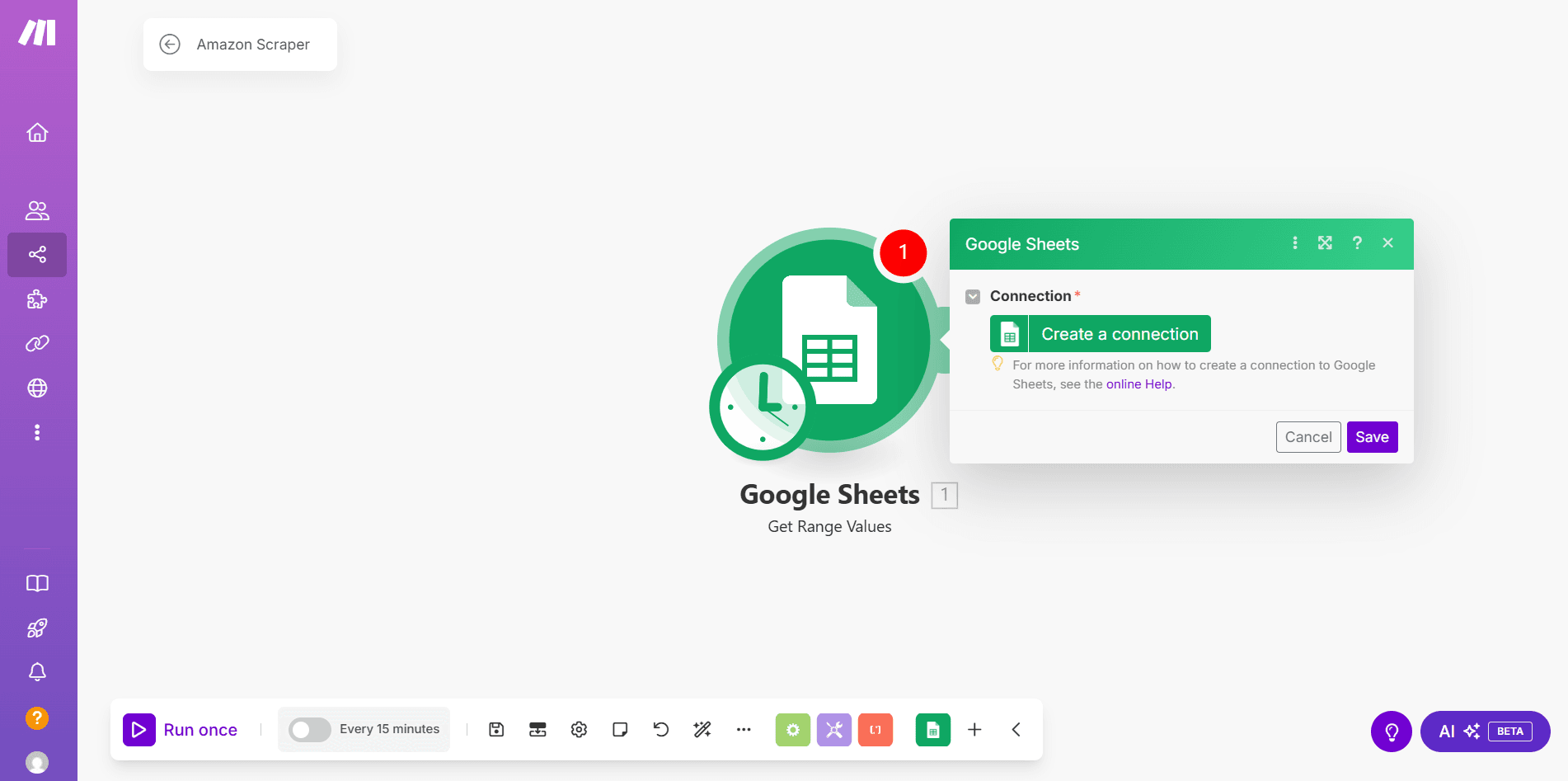

Step 2: Connect the Google Sheets containing the target URLs

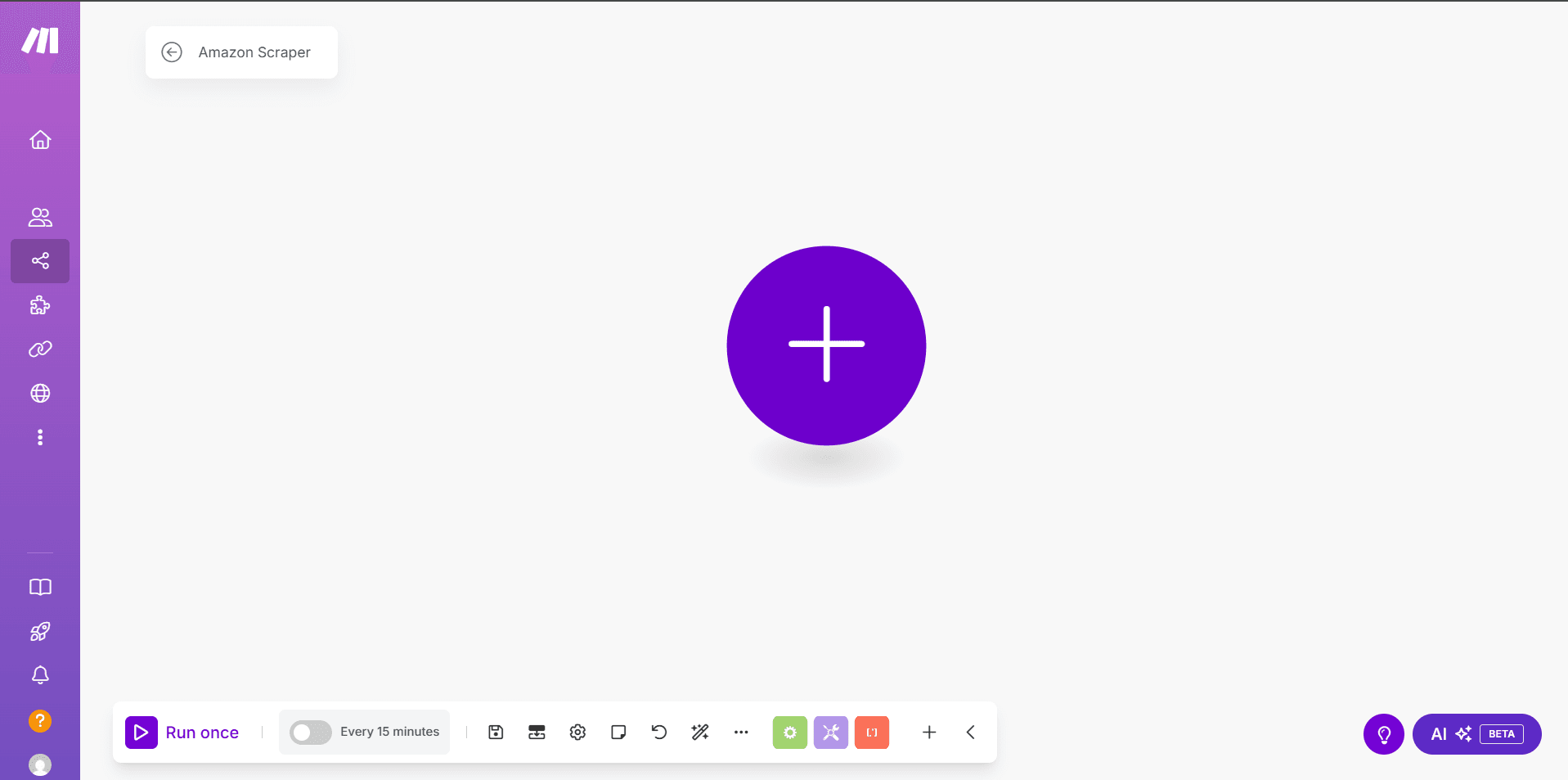

- Enter your Scenario’s name at the top-left (e.g., Amazon Scraper)

- Click the + icon in the middle of the screen

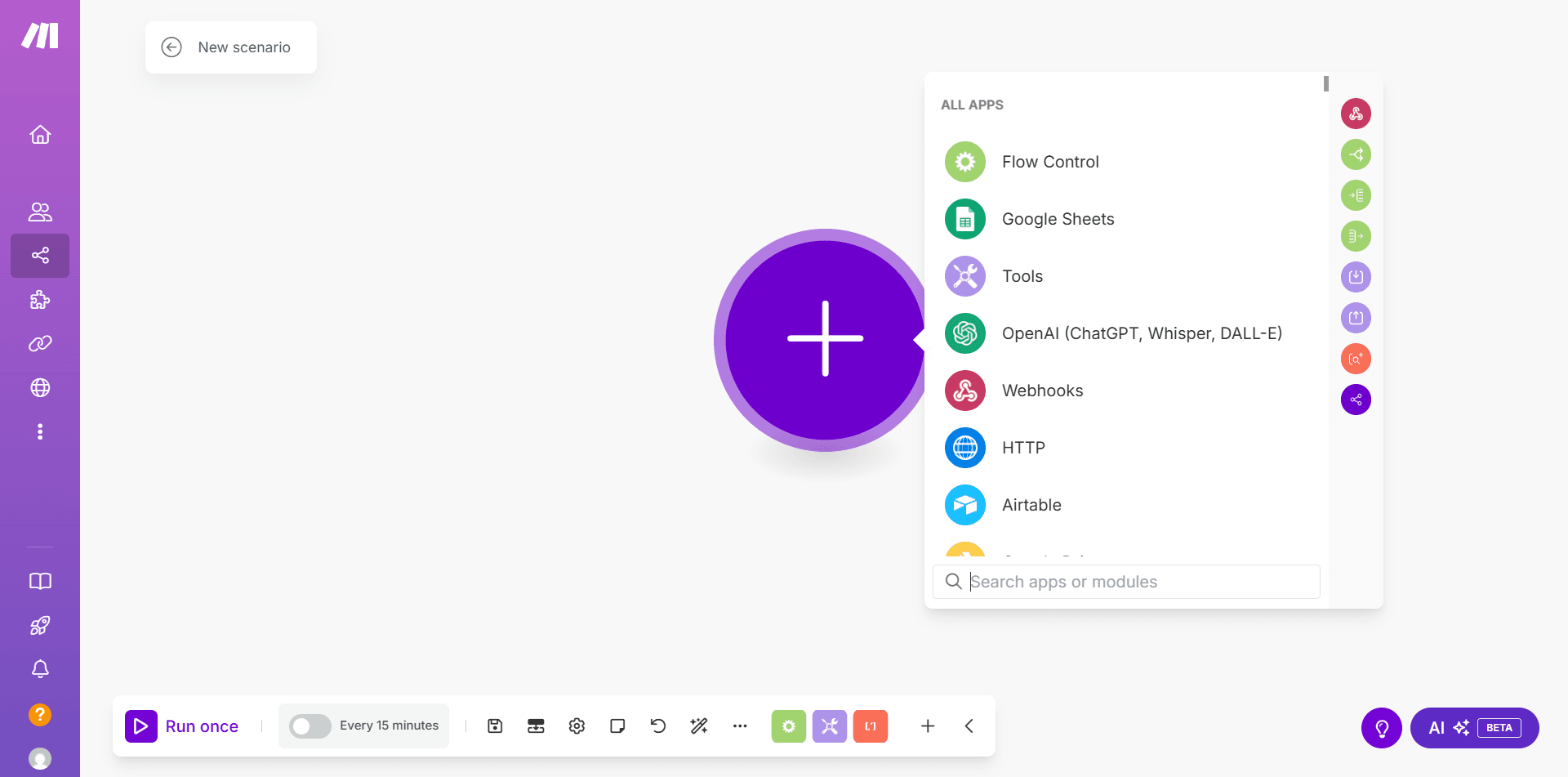

- Search and select Google Sheets from the modal

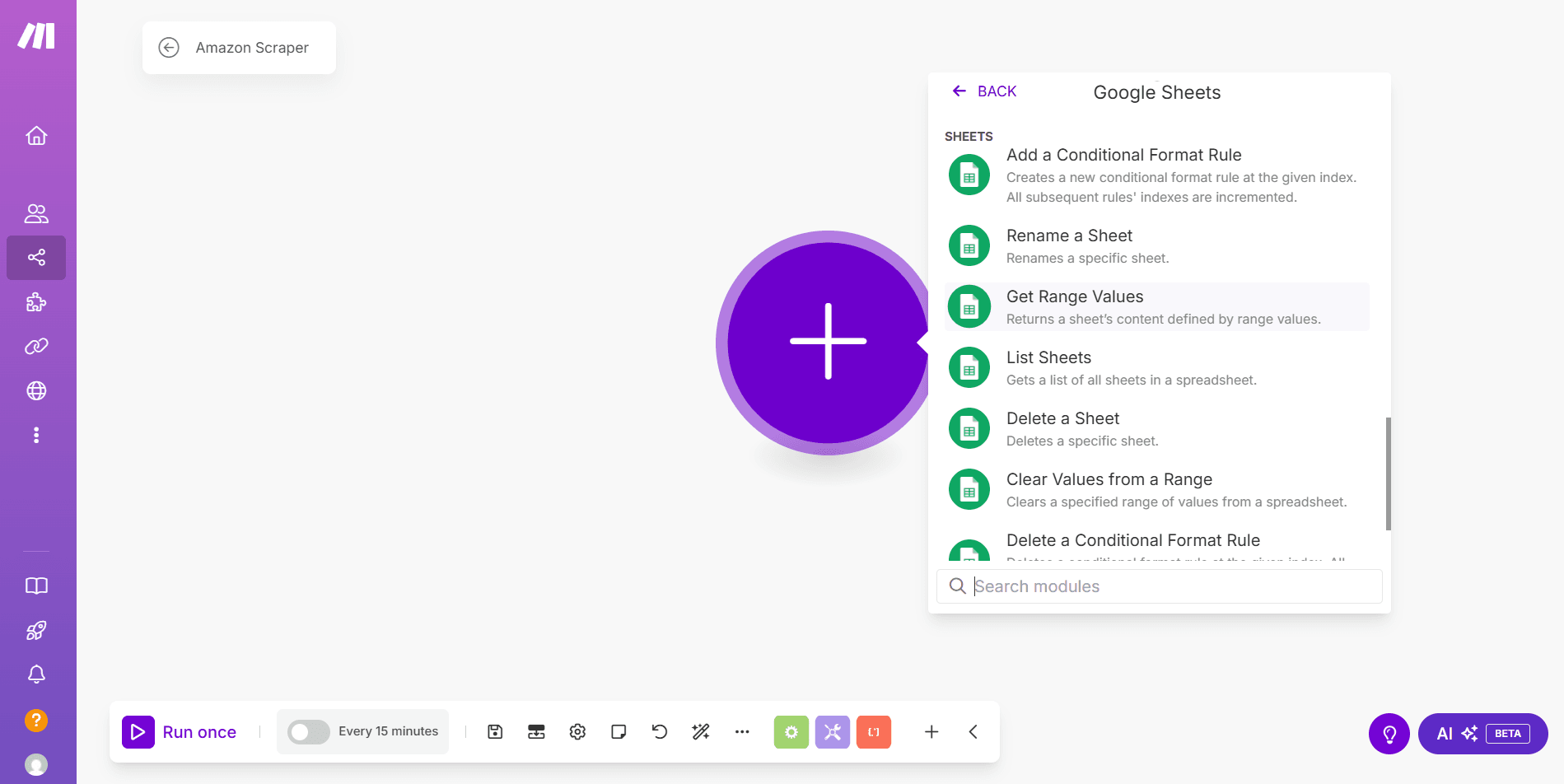

- Select Get Range Values from the options

- If prompted, click Create a connection to link Make with your Google account

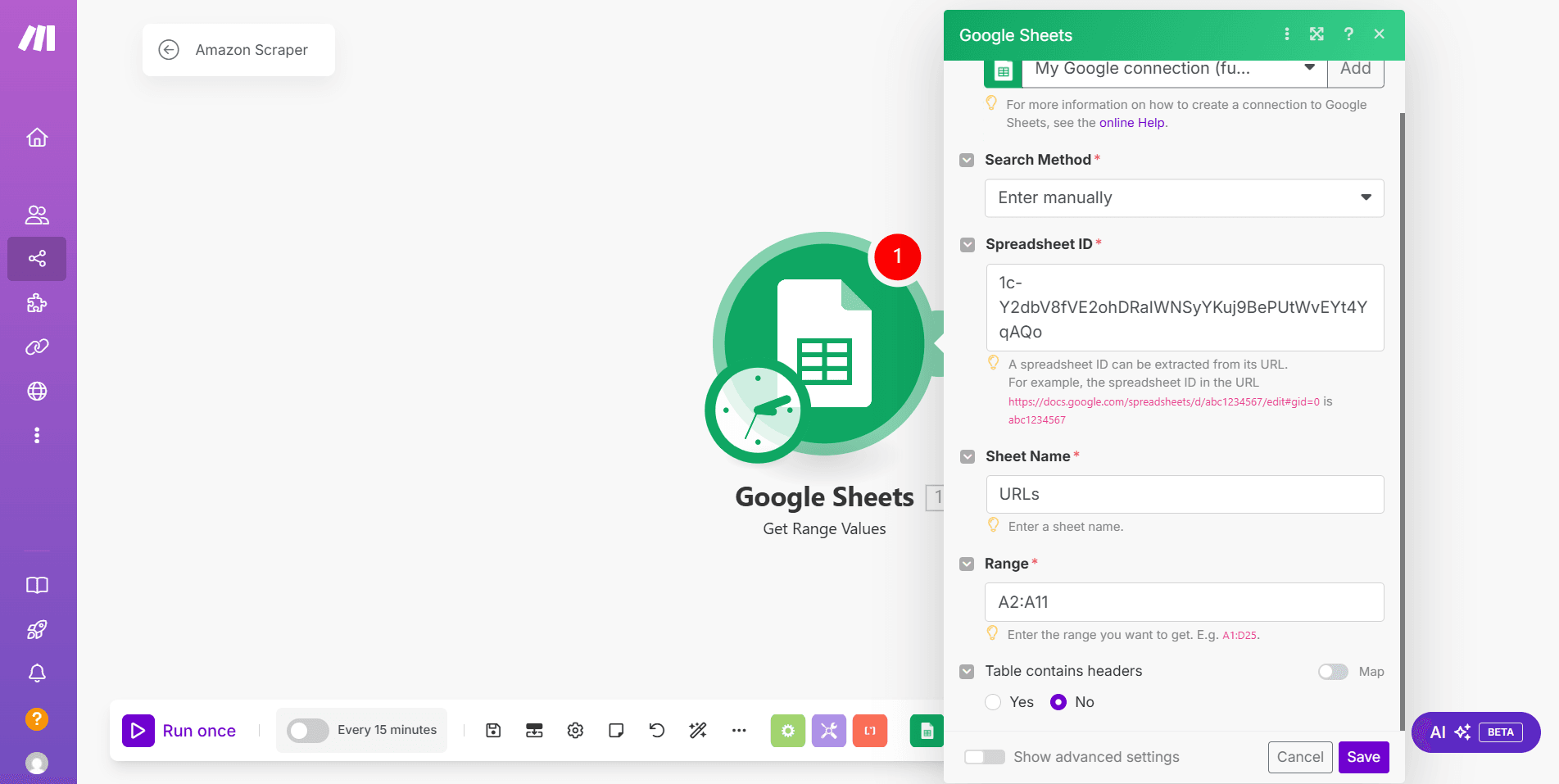

- Click the Search Method dropdown and select Enter manually

- Paste the Google Sheets ID in the Spreadsheet ID field

- Enter the sheet’s name in the Sheet Name field

- Provide the range of cells containing the target URLs in the Range field (e.g., A2:A11)

- Click Save

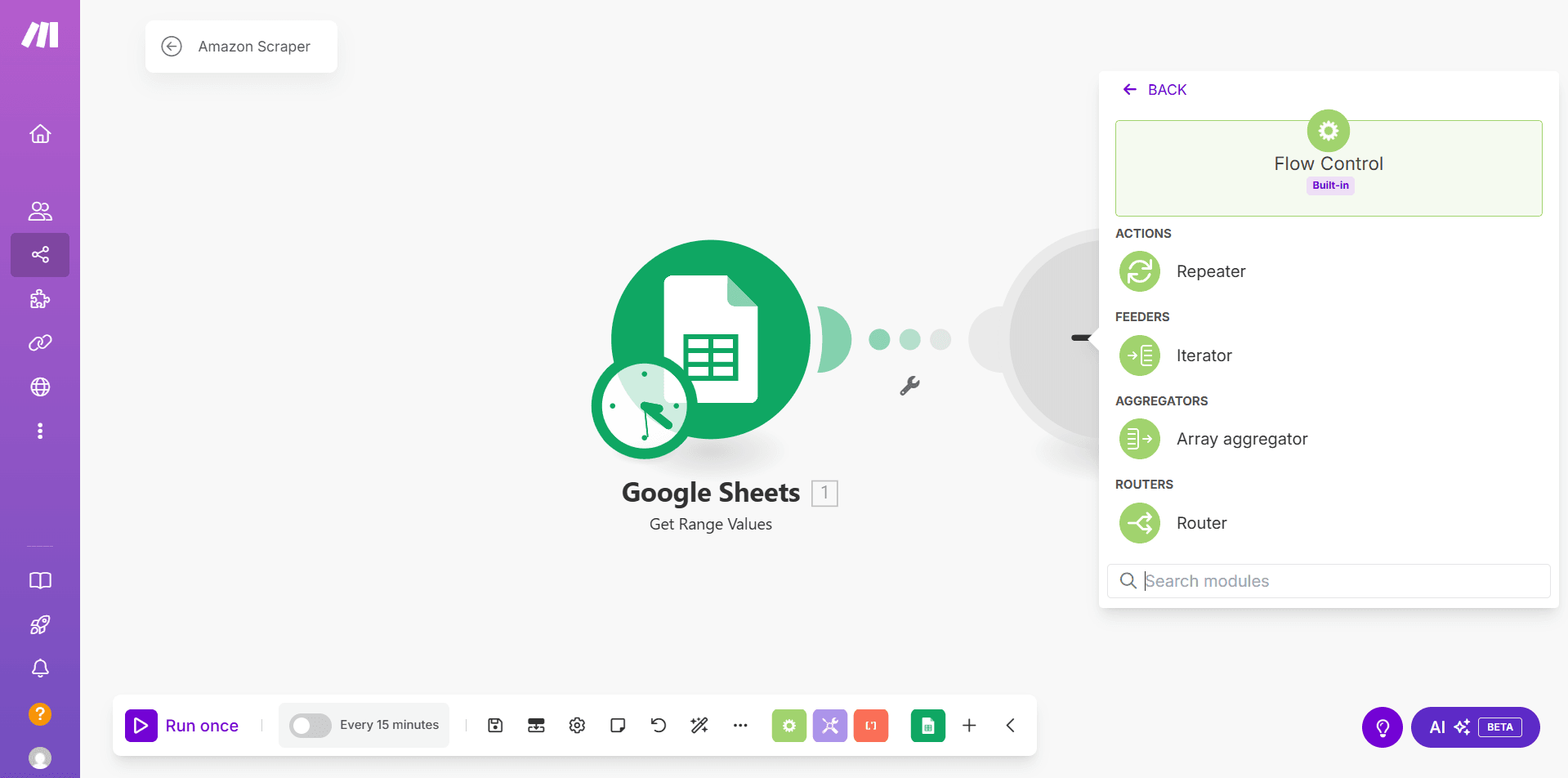
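The Spreadsheet ID is the long token in the sheet’s address, between `/d/` and the next slash. As a small sketch, the helper below pulls it out of a full Google Sheets URL. The function name and the sample URL are hypothetical and serve only as illustration.

```python
import re


def extract_spreadsheet_id(sheet_url: str) -> str:
    """Return the ID segment of a Google Sheets URL.

    Expects a URL shaped like
    https://docs.google.com/spreadsheets/d/<ID>/edit#gid=0
    """
    match = re.search(r"/spreadsheets/d/([A-Za-z0-9_-]+)", sheet_url)
    if match is None:
        raise ValueError(f"No spreadsheet ID found in: {sheet_url}")
    return match.group(1)


# Hypothetical URL, used only to show the shape of the input.
sample_url = "https://docs.google.com/spreadsheets/d/1AbC_dEf-123/edit#gid=0"
print(extract_spreadsheet_id(sample_url))
```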

Step 3: Load the Target URLs into an Iterator

- Click + next to the Google Sheets module to create a new connection

- Search and select Flow Control, then click Iterator

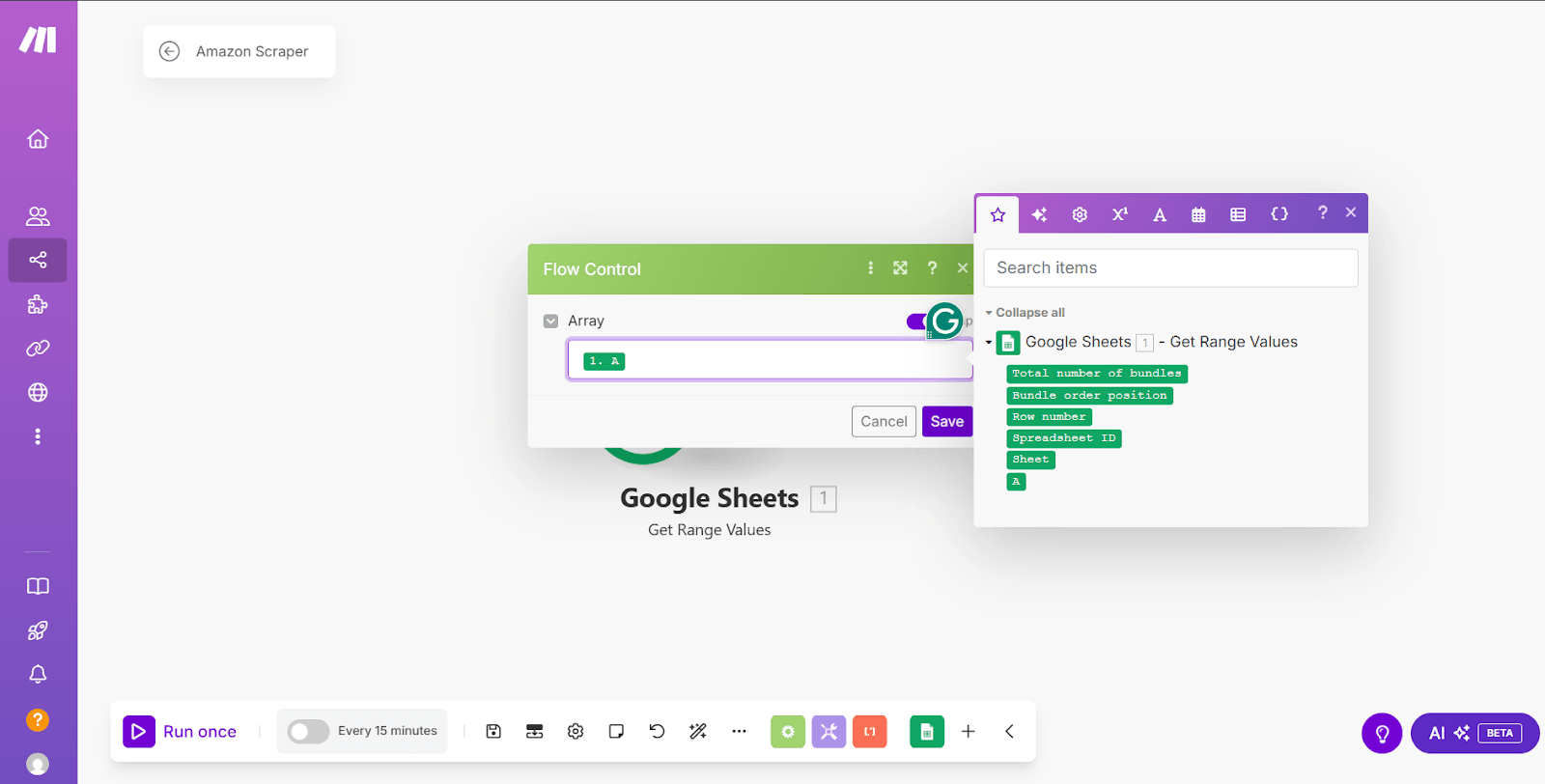

- Place your cursor in the Array field and select the column containing the target URLs (i.e., A) from the integrated Google Sheets

- Click Save

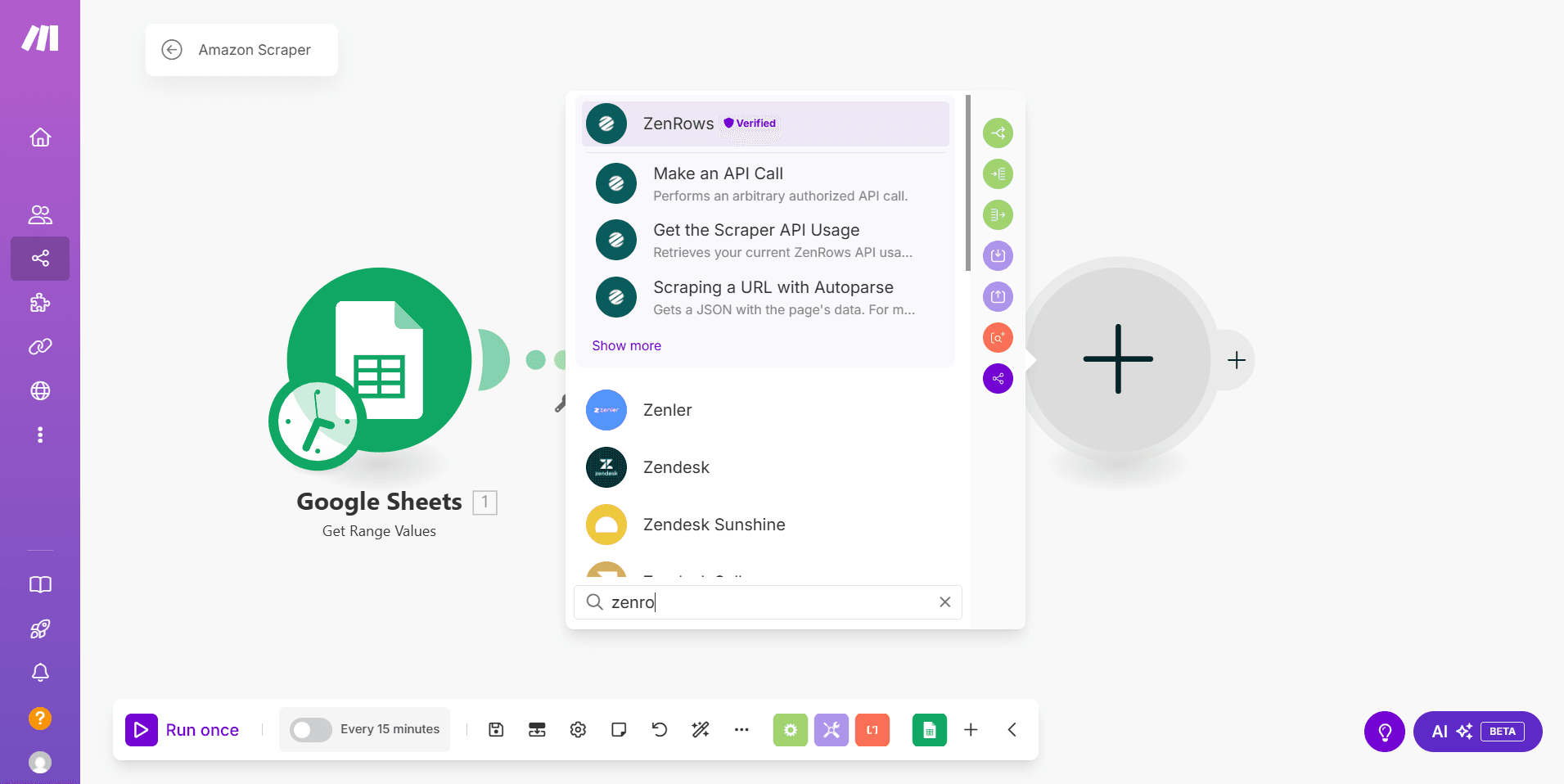

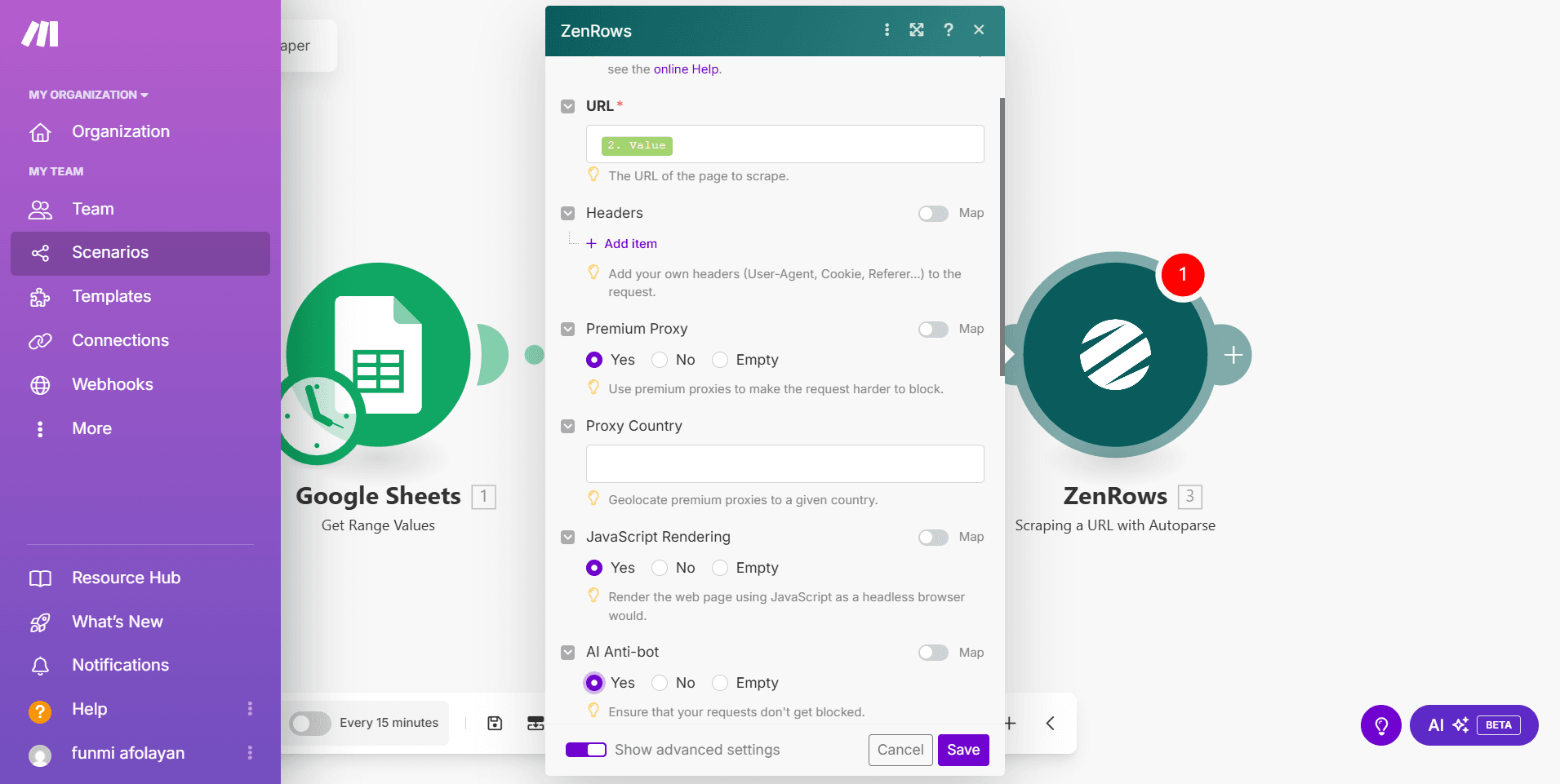

Step 4: Connect ZenRows with the Make workflow

- Click the + icon

- Search and select ZenRows

- Click Scrape a URL with Autoparse

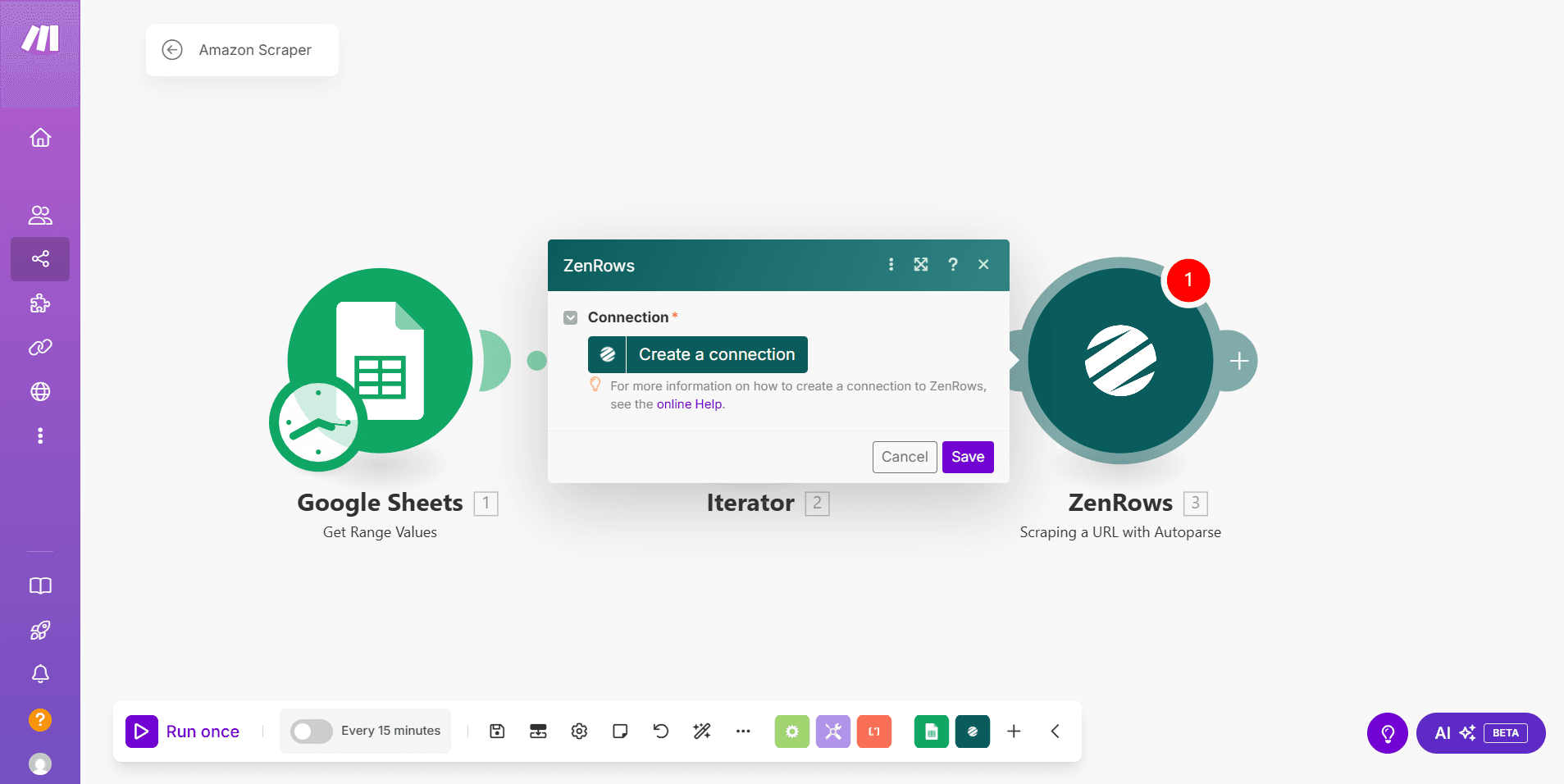

- Click Create a Connection

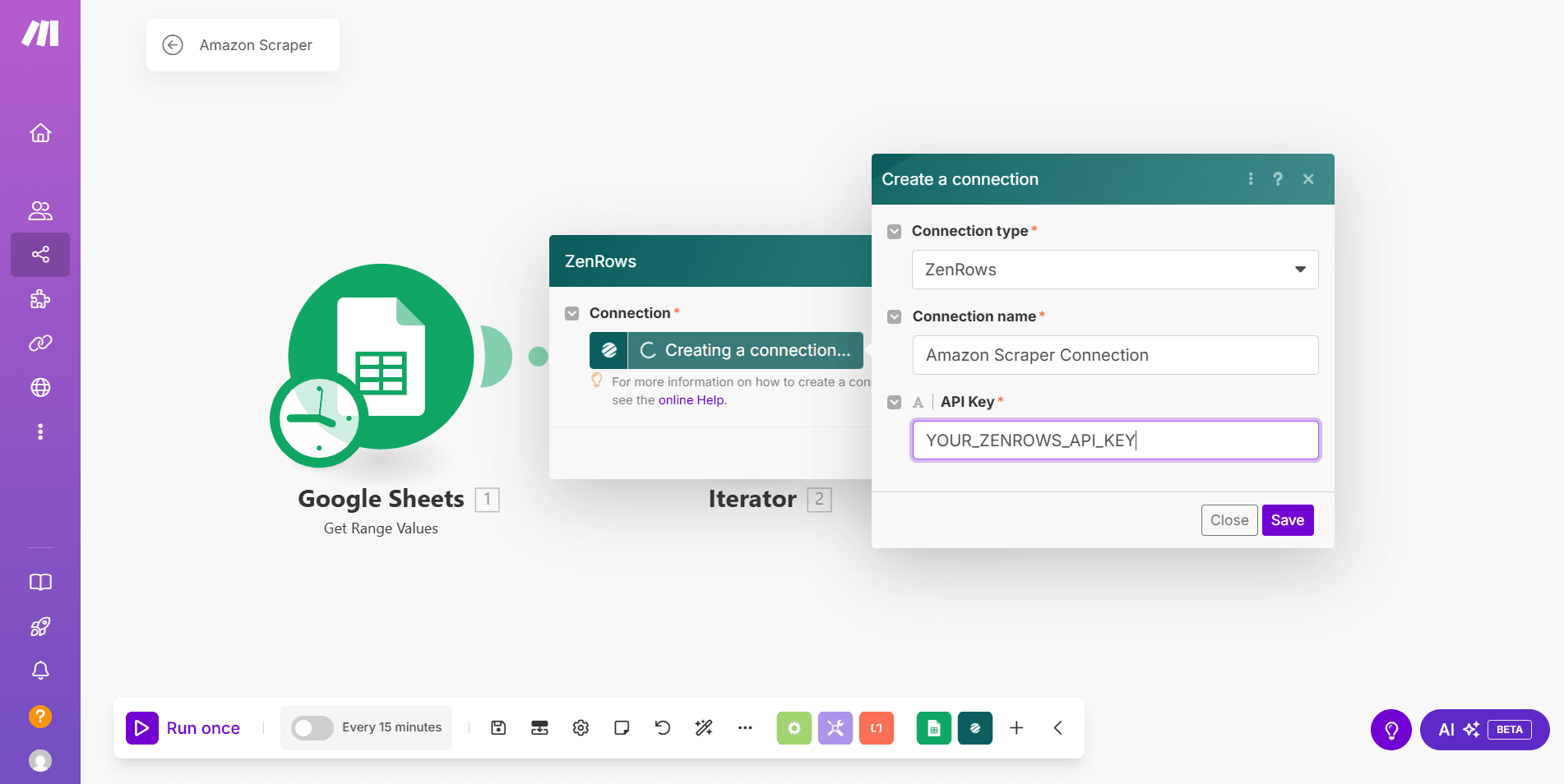

- Under Connection type, select ZenRows

- Enter a connection name in the Connection name field

- Provide your ZenRows API key in the API Key field

- Click Save

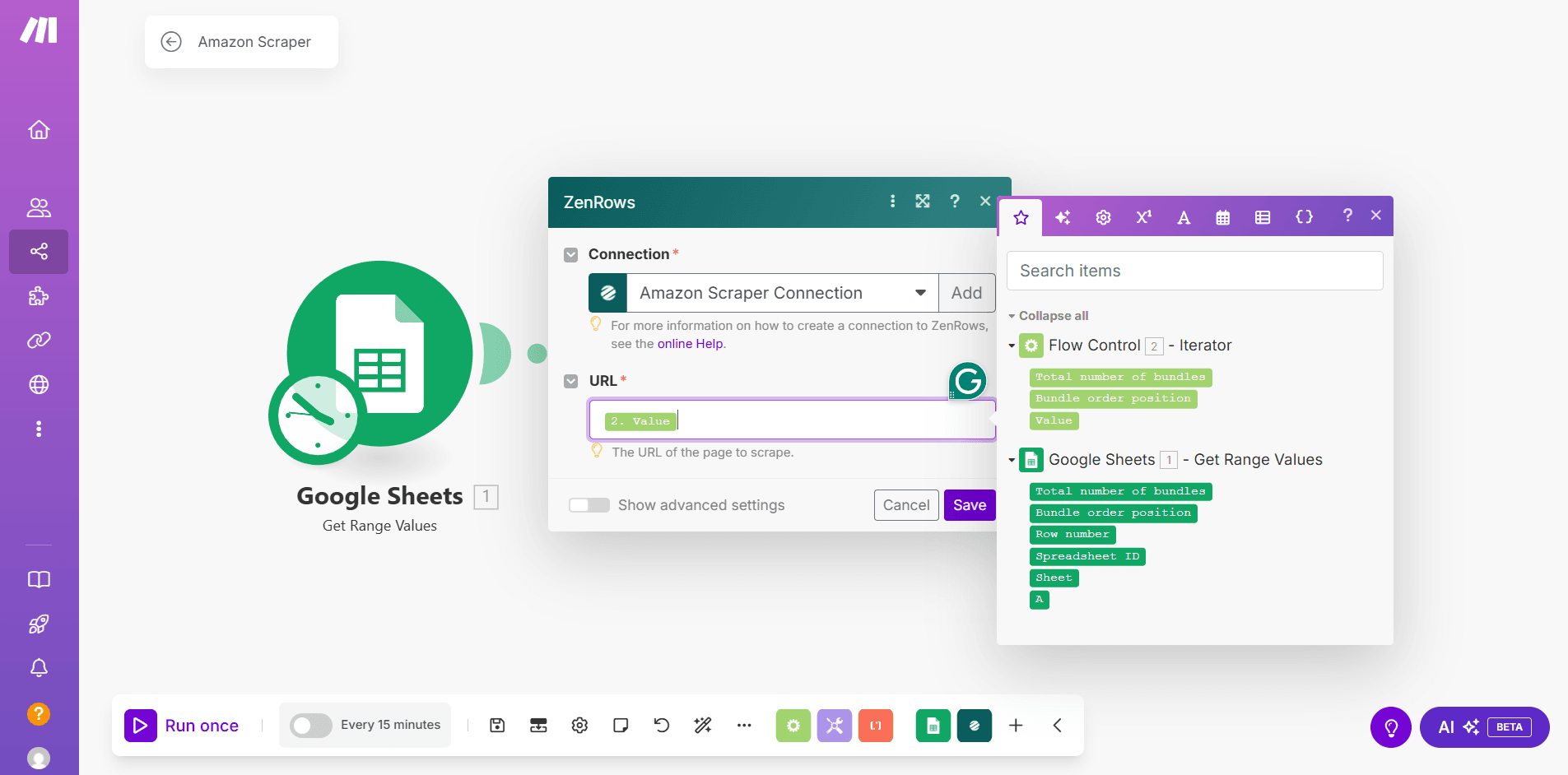

- Place your cursor in the URL field, then select Value from the Flow Control option in the modal box

- Click Show advanced settings

- Select Yes for Premium Proxy and JavaScript Rendering

- Click Save

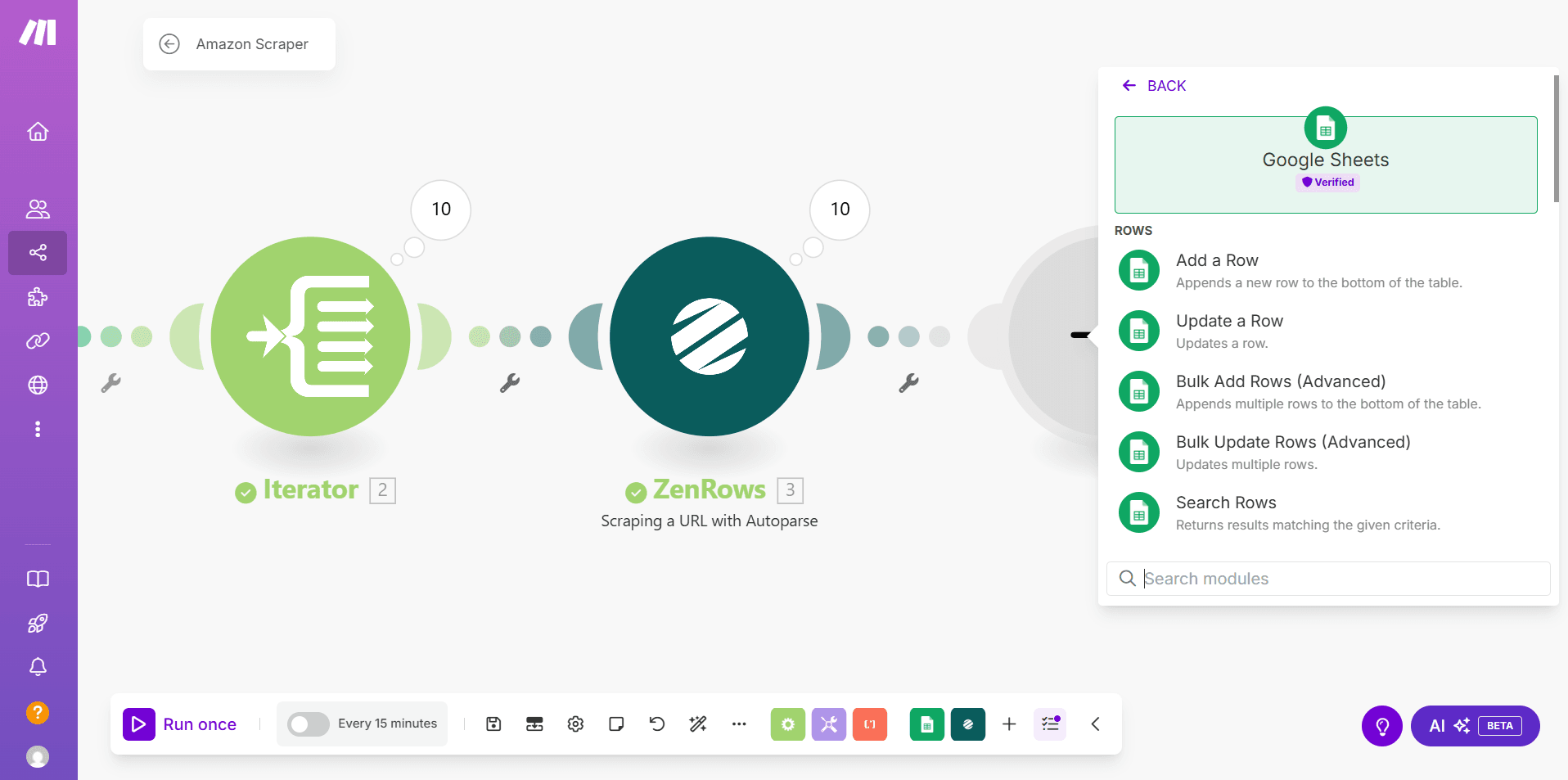

Step 5: Store the scraped data in Google Sheets

- Click Run once in the lower left corner to scrape data from the URLs

- Add another named sheet (e.g., Products) to the connected Google Sheets. Include the following columns in the new sheet:

  - Name

  - Rating Value

  - Review Count

  - Price (USD)

  - Out of Stock

- Click the + icon to add another module. Then, select Google Sheets.

- Select Add a row

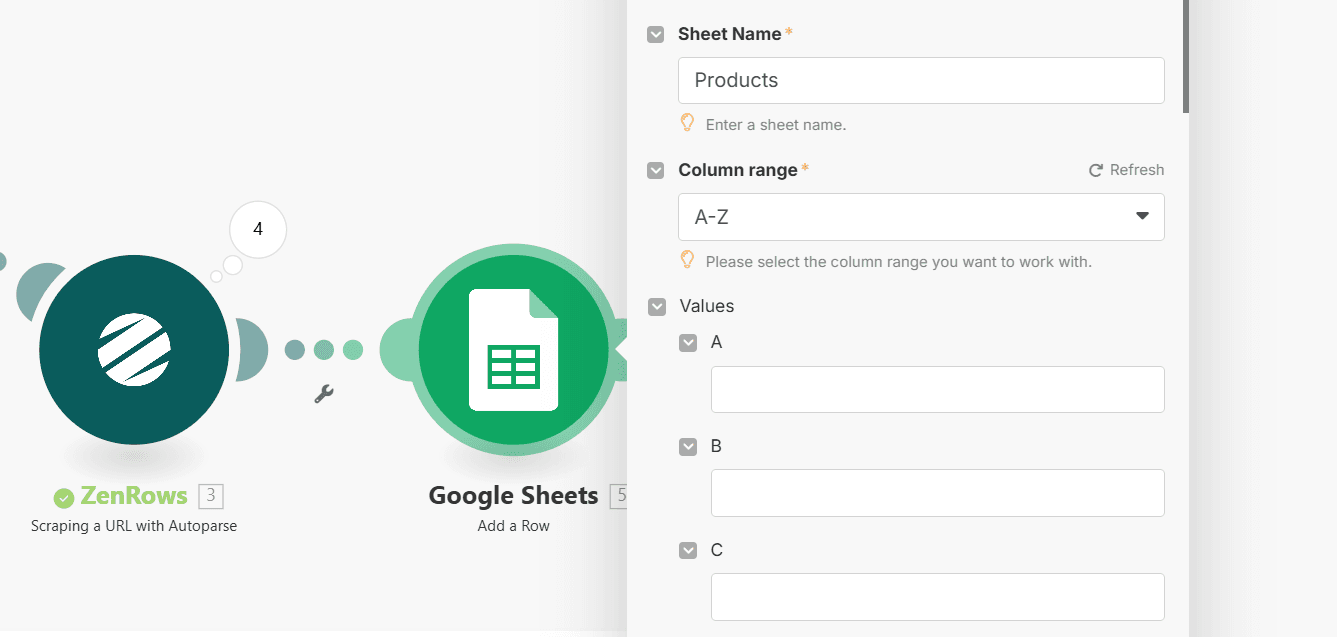

- Select Enter manually from the Search Method dropdown, then enter the Spreadsheet ID and Sheet Name.

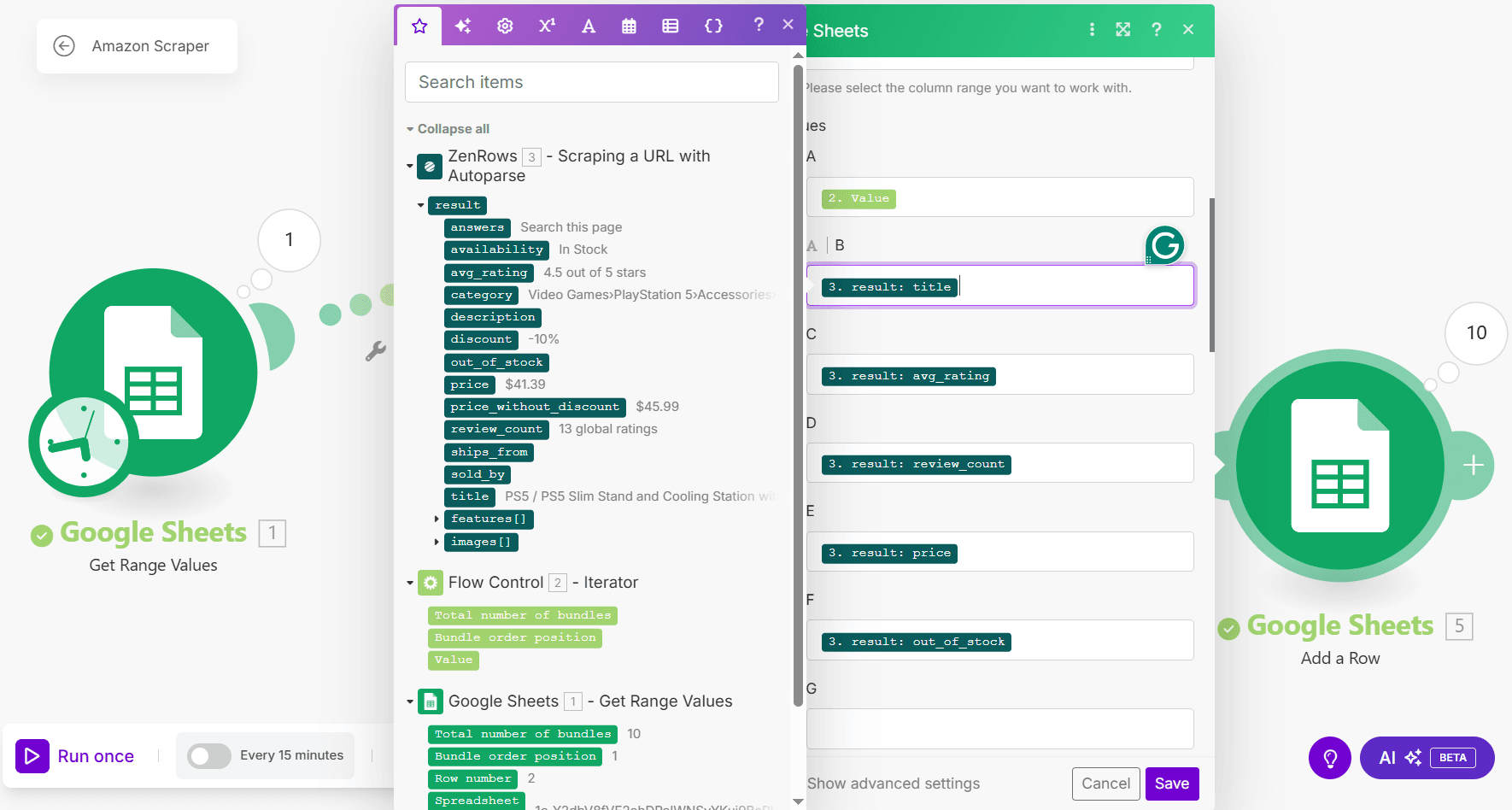

- Under Column Range, select A-Z. Then, map the columns using the data extracted from ZenRows.

- Place your cursor in each column field and map it with the extracted data field as follows:

  - A = Value (from the Iterator)

  - B = title

  - C = avg_rating

  - D = review_count

  - E = price

  - F = out_of_stock

- Click Save

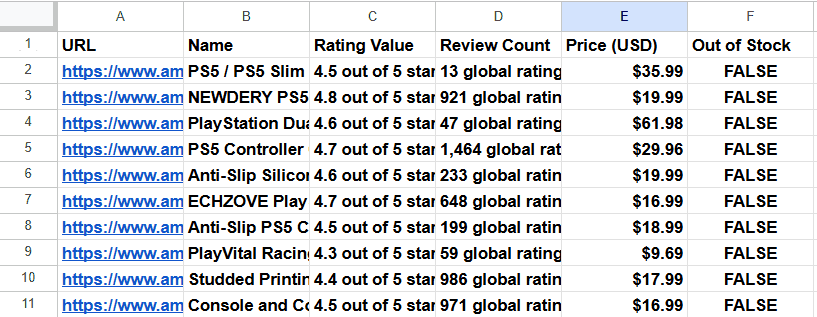
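The column mapping above amounts to turning one parsed result into one spreadsheet row. The sketch below shows that transformation in plain Python, using the field names from the mapping (`title`, `avg_rating`, `review_count`, `price`, `out_of_stock`). The sample values and the `to_sheet_row` helper are hypothetical, and a real Autoparse response may contain more or fewer fields.

```python
from typing import Any

# Fields mapped to columns B through F, in order.
COLUMN_FIELDS = ["title", "avg_rating", "review_count", "price", "out_of_stock"]


def to_sheet_row(source_url: str, parsed: dict[str, Any]) -> list[Any]:
    """Build one row: column A is the URL, B-F are the parsed fields."""
    # Missing fields become empty cells instead of raising an error.
    return [source_url] + [parsed.get(field, "") for field in COLUMN_FIELDS]


# Hypothetical parsed result for illustration only.
sample_result = {
    "title": "Example Keyboard",
    "avg_rating": 4.5,
    "review_count": 1200,
    "price": 49.99,
    "out_of_stock": False,
}
row = to_sheet_row("https://www.amazon.com/dp/EXAMPLE", sample_result)
print(row)
```

Defaulting missing fields to an empty string keeps the columns aligned when a page lacks, for example, a rating.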

Step 6: Run the Make Scenario and Validate the Extraction

Click Run once in the lower-left corner of the screen. The workflow runs iteratively for each URL in the connected Google Sheets and writes the scraped data into the Products sheet.

Congratulations! You’ve successfully integrated ZenRows with Make and automated your web scraping workflow.

ZenRows Configuration Options

ZenRows supports the following configuration options on Make:

| Configuration | Function |
|---|---|
| URL | The URL of the target website |
| Headers | Custom HTTP headers to send with the request |
| Premium Proxy | When activated, it routes requests through the ZenRows Residential Proxies instead of the default Datacenter proxies |
| Proxy Country | Sets the country of the proxy used for the request |
| JavaScript Rendering | Ensures that dynamic content loads before scraping |
| Wait for Selector | Waits for a given CSS selector to load before returning the content |
| Wait Milliseconds | Waits a fixed number of milliseconds before returning the content |
| Window Width | Sets the browser’s window width |
| Window Height | Sets the browser’s window height |
| JavaScript Instructions | Runs custom JavaScript Instructions, such as clicks or form fills, on the page before returning the content |
| Session ID | Uses a session ID to maintain the same IP for multiple API requests for up to 10 minutes |
| Original Status | Returns the original status code returned by the target website |
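If you later move a Scenario’s settings into a direct API call, the options above need to become query parameters. The sketch below shows one way to do that. Every parameter name in `OPTION_TO_PARAM` is an assumption about the Universal Scraper API, not something this page confirms, so treat the mapping as a starting point and verify each name in the ZenRows documentation. Headers are left out because they are typically sent as HTTP headers rather than query parameters.

```python
# Make option label -> assumed API query parameter name (verify before use).
OPTION_TO_PARAM = {
    "URL": "url",
    "Premium Proxy": "premium_proxy",
    "Proxy Country": "proxy_country",
    "JavaScript Rendering": "js_render",
    "Wait for Selector": "wait_for",
    "Wait Milliseconds": "wait",
    "Window Width": "window_width",
    "Window Height": "window_height",
    "JavaScript Instructions": "js_instructions",
    "Session ID": "session_id",
    "Original Status": "original_status",
}


def to_query_params(options: dict[str, object]) -> dict[str, str]:
    """Translate Make-style option labels into query parameters."""
    params: dict[str, str] = {}
    for label, value in options.items():
        # Skip options that are unset or switched off.
        if value is None or value is False or value == "":
            continue
        name = OPTION_TO_PARAM[label]  # KeyError flags an unknown option
        params[name] = "true" if value is True else str(value)
    return params


params = to_query_params({
    "Premium Proxy": True,
    "JavaScript Rendering": True,
    "Wait Milliseconds": 2000,
    "Proxy Country": "",  # left empty in the module, so it is omitted
})
print(params)
```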

Troubleshooting

Issue: The workflow returns incomplete data

Solution: Ensure that JavaScript Rendering and Premium Proxy are enabled in the ZenRows configuration.

Issue: [400] Error with internal code ‘REQS004’

Solution 1: Double-check the target URL and ensure it’s not malformed or missing essential query strings.

Solution 2: If using the CSS selector integration, ensure the extractor parameter is a valid JSON array of objects (e.g., [{"title":"#productTitle", "price": ".a-price-whole"}]).
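A quick local check can catch a malformed extractor before it reaches the module. The sketch below validates the shape described in Solution 2: a JSON array of objects whose values are non-empty selector strings. The `validate_extractor` helper is hypothetical, and it checks JSON structure only; it does not verify that the selectors match anything on the target page.

```python
import json


def validate_extractor(raw: str) -> list[dict[str, str]]:
    """Parse the extractor string and check it is a JSON array of objects."""
    try:
        parsed = json.loads(raw)
    except json.JSONDecodeError as exc:
        raise ValueError(f"Extractor is not valid JSON: {exc}") from exc

    if not isinstance(parsed, list) or not parsed:
        raise ValueError("Extractor must be a non-empty JSON array")

    for item in parsed:
        if not isinstance(item, dict):
            raise ValueError("Each array element must be a JSON object")
        for name, selector in item.items():
            if not isinstance(selector, str) or not selector.strip():
                raise ValueError(f"Selector for '{name}' must be a non-empty string")
    return parsed


# The example from Solution 2 passes the check.
extractor = validate_extractor('[{"title":"#productTitle", "price": ".a-price-whole"}]')
print(extractor)
```

Common mistakes this catches include single quotes instead of double quotes, a trailing comma, or passing a bare object instead of an array.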

Issue: Failed request or 401 unauthorized response

Solution 1: Ensure you supply your ZenRows API key.

Solution 2: Double-check that the ZenRows API key you provided is correct.

Issue: The Google Sheets module cannot find the spreadsheet

Solution: Double-check the Spreadsheet ID and ensure the Google Sheets API is enabled for your account.

Integration Benefits & Applications

Integrating Make with ZenRows has the following benefits:

- It enables businesses to automate data collection and build a complete data pipeline without writing code.

- You also overcome scraping challenges, such as anti-bot measures, dynamic rendering, geo-restrictions, and more.

- By automating the scraping process, you can focus on data processing and analysis rather than on building complicated scraping logic.

- Scaling up with Make integration is also easy, as you can add URLs continuously to your queue.

- You can also schedule the scraping job to refresh your data pipeline at specific intervals.

Frequently Asked Questions (FAQ)

Can I use My Existing ZenRows API Key with Make?

Yes. You don’t need a separate ZenRows API key for Make. You only need to provide your existing API key when connecting Make with ZenRows.

Can I Scrape with CSS Selectors on Make?

Make supports ZenRows CSS selector integration. It allows you to manually pass the target website’s CSS selectors as an array.

Can I use the autoparse integration for all websites?

The autoparse integration isn’t available for all websites. If you use the Scraping a URL with Autoparse integration on an unsupported website, the scraper returns empty or incomplete data, or an error. Visit the ZenRows web scraper page to view the supported websites.