What Is n8n?

n8n is a workflow automation platform that lets you connect different services and build custom workflows through a visual interface. Unlike many other automation tools, n8n can be self-hosted, giving you complete control over your data. Its node-based workflow editor makes it easy to connect apps, services, and APIs without writing code. You can process, transform, and route data between services, and trigger actions based on schedules or events.

Use Cases

Integrating ZenRows with n8n opens up numerous automation possibilities:

- E-commerce Monitoring: Scrape product prices, availability, and ratings from competitor websites and store them in Google Sheets or Airtable for analysis.

- Lead Generation: Extract contact information from business directories and automatically add it to your CRM system, such as HubSpot or Salesforce.

- Job Market Analysis: Monitor job listings across multiple platforms and receive daily notifications of new opportunities via Slack or email.

- Content Aggregation: Scrape blog posts, news articles, or research papers and compile summaries in Notion or other knowledge bases.

- Real Estate Data Collection: Gather property listings, prices, and features from real estate websites for market analysis.

Watch the Video Tutorial

Learn how to set up the n8n ↔ ZenRows integration step-by-step by watching this video tutorial:

How to Set Up ZenRows with n8n

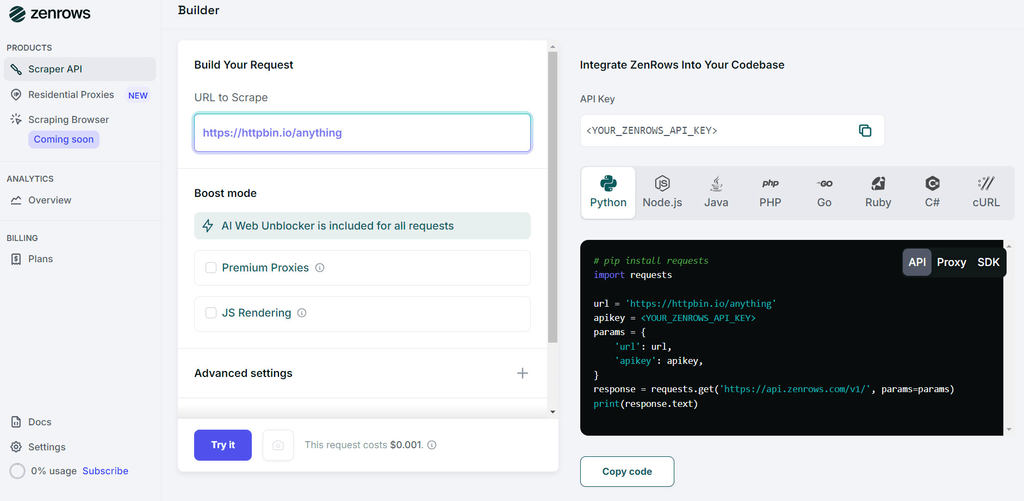

Follow these steps to integrate ZenRows with n8n:

Step 1: Set up ZenRows

- Sign up for a ZenRows account if you don’t already have one.

- Navigate to ZenRows’ Universal Scraper API Playground.

- Configure the settings:

  - Enter the URL to Scrape.

  - Activate JS Rendering and/or Premium Proxies (as per your requirements).

  - Customize other settings as needed for your specific scraping task.

  Check out the Universal Scraper API documentation for all available features.

- Click on the cURL tab on the right and copy the generated code.

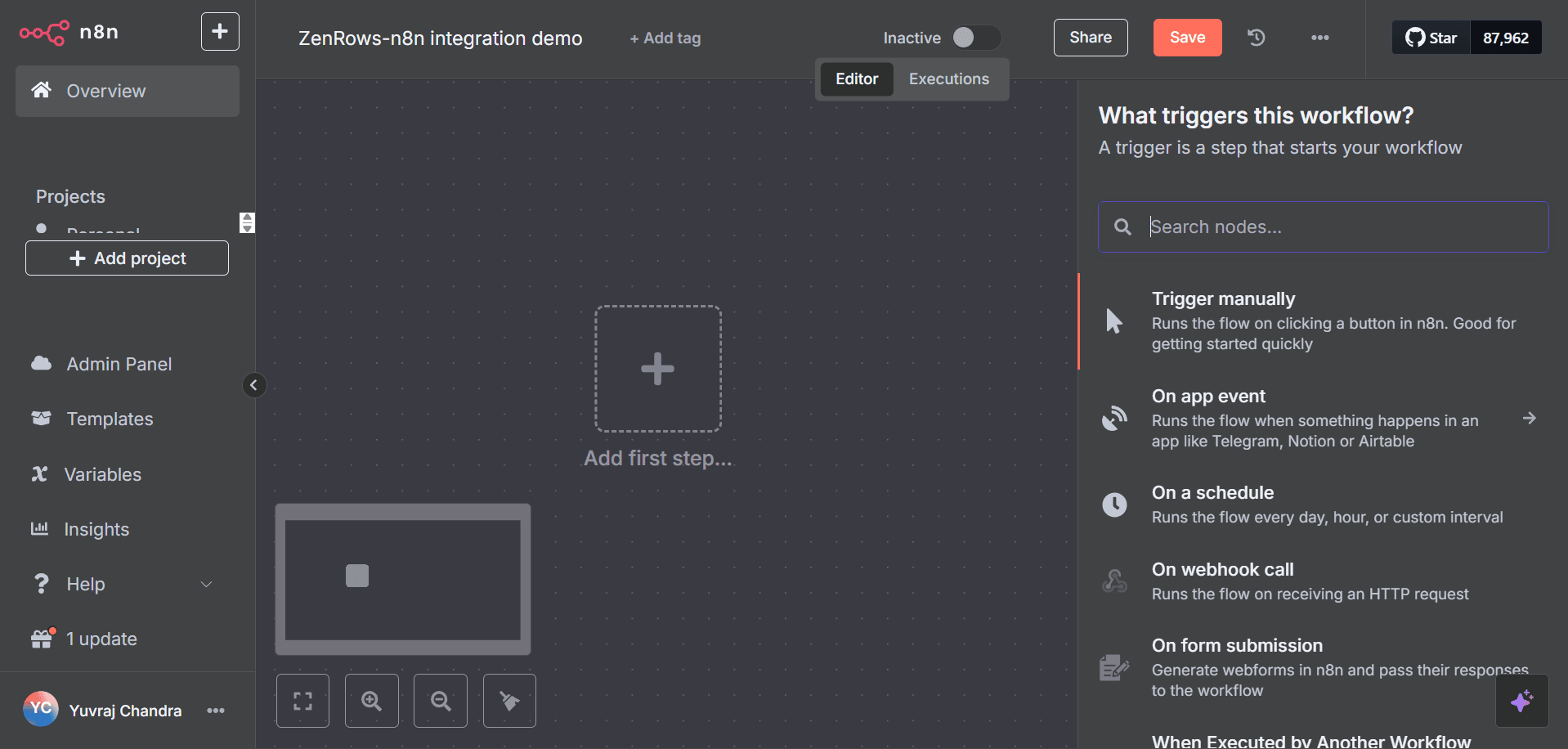
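The cURL code copied from the Playground amounts to a GET request against the Universal Scraper API, with the target URL and the selected options passed as query parameters. The following is a minimal Python sketch of that request URL. It assumes the `https://api.zenrows.com/v1/` endpoint and the `apikey`, `url`, `js_render`, and `premium_proxy` parameter names; treat the cURL code generated by the Playground as authoritative. `YOUR_ZENROWS_API_KEY` is a placeholder, and `build_zenrows_url` is a hypothetical helper.

```python
from urllib.parse import urlencode

# Assumed endpoint; confirm it against the cURL code generated by the Playground.
ZENROWS_ENDPOINT = "https://api.zenrows.com/v1/"


def build_zenrows_url(api_key, target_url, js_render=False, premium_proxy=False):
    """Return the GET URL for a scrape request (parameter names are assumptions)."""
    params = {"apikey": api_key, "url": target_url}
    if js_render:
        params["js_render"] = "true"
    if premium_proxy:
        params["premium_proxy"] = "true"
    # urlencode percent-encodes the target URL so it travels as one query value.
    return f"{ZENROWS_ENDPOINT}?{urlencode(params)}"


request_url = build_zenrows_url(
    "YOUR_ZENROWS_API_KEY",  # placeholder
    "https://www.scrapingcourse.com/ecommerce/",
    js_render=True,
)
print(request_url)
```

Percent-encoding the target URL matters because it usually carries its own `/`, `?`, and `&` characters, which would otherwise be read as part of the API request.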

Step 2: Set up your n8n workflow

- Sign up or log in to open your n8n instance and create a new workflow.

- Add a Manual Trigger node as the first node. The Manual Trigger node allows you to manually start the workflow for testing or debugging purposes.

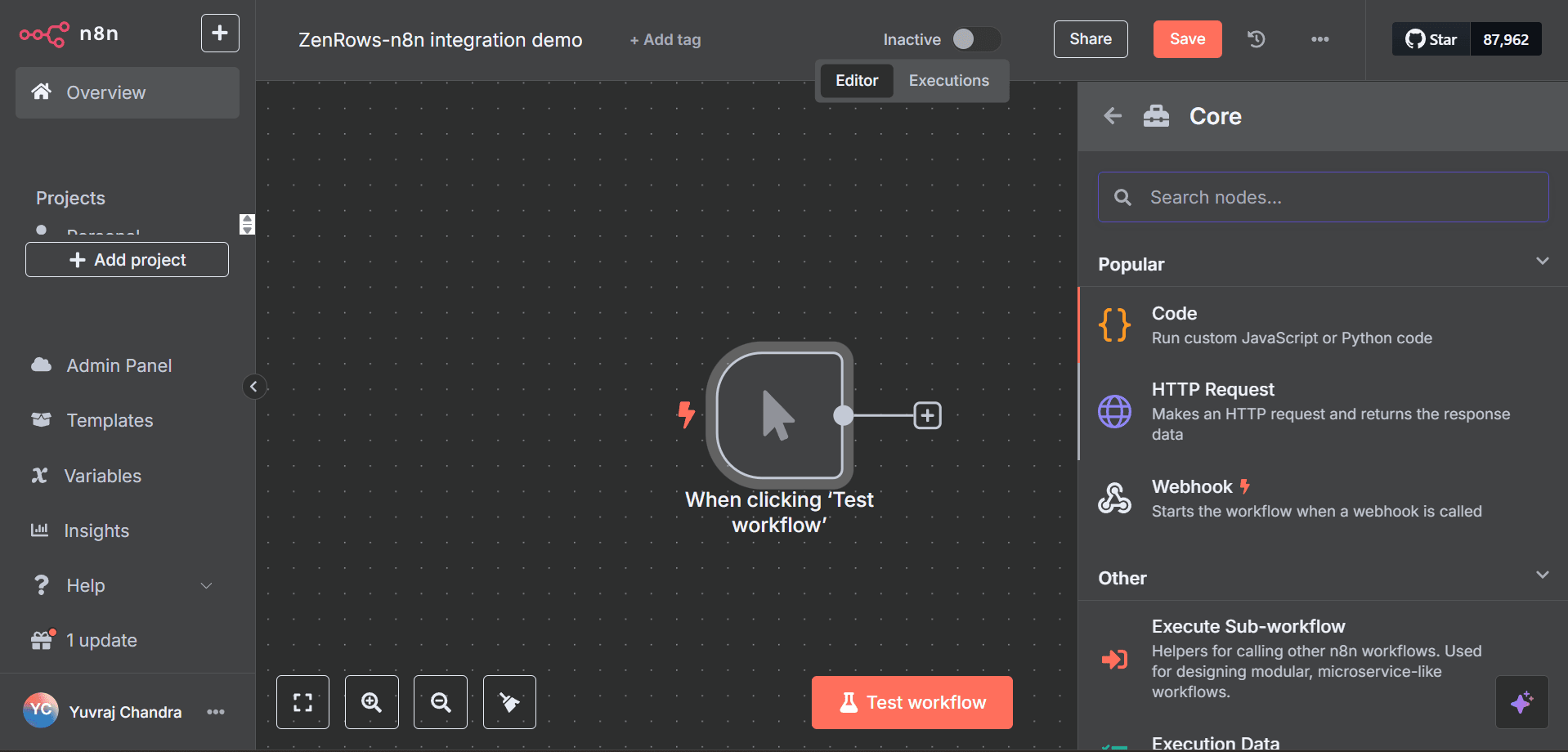

- Next, add an HTTP Request node:

  - Click on the + button next to the Manual Trigger node.

  - Select the Core tab on the right.

  - Then, click the HTTP Request tab to open the configuration window.

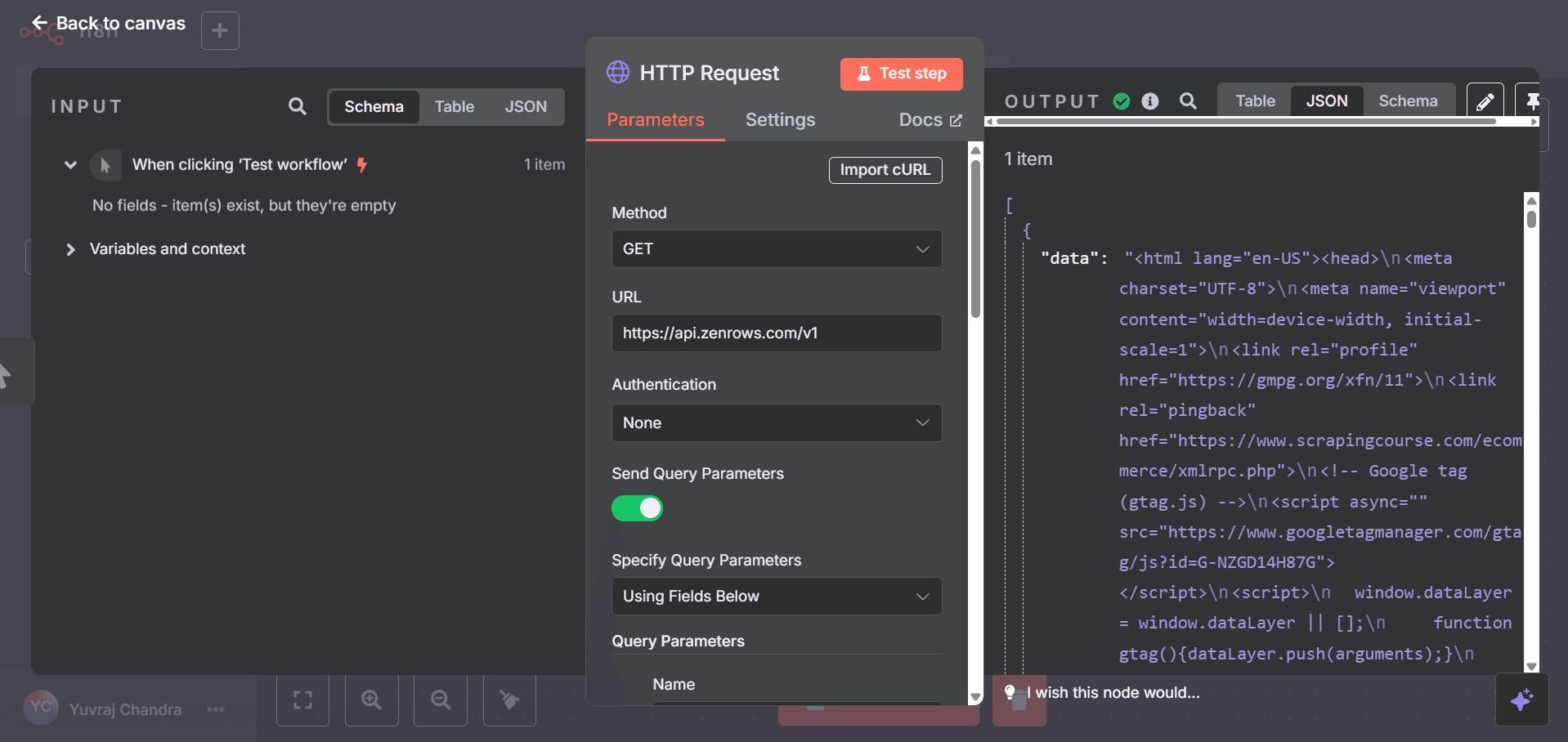

- Click the Import cURL button and paste the ZenRows cURL code you copied earlier. The HTTP Request node will automatically configure all the necessary parameters, including headers, authentication, and query parameters.

- Finally, click Test step to confirm your setup is configured correctly. You should get the full HTML of the target page as the output.

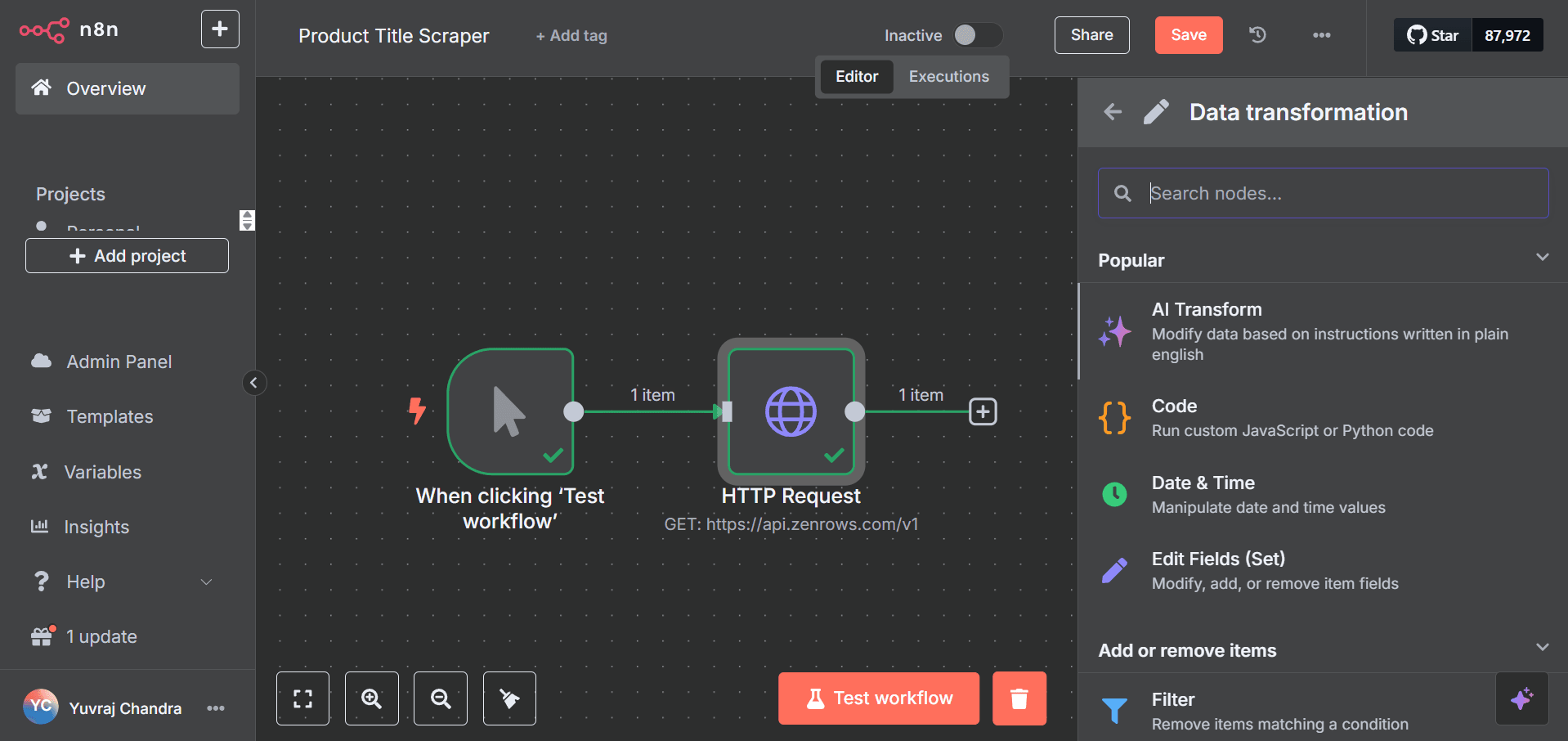

Example Workflow: Scraping Product Titles from a Website

Let’s build a complete workflow that scrapes product titles from an e-commerce website and stores them in a Google Sheet.

Step 1: Create a new workflow

- In your n8n dashboard, click Create workflow and give it a name (e.g., “Product Title Scraper”).

- Add a Manual Trigger node as your starting point.

Step 2: Configure ZenRows

- Open ZenRows’ Universal Scraper API Request Playground.

- Enter `https://www.scrapingcourse.com/ecommerce/` as the URL to Scrape.

- Activate JS Rendering.

- Select Specific Data as the Output type and configure the CSS selector under the Parsers tab: `{ "product-names": ".product-name" }`.

- Copy the generated cURL code.

- In n8n, add an HTTP Request node after your trigger:

  - Click on Import cURL and paste the generated cURL code.

  - This configures a GET request to ZenRows’ Universal Scraper API, sending the target URL and parser configuration.

  - The request will extract all elements matching the CSS selector `.product-name` (which contain the product titles).

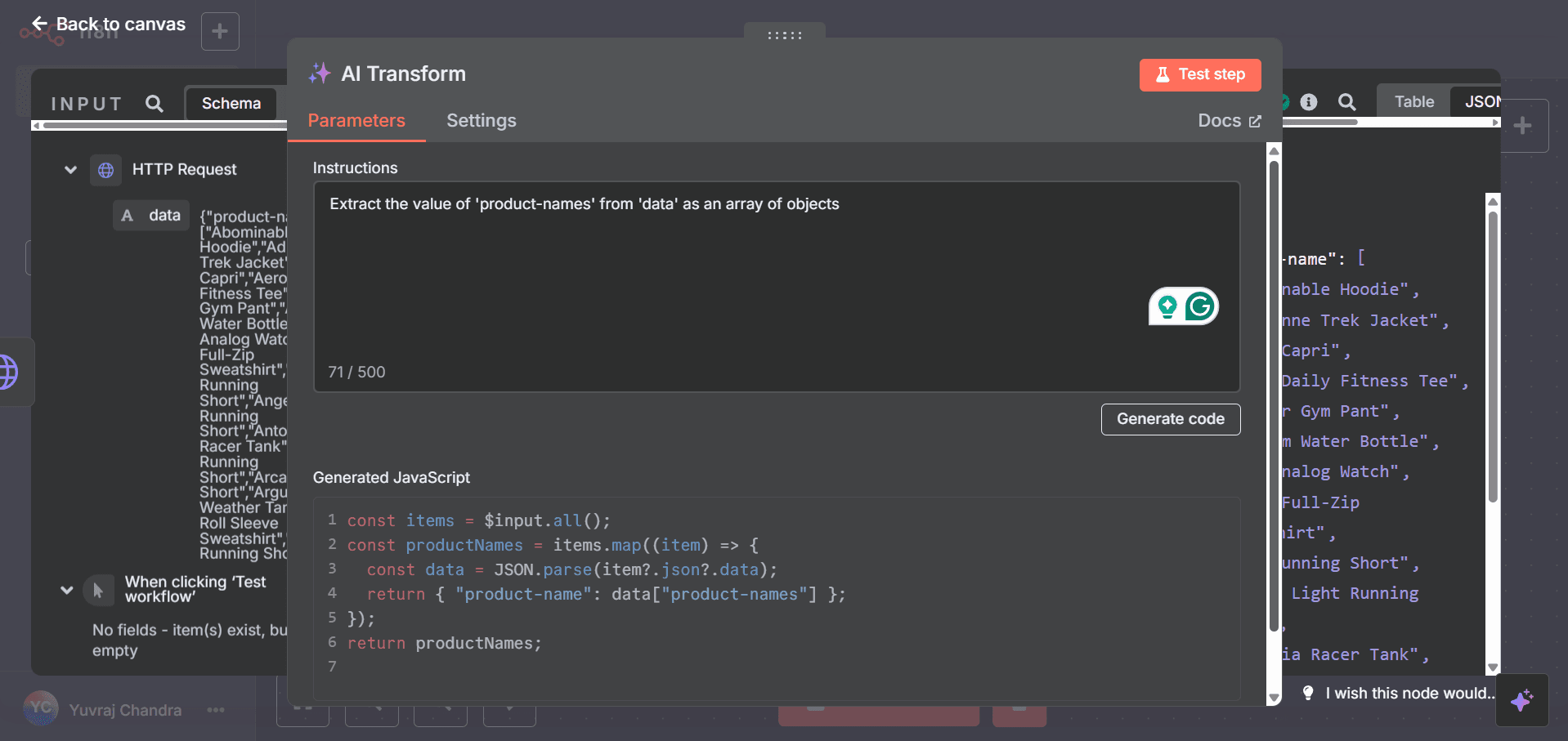
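The parser configuration travels with the request as a JSON string inside a query parameter. The sketch below shows that encoding step in Python and checks that it round-trips. It assumes the parameter is named `css_extractor` and that `js_render` enables JS Rendering; the generated cURL code remains the reference, and the API key is a placeholder.

```python
import json
from urllib.parse import parse_qs, urlencode

# Parser configuration from the step above: output key -> CSS selector.
css_extractor = {"product-names": ".product-name"}

params = {
    "apikey": "YOUR_ZENROWS_API_KEY",  # placeholder
    "url": "https://www.scrapingcourse.com/ecommerce/",
    "js_render": "true",  # assumed parameter name
    # Assumed parameter name; the selector map is sent as a JSON string.
    "css_extractor": json.dumps(css_extractor),
}
query_string = urlencode(params)

# Decoding the query string recovers the original selector map unchanged.
decoded = parse_qs(query_string)
print(json.loads(decoded["css_extractor"][0]))
```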

Step 3: Transform the response with AI Transform

After getting the ZenRows response, we need to extract the content values:

- Click the + button next to the HTTP Request node and select the Data transformation tab on the right.

- Then, select the AI Transform node. The AI Transform node allows you to process and manipulate data using AI-generated JavaScript code.

- In the instructions field, enter: “Extract the value of ‘product-names’ from ‘data’ as an array of objects” and click the Generate code button.

The AI will generate JavaScript code that gets all items from the input, maps through each item, extracts the value from the JSON data, and returns an array of product names.

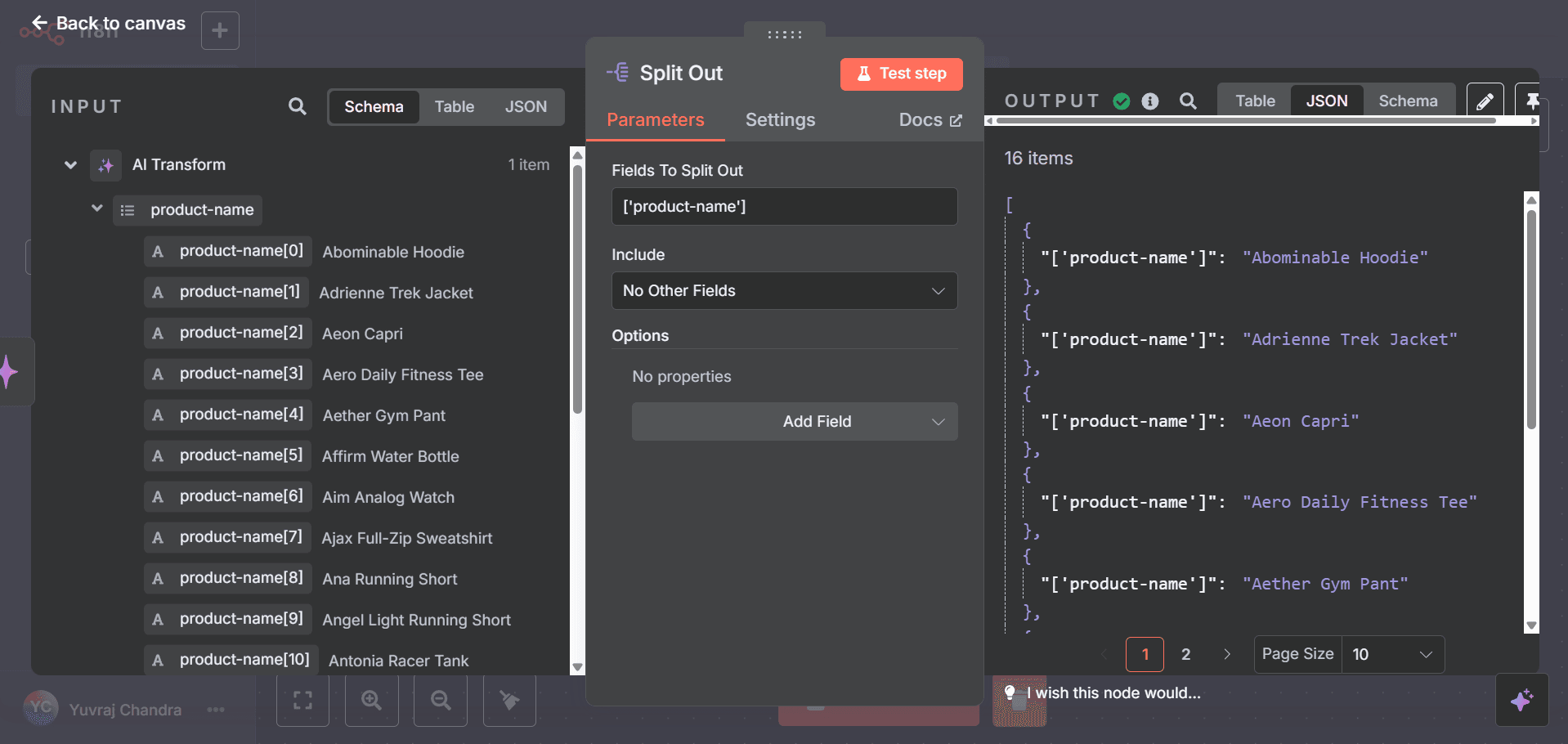
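The code that AI Transform generates will vary from run to run, so it helps to know what the transformation is supposed to do. The sketch below expresses the same logic in Python rather than JavaScript. It assumes each input item follows n8n's `{"json": {...}}` item shape and holds the response under a `data` field, either as a JSON string or as parsed JSON. `extract_field` is a hypothetical helper and the sample titles are made up.

```python
import json


def extract_field(items, key="product-names"):
    """Collect the values stored under `key` in each item's `data` field."""
    values = []
    for item in items:
        data = item["json"]["data"]
        # The response body may arrive as a JSON string or as parsed JSON.
        if isinstance(data, str):
            data = json.loads(data)
        found = data.get(key, [])
        # Guard against a bare string so it is not split into characters.
        values.extend([found] if isinstance(found, str) else found)
    return values


# Hypothetical sample input with made-up product titles.
sample_items = [{"json": {"data": '{"product-names": ["Product A", "Product B"]}'}}]
print(extract_field(sample_items))  # ['Product A', 'Product B']
```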

Step 4: Split the results

Now we need to split the array of product names into individual items:

- Add a Split Out node after the AI Transform, as it takes an array of data and creates a separate output item for each entry.

- Configure it to split out the `product-name` field. This node will take the array of items and create a separate output item for each content value.

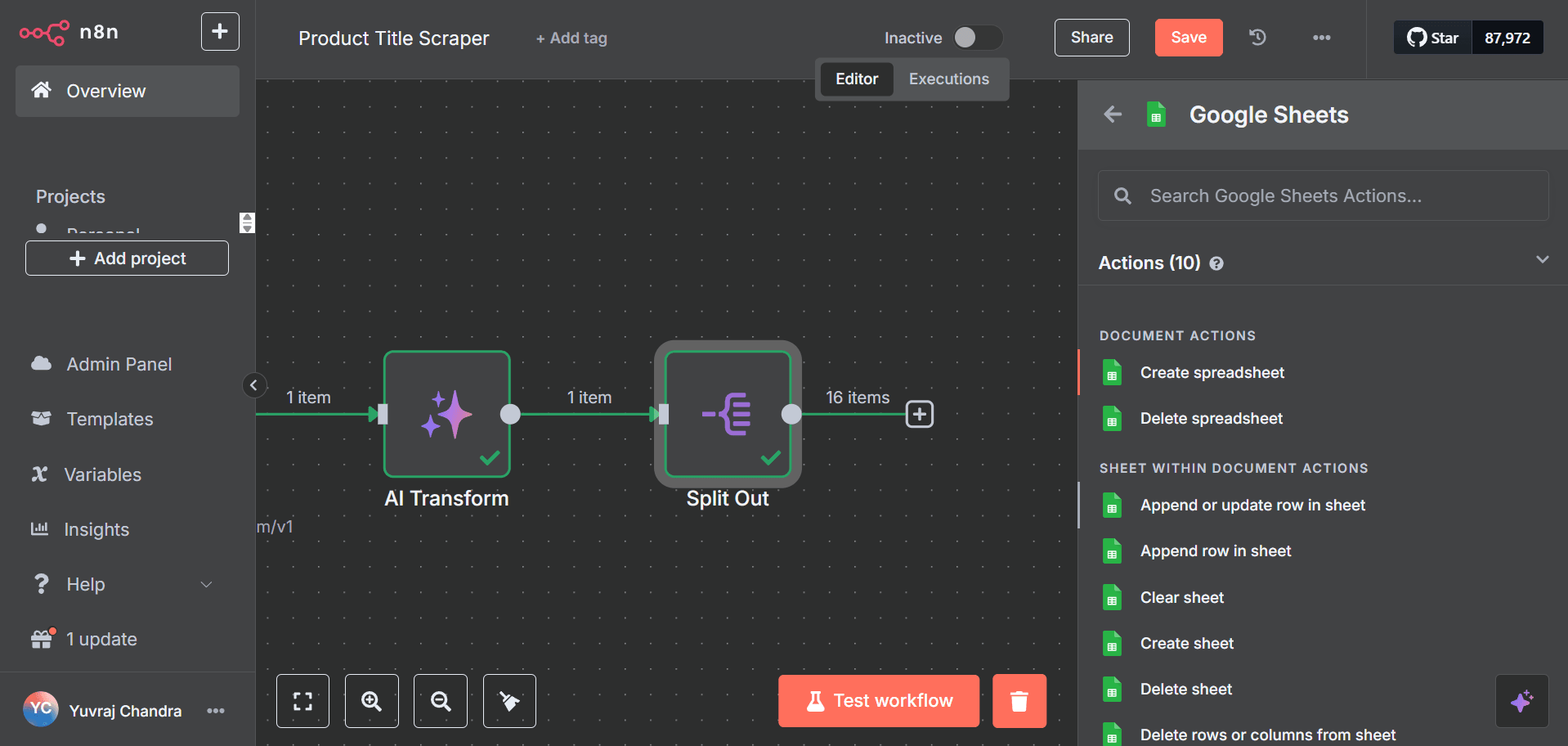

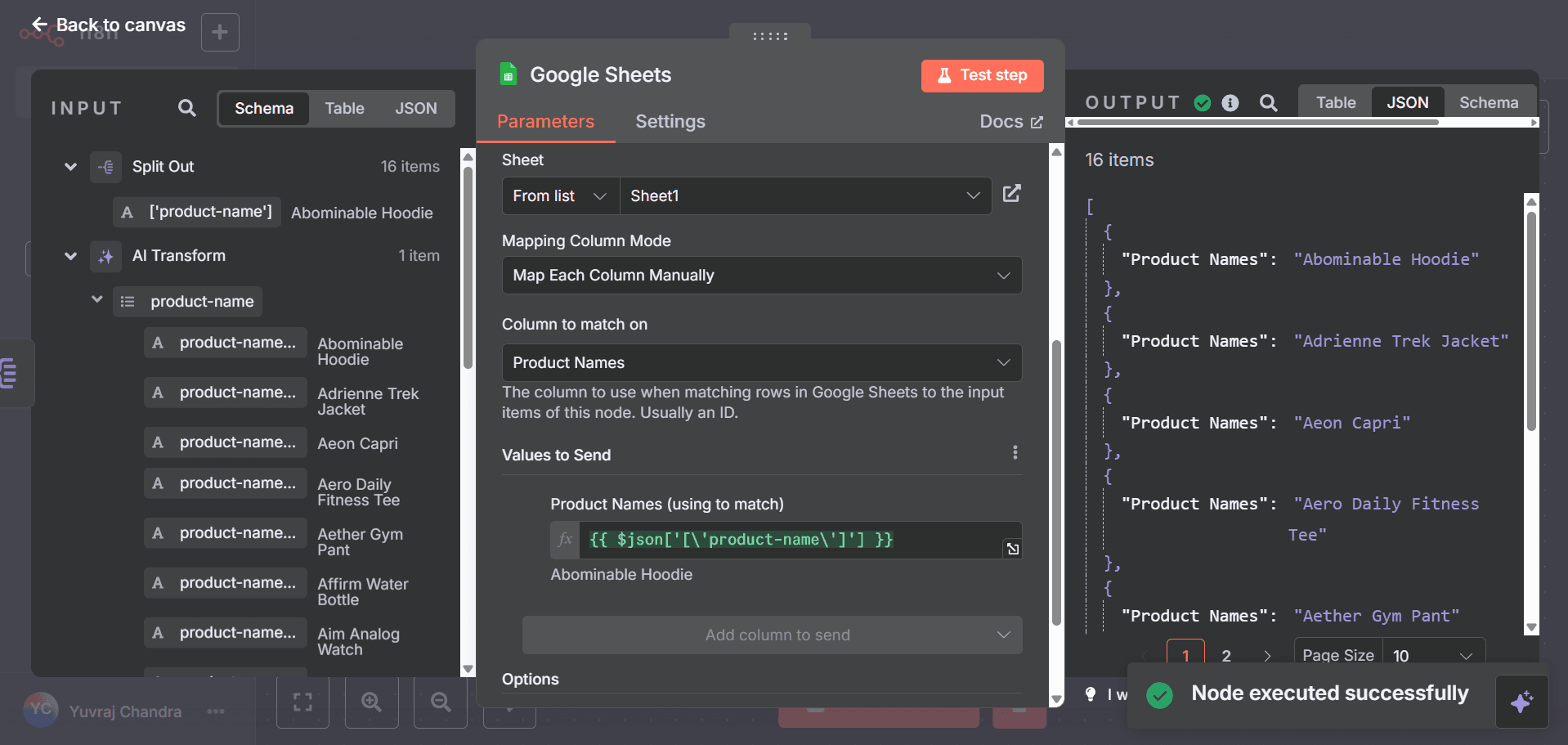
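As a rough mental model of this step, the following Python sketch turns one item holding an array into one item per array element. It assumes n8n's `{"json": {...}}` item shape and uses the `product-name` field name from the step above. `split_out` is a hypothetical function written for illustration, not n8n's implementation, and the titles are made up.

```python
def split_out(items, field):
    """Emit one output item per element of the list stored in `field`."""
    output = []
    for item in items:
        for value in item["json"].get(field, []):
            output.append({"json": {field: value}})
    return output


# Hypothetical input: a single item holding the whole array of titles.
combined = [{"json": {"product-name": ["Product A", "Product B"]}}]
for row in split_out(combined, "product-name"):
    print(row)
```

Having one item per title is what lets the next node write one spreadsheet row per product.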

Step 5: Send data to Google Sheets

Finally, we’ll send our scraped product titles to a Google Sheet:

- Add a Google Sheets node after the Split Out node.

- Under Sheet Within Document Actions, select the Append or Update Row in sheet action.

- Next, select your Google Sheets account or create a new connection.

- Specify the Google Sheets document URL and select the sheet where you want to store the data.

- Select the Map Each Column Manually mode and choose the column to match.

- Drag the `['product-name']` variable under Split Out on the left to the Values to Send field.

This configuration will append each product title as a new row in your Google Sheet.

Step 6: Run the workflow

Click Test workflow to run your automation. If everything is configured correctly, you should see product titles from the website appearing in your Google Sheet.

Tips and Best Practices

When working with ZenRows and n8n together, keep these best practices in mind:

- Enable JS Rendering: For modern websites with dynamic content, always enable the JS Rendering option in ZenRows.

- Set Appropriate Wait Selectors: Use the `wait_for` parameter when scraping sites that load content asynchronously.

- Implement Retry Logic: Add Error Handling with retry logic for failed requests using n8n’s Error Trigger nodes.

- Paginate Properly: For multi-page scraping, create loops with the Loop Over Items node and increment page numbers.

- Monitor Your Workflows: Set up notification triggers to receive alerts for failures or successful runs.
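The retry tip above is easier to reason about with the logic written out. Below is a language-level sketch of retrying with exponential backoff in Python; how you wire the equivalent behavior into n8n depends on your workflow. `fetch_with_retry` and `flaky_fetch` are hypothetical names, and the delays are illustrative defaults rather than recommended values.

```python
import time


def fetch_with_retry(fetch, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call `fetch()` until it succeeds, doubling the wait after each failure."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    last_error = None
    for attempt in range(attempts):
        try:
            return fetch()
        except Exception as error:  # narrow this to the errors your client raises
            last_error = error
            if attempt < attempts - 1:
                sleep(base_delay * 2 ** attempt)  # 1s, 2s, 4s, ...
    raise last_error


# Hypothetical request that fails twice before succeeding.
calls = {"count": 0}


def flaky_fetch():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return "<html>...</html>"


delays = []  # record the waits instead of actually sleeping
result = fetch_with_retry(flaky_fetch, sleep=delays.append)
print(result, delays)
```

Passing `sleep` as a parameter keeps the example fast to run and makes the backoff schedule easy to inspect.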

Troubleshooting

Common issues and solutions:

- API Key Not Working

  - Verify your ZenRows API key is correctly entered in the HTTP Request node.

  - Check that your subscription is active in your ZenRows dashboard.

- Empty Results

  - Confirm your CSS selectors are correct by testing them in your browser’s developer tools.

  - Try enabling JavaScript rendering if the content is loaded dynamically.

  - Increase wait time if the content takes longer to load.

- Workflow Execution Errors

  - Check the JSON response format in the HTTP Request node output.

  - Ensure all nodes are correctly configured with the correct field mappings.

  - View execution logs for detailed error messages.
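The checklist above can be read as a small decision procedure over the HTTP response. The Python sketch below is one way to express it. It assumes authentication problems surface as the standard HTTP 401 or 403 status codes, which may not match the exact codes ZenRows returns, and that a successful parsed response is a JSON object keyed by the parser names. `diagnose` is a hypothetical helper.

```python
def diagnose(status_code, parsed_body, key="product-names"):
    """Map a response to the most likely item on the troubleshooting checklist."""
    if status_code in (401, 403):  # assumed to indicate authentication problems
        return "Check the API key and that the subscription is active."
    if status_code >= 400:
        return "Request failed; view the execution logs for the error message."
    if not isinstance(parsed_body, dict):
        return "Unexpected response format; inspect the HTTP Request node output."
    if not parsed_body.get(key):
        return "Empty result; verify the CSS selector, JS rendering, and wait time."
    return "OK"


print(diagnose(200, {"product-names": []}))
```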