What Is Pipedream?

Pipedream is a serverless platform that connects APIs and automates workflows. With its event-driven architecture, you can build workflows that respond to triggers like webhooks or schedules. When combined with ZenRows, Pipedream enables you to automate web scraping and integrate structured data into your processes.

Use Cases

Combining ZenRows with Pipedream creates powerful automation opportunities:

- Market Intelligence: Extract competitor pricing and analyze trends automatically.

- Sales Prospecting: Scrape business directories and enrich contact data for your CRM.

- Content Monitoring: Track brand mentions and trigger notifications for specific keywords.

- Research Automation: Collect and organize articles, reports, or blog posts.

- Property Intelligence: Gather real estate listings and market data for analysis.

Real-World End-to-End Integration Example

We’ll build a basic end-to-end e-commerce product scraping workflow using Pipedream and ZenRows with the following steps:

- Set up a scheduled trigger to automatically track product prices at regular intervals.

- Use https://www.scrapingcourse.com/pagination as the target URL.

- Utilize ZenRows’ Universal Scraper API to automatically extract product names and prices from the URL.

- Process and transform the scraped data.

- Save the extracted product information to Google Sheets.

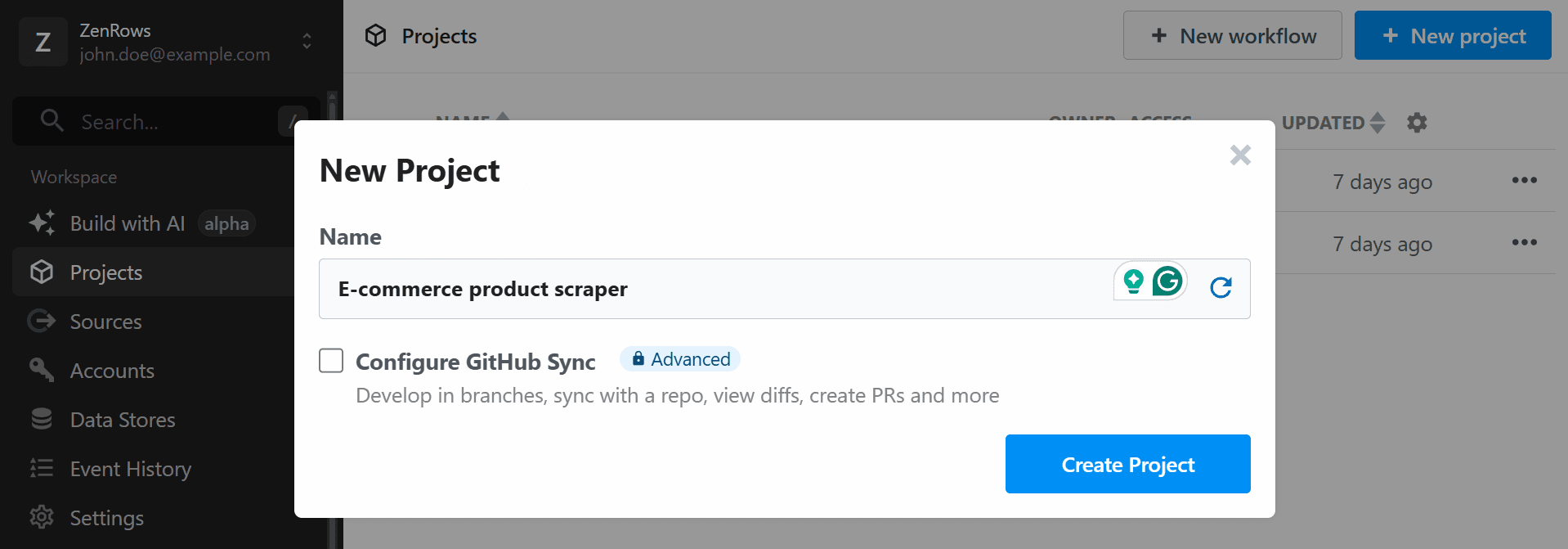

1. Create a new workflow on Pipedream

- Log in to your account at https://pipedream.com/.

- Access your Workspace and go to Projects in the left sidebar.

- Click + New project at the top right and enter a name for your project (e.g., “E-commerce Product Scraper”).

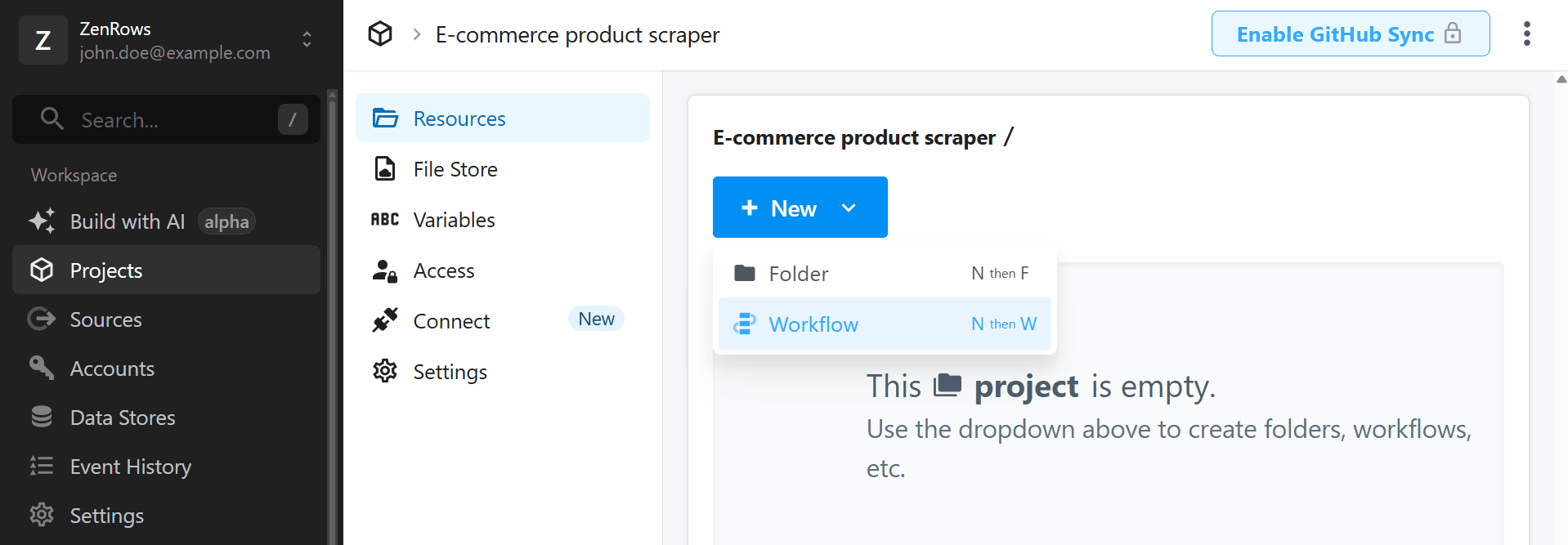

- Click + New Workflow and provide a name for your workflow (e.g., “Product name and price scraper”).

- Click the Create Workflow button.

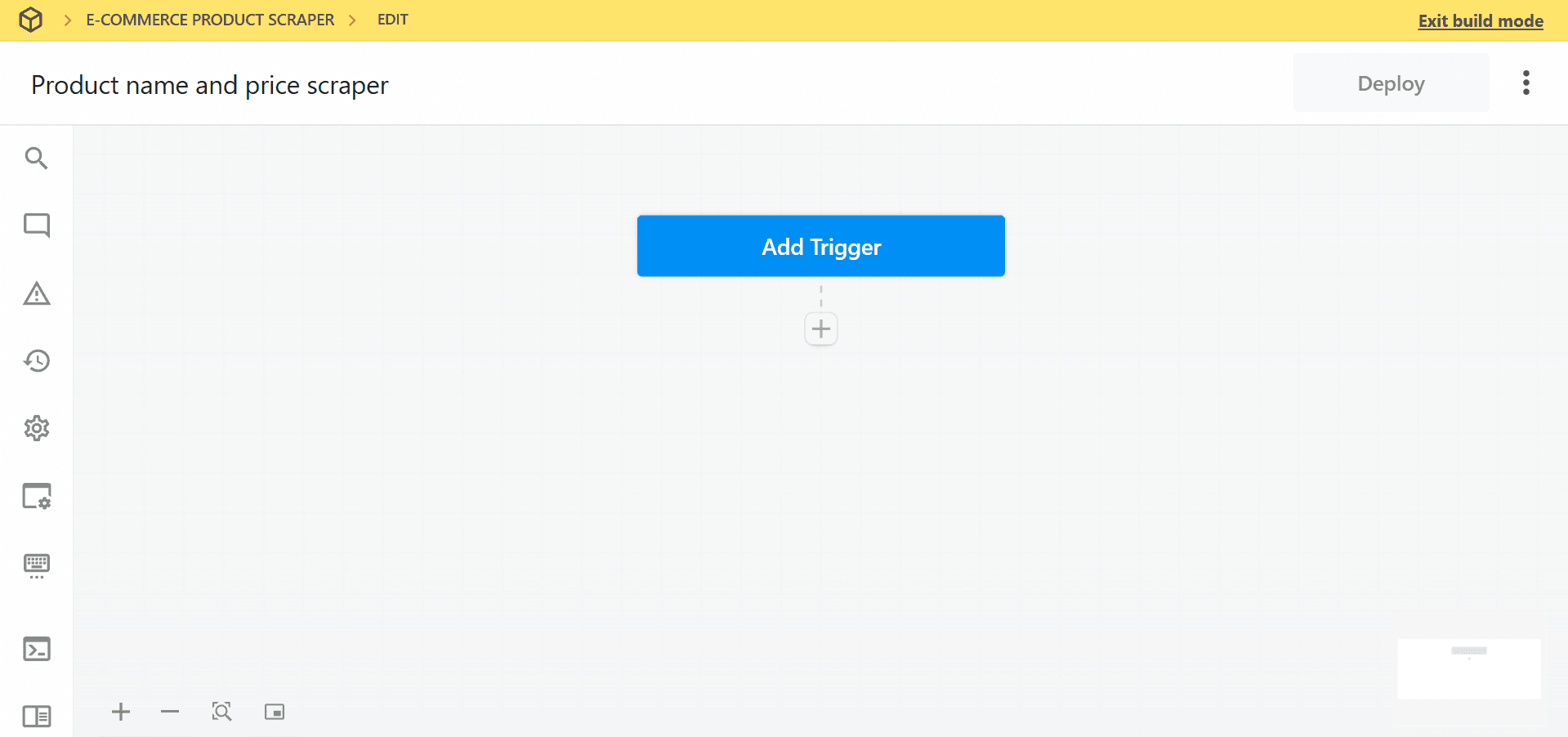

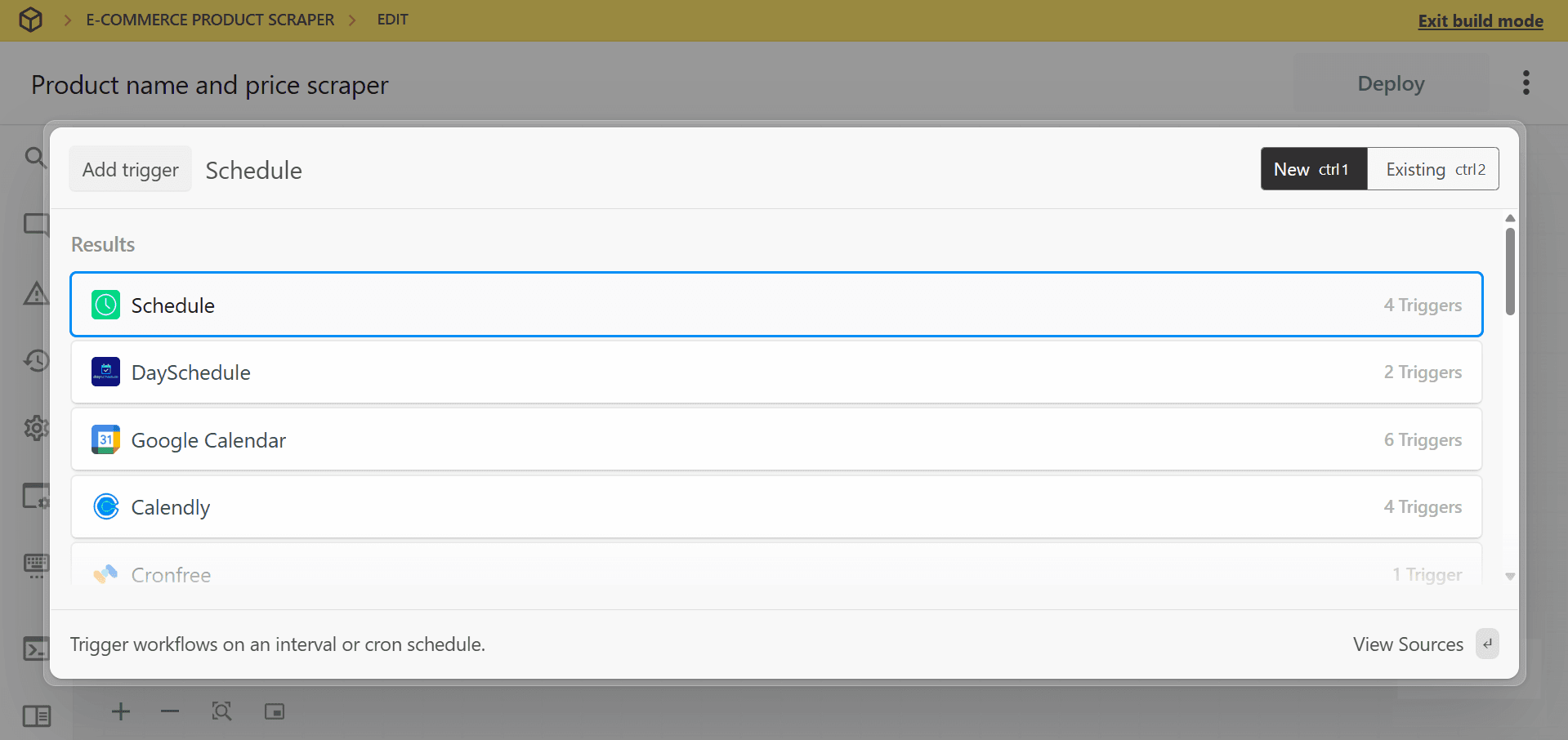

2. Set up a Schedule trigger

- Click Add Trigger in your new workflow.

- Search for and select Schedule.

- Choose Custom Interval from the trigger options.

- Set your desired frequency (e.g., “Daily at 1:00 AM UTC”).

- Click Save and continue.
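The example frequency, “Daily at 1:00 AM UTC”, is expressed in UTC, which may not match your local time. As an illustration only, the sketch below computes when such a schedule would next fire after a given moment; nextDailyRunUtc is a hypothetical helper, not something Pipedream provides. In standard five-field cron notation, the same schedule is 0 1 * * *.

```javascript
// Hypothetical helper (not part of Pipedream): returns the next moment,
// strictly after `from`, at which a "daily at HH:MM UTC" schedule would fire.
function nextDailyRunUtc(from, hourUtc = 1, minuteUtc = 0) {
  // Today's slot, built from the UTC calendar date of `from`.
  const next = new Date(
    Date.UTC(from.getUTCFullYear(), from.getUTCMonth(), from.getUTCDate(), hourUtc, minuteUtc)
  );
  // If today's slot has already been reached, move to the same time tomorrow.
  // setUTCDate handles month and year rollover automatically.
  if (next <= from) {
    next.setUTCDate(next.getUTCDate() + 1);
  }
  return next;
}

// Example: when would "Daily at 1:00 AM UTC" fire next, counting from now?
console.log(nextDailyRunUtc(new Date()).toISOString());
```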

3. Configure ZenRows integration with Pipedream

To extract specific elements from a dynamic page using ZenRows with Pipedream, follow these steps.

Generate the cURL request from ZenRows

- Open ZenRows’ Universal Scraper API Request Builder.

- Enter https://www.scrapingcourse.com/pagination as the URL to scrape.

- Activate JS Rendering to handle dynamic content.

- Set Output type to Specific Data and configure the CSS selectors under the Parsers tab.

The CSS selectors provided in this example (.product-name, .product-price) are specific to the page used in this guide, and selectors vary across websites. For guidance on customizing selectors, refer to the CSS Extractor documentation. If you’re having trouble, the Advanced CSS Selectors Troubleshooting Guide can help resolve common issues.

- Click the cURL tab on the right and copy the generated code.
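The generated cURL command sends a GET request to ZenRows’ Universal Scraper API, with the target URL and your options passed as query parameters. The sketch below assembles a comparable request URL in JavaScript, assuming the endpoint https://api.zenrows.com/v1/ and the apikey, url, js_render, and css_extractor parameters. The extractor keys name and price are illustrative labels you choose yourself, buildZenRowsUrl is a hypothetical helper, and YOUR_ZENROWS_API_KEY is a placeholder. The cURL code from the Request Builder remains the authoritative version.

```javascript
// Hypothetical helper that assembles a request similar to the Request Builder's
// cURL snippet. Assumed endpoint and parameter names follow ZenRows'
// Universal Scraper API; the extractor keys ("name", "price") are illustrative.
const ZENROWS_ENDPOINT = "https://api.zenrows.com/v1/";

function buildZenRowsUrl(apiKey, targetUrl, selectors) {
  const params = new URLSearchParams({
    apikey: apiKey,
    url: targetUrl,
    // Render the page in a headless browser so dynamic content is present.
    js_render: "true",
    // css_extractor is a JSON object mapping output keys to CSS selectors.
    css_extractor: JSON.stringify(selectors),
  });
  // URLSearchParams percent-encodes the target URL and the JSON for us.
  return `${ZENROWS_ENDPOINT}?${params.toString()}`;
}

const requestUrl = buildZenRowsUrl(
  "YOUR_ZENROWS_API_KEY", // placeholder: replace with your real key
  "https://www.scrapingcourse.com/pagination",
  { name: ".product-name", price: ".product-price" }
);
console.log(requestUrl);
```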

Import the cURL into Pipedream

- In Pipedream, add an

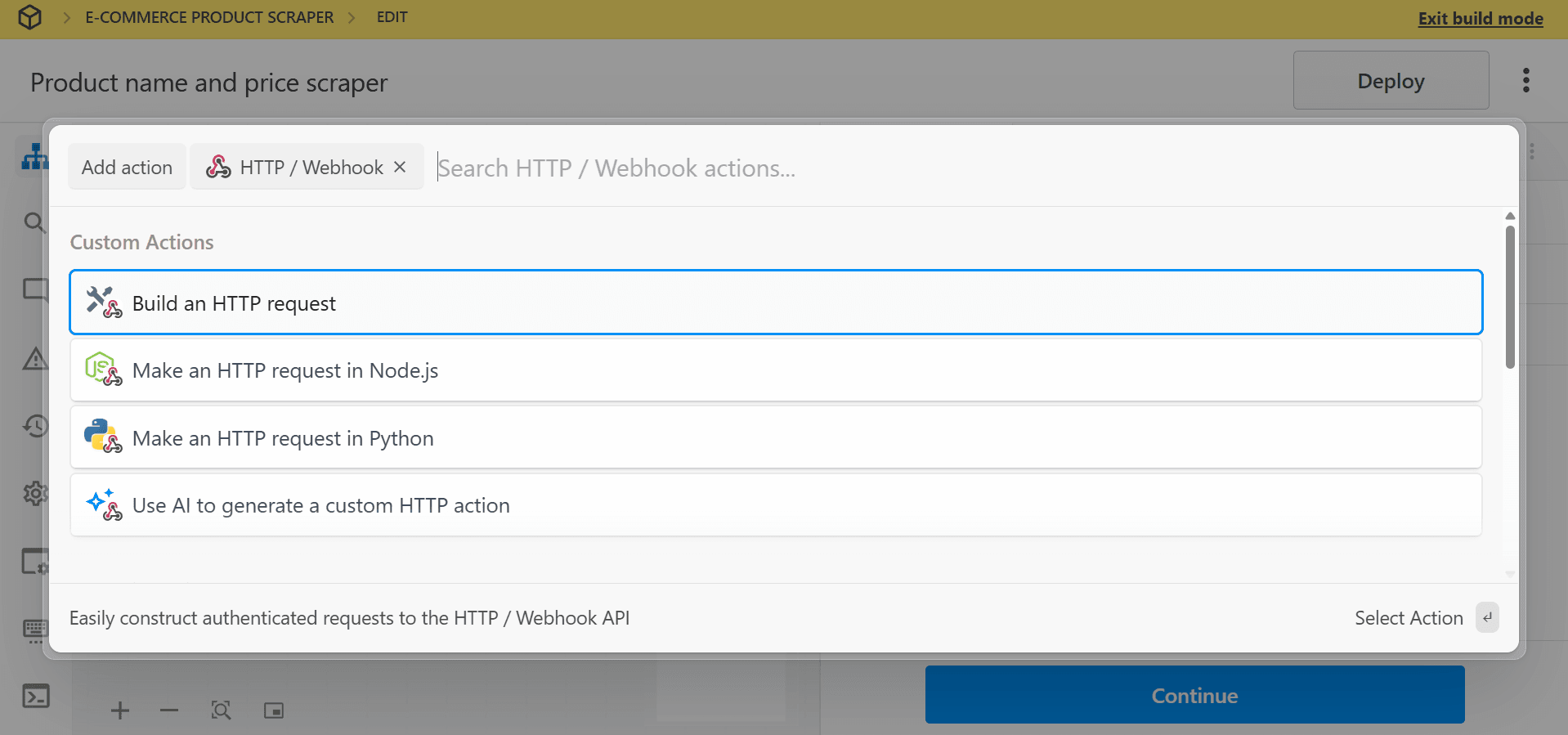

HTTP requeststep:- Click the

+button below your trigger to add a new step. - Search for and select

HTTP / Webhook. - Choose

Build an HTTP requestfrom the actions.

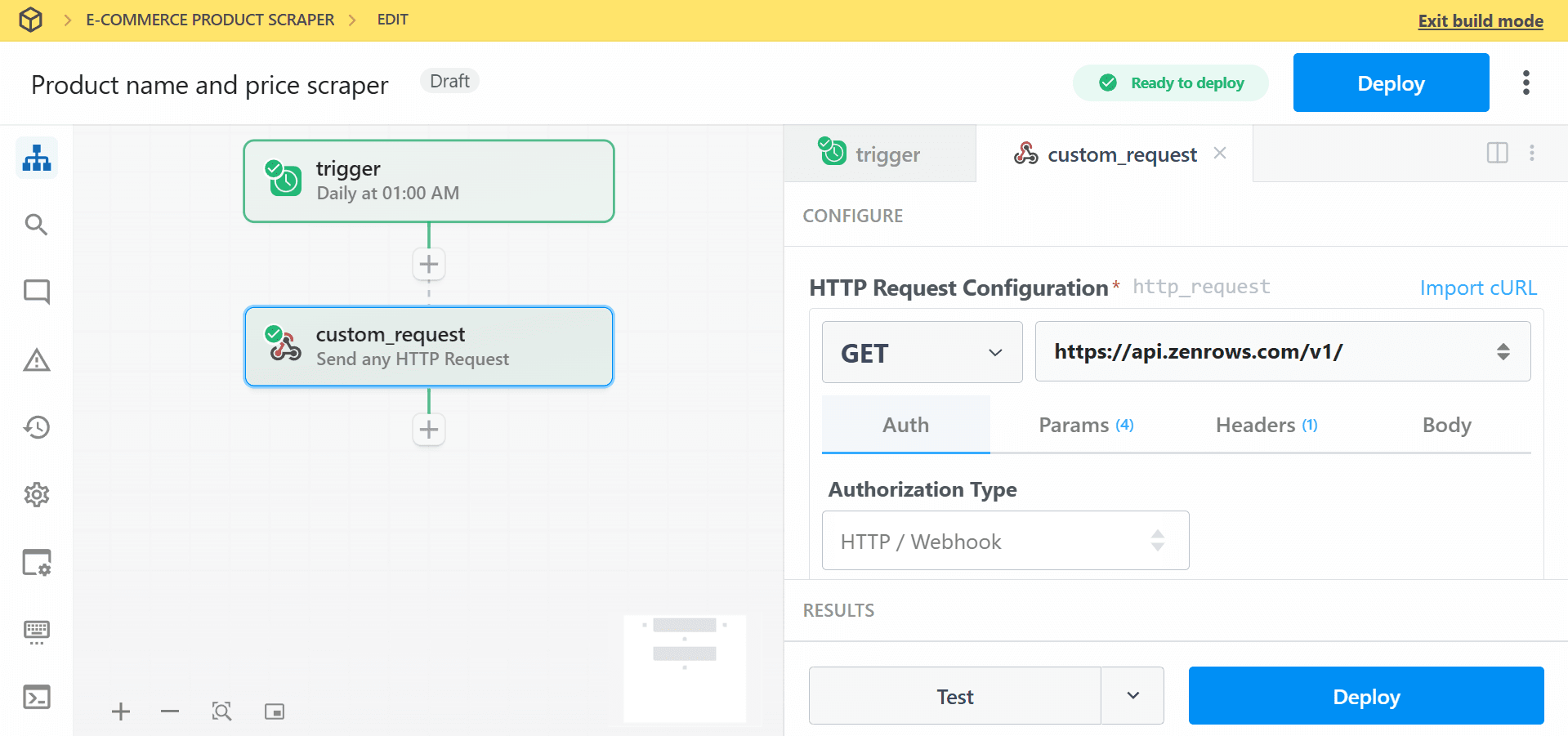

- Click the

- Click

Import cURLand configure the HTTP request using the copied cURL code.

- Test the step to confirm your setup works correctly. You should receive the extracted product names and prices.

4. Process and transform the scraped data

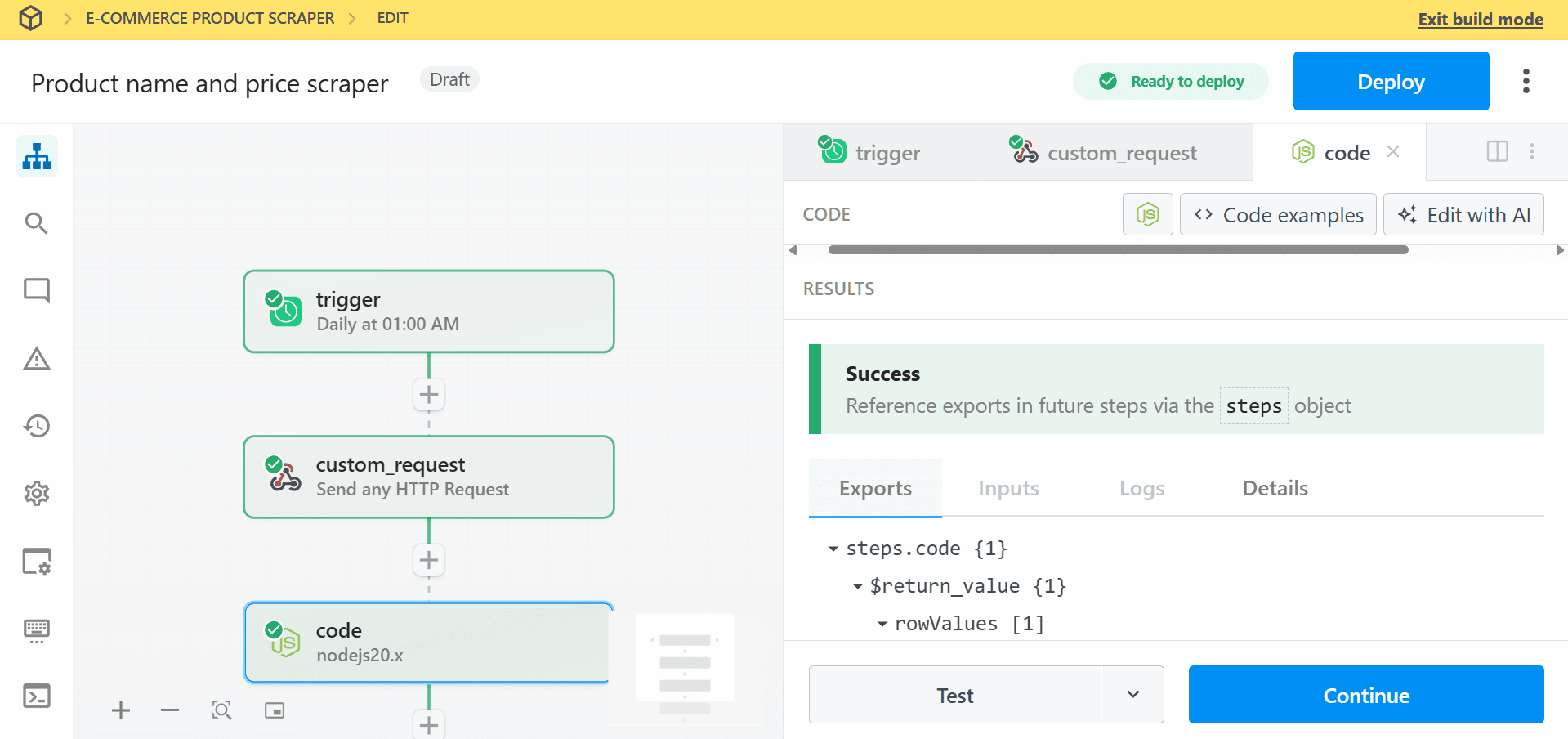

- Click the + button to add another step.

- Select the Node action and choose Run Node code.

- Add data transformation code that formats the scraped data as an array of arrays, which the next step uses.

- Test the step to confirm the code works.
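The exact transformation code depends on how you named your selectors in the Request Builder. As a minimal sketch, assuming the HTTP request step returns a JSON object whose name and price keys hold the matched values (hypothetical key names), the toRowValues function below pairs them into the array of arrays that the Google Sheets Add Multiple Rows action expects. It also wraps a lone string in an array, in case a selector that matches a single element returns a plain string; confirm that behavior against your own test output.

```javascript
// Hypothetical transformation: turn an extractor response such as
// { name: [...], price: [...] } into rows like [[name, price], ...].
function toRowValues(data, nameKey = "name", priceKey = "price") {
  // Normalize each field to an array: missing -> [], single string -> [string].
  const asArray = (value) =>
    Array.isArray(value) ? value : value == null ? [] : [value];

  const names = asArray(data[nameKey]);
  const prices = asArray(data[priceKey]);

  // Pair values by position, stopping at the shorter list so every row
  // has both a name and a price.
  const rowCount = Math.min(names.length, prices.length);
  const rows = [];
  for (let i = 0; i < rowCount; i++) {
    rows.push([String(names[i]).trim(), String(prices[i]).trim()]);
  }
  return rows;
}

// Illustrative input (made-up values, not real scraped data):
const sample = { name: ["Product A", "Product B"], price: ["$10", "$20"] };
// Returns [["Product A", "$10"], ["Product B", "$20"]]
console.log(toRowValues(sample));
```

In a Pipedream Node code step, whatever the step’s run method returns becomes that step’s $return_value, which later steps reference. Shape the returned value so it matches the path you map in the Google Sheets step.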

5. Save results to Google Sheets

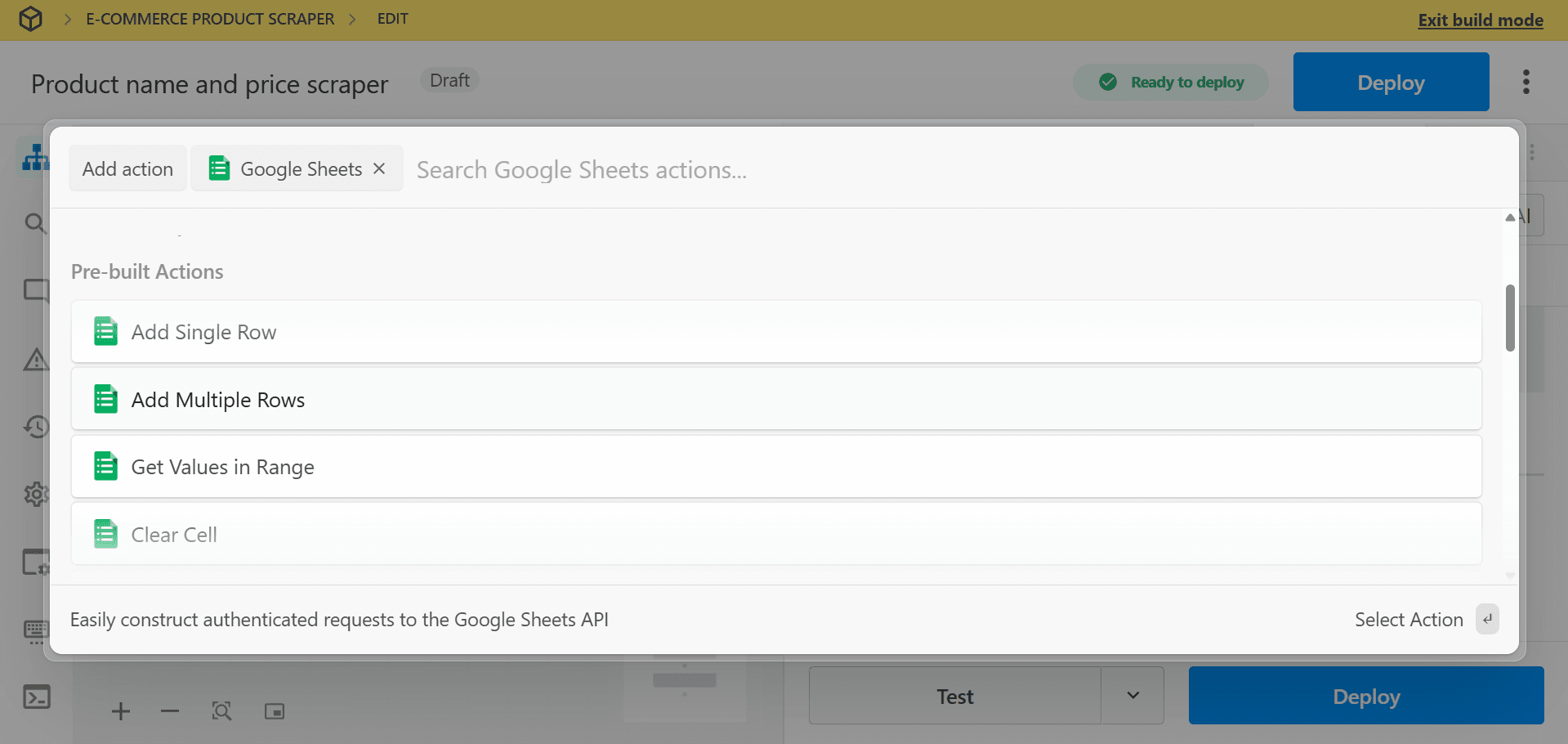

- Click the + button to add a final step.

- Search for and select Google Sheets.

- Choose Add Multiple Rows from the Pre-built Actions.

- Click Connect new account to link your Google account with Pipedream.

- Select your target Spreadsheet from the dropdown (or provide the Spreadsheet ID).

- Choose the Worksheet where you want to store the data.

- Configure Row Values. This field expects an array of arrays. Map the data from your processing step:

{{steps.code.$return_value.rowValues[0]}}

- Click Test step to verify that the data is correctly added to your spreadsheet.

- Click Deploy in the top right corner of your workflow.

Troubleshooting

Authentication Issues

- Check your ZenRows API key: Go to your ZenRows dashboard and copy your API key. Paste it into your workflow exactly as it appears; even a small typo will prevent it from working.

- Verify your subscription status: Make sure your ZenRows subscription is active and your account has usage remaining. If your usage runs out, scraping requests will stop working.
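One way to avoid copy-paste mistakes is to keep the key out of the step’s code. The sketch below assumes you store the key in a Pipedream environment variable named ZENROWS_API_KEY (the name is an assumption) and read it through process.env; readZenRowsApiKey is a hypothetical helper that trims stray whitespace and fails early when the value is missing or malformed.

```javascript
// Hypothetical helper: read the ZenRows API key from an environment variable
// (the default name ZENROWS_API_KEY is an assumption) and catch common
// copy-paste mistakes before any request is sent.
function readZenRowsApiKey(env = process.env, varName = "ZENROWS_API_KEY") {
  const raw = env[varName];
  if (typeof raw !== "string" || raw.trim() === "") {
    throw new Error(`${varName} is not set or is empty`);
  }
  const key = raw.trim();
  if (key !== raw) {
    // Leading/trailing spaces or newlines from copy-paste can break authentication.
    console.warn(`${varName} had leading or trailing whitespace; using the trimmed value`);
  }
  if (/\s/.test(key)) {
    throw new Error(`${varName} contains whitespace inside the key; copy it again from the dashboard`);
  }
  return key;
}

// Usage inside a Node code step (throws if the variable is not configured):
// const apiKey = readZenRowsApiKey();
```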

No Data Retrieved

- Check whether the page loads content right away: Visit the page in your browser. If the data doesn’t appear immediately but shows up a few seconds later, the content is loaded dynamically.

- Enable JavaScript rendering: If the website loads content using JavaScript, enable JS Rendering in ZenRows. This lets ZenRows render the page like a real browser and capture the full content.

- Check your CSS selectors: Right-click the item you want to scrape (such as a product name or price) and select “Inspect” to find its CSS selector. Make sure the selectors you use match the elements on the page.

- Allow more time for the page to load: If the content takes a few seconds to appear, increase the wait time in ZenRows with the wait or wait_for options. This gives the page more time to load fully before ZenRows scrapes it.
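If you build the request URL yourself instead of importing cURL, the wait options are ordinary query parameters. A minimal sketch, assuming ZenRows’ js_render, wait (milliseconds), and wait_for (CSS selector) parameters, which ZenRows applies only with JS rendering enabled; withWaitOptions is a hypothetical helper.

```javascript
// Hypothetical helper: add ZenRows wait options to an existing request URL.
function withWaitOptions(requestUrl, { waitMs, waitFor } = {}) {
  const url = new URL(requestUrl);
  // The wait options depend on JavaScript rendering, so make sure it is on.
  url.searchParams.set("js_render", "true");
  if (waitFor) {
    // Wait until an element matching this CSS selector is present.
    url.searchParams.set("wait_for", waitFor);
  }
  if (waitMs) {
    // Wait a fixed number of milliseconds after the page loads.
    url.searchParams.set("wait", String(waitMs));
  }
  return url.toString();
}

// Example: wait for the price elements on the demo page to render.
console.log(
  withWaitOptions(
    "https://api.zenrows.com/v1/?apikey=YOUR_ZENROWS_API_KEY&url=https%3A%2F%2Fwww.scrapingcourse.com%2Fpagination",
    { waitFor: ".product-price" }
  )
);
```

When you know which element holds the data, wait_for is usually the better choice: it stops waiting once that element appears, whereas wait always pauses for the full duration.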

Workflow Execution Failures

- Look at the error logs: In Pipedream, check the logs of the failed run for a specific error message. This can help you understand what went wrong.

- Review each step in your workflow: Make sure each step has the correct data and settings. If a step depends on data from a previous one, double-check that the references between steps are correct.

- Confirm the format of the API response: Each step expects data in a specific shape. Make sure your workflow handles the response correctly, especially when you extract specific fields such as product names or prices.
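When you extract specific fields, the response should be a JSON object with one key per selector you configured. The hypothetical checkExtractorResponse helper below sketches a sanity check you could run in a Node code step before transforming the data. It reports a non-object response (for example, raw HTML, which is what you would typically get without a CSS extractor), missing keys, and keys whose item counts differ. The key names are assumptions that should match your own selector names.

```javascript
// Hypothetical check: returns a list of problems found in an extractor
// response; an empty list means the shape looks usable.
function checkExtractorResponse(data, expectedKeys) {
  if (data === null || typeof data !== "object" || Array.isArray(data)) {
    return ["response is not a JSON object"];
  }
  const problems = [];
  const counts = {};
  for (const key of expectedKeys) {
    if (!(key in data)) {
      problems.push(`missing key "${key}"`);
      continue;
    }
    // A single match may be a plain string; count it as one item.
    counts[key] = Array.isArray(data[key]) ? data[key].length : 1;
  }
  // Fields that will be paired row by row should have the same number of items.
  if (new Set(Object.values(counts)).size > 1) {
    problems.push(`keys have different item counts: ${JSON.stringify(counts)}`);
  }
  return problems;
}

// Example with made-up data: one price is missing, so the counts differ.
console.log(checkExtractorResponse({ name: ["A", "B"], price: ["$1"] }, ["name", "price"]));
```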

Conclusion

You’ve learned how to integrate ZenRows with Pipedream. The workflow you built runs on a schedule, scrapes product names and prices with ZenRows, reshapes the results, and adds them to Google Sheets; the same pattern lets you collect, process, and send scraped data to the other tools you use.

Frequently Asked Questions (FAQs)

How do I handle dynamic content in ZenRows?

Some websites load their content a few seconds after the page opens. To make sure ZenRows captures everything, turn on JavaScript rendering in your API request. This lets ZenRows wait and load the page like a real browser before scraping.

What CSS selectors should I use for scraping specific data?

CSS selectors tell ZenRows what data to extract from the page. For example, if you want to collect product names, you might use .product-name. To find the right selector, open the page in your browser, right-click the item you want, and select “Inspect” to view its code.

Can I integrate ZenRows with other tools besides Google Sheets?

Yes. You can connect ZenRows to other platforms using tools like Pipedream or Zapier. This allows you to send scraped data to CRMs, databases, Slack, or any tool that accepts API connections.

How do I schedule automatic scraping at regular intervals?

In Pipedream, use the “Schedule” trigger to run your scraping workflow automatically. You can choose how often it runs, such as daily at a specific time (for example, every day at 1:00 AM UTC).

What are the limits of the ZenRows and Pipedream integration?

Limits depend on your ZenRows plan, for example how much usage it includes. Pipedream also limits how many times workflows can run. Check both platforms to see your current usage and remaining limits.