What Is Zapier & Why Integrate It with ZenRows?

Zapier is a no-code platform that automates repetitive tasks by linking applications into workflows. Each workflow consists of a trigger and one or more actions. Triggers can be scheduled, event-based, or manually initiated using webhooks. When combined with ZenRows, Zapier enables full automation of your scraping process. Here are some key benefits:

- Scheduled scraping: Run scraping tasks on a regular schedule, such as hourly or daily, without manual input.

- No-code setup: Create scraping workflows without needing to write or maintain code.

- Simplified scraping: ZenRows handles JavaScript rendering, rate limits, dynamic content, anti-bot protection, and geo-restrictions automatically.

- Seamless data integration: Store results directly in tools like Google Sheets, Excel, SQL databases, or visualization platforms like Tableau.

- Automated monitoring: Track price changes, stock updates, and website modifications with minimal effort.

Watch the Video Tutorial

Learn how to set up the Zapier ↔ ZenRows integration step by step by watching this video tutorial.

ZenRows Integration Options

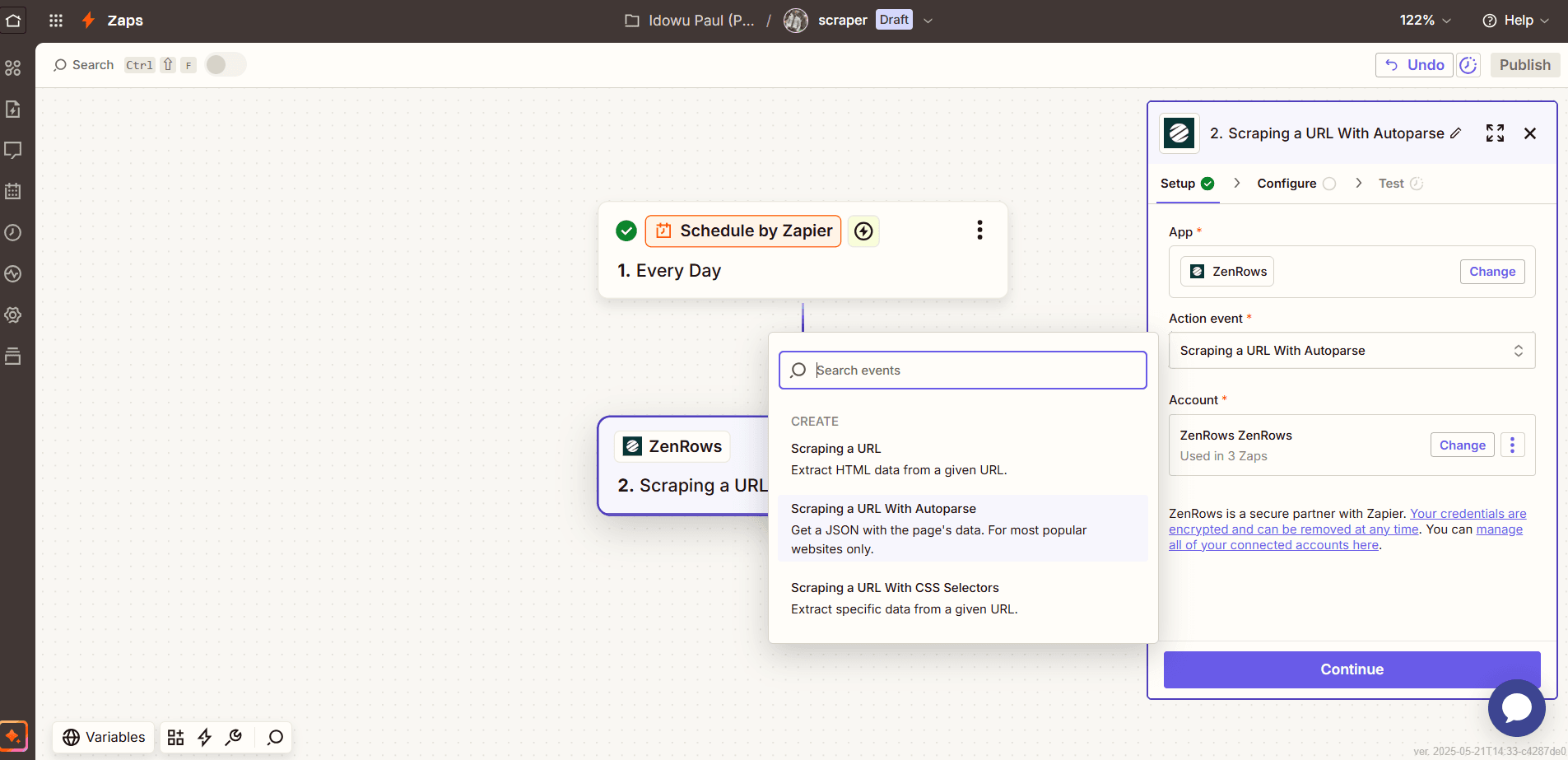

With ZenRows, you can perform various web scraping tasks through Zapier:

- Scraping a URL: Returns the full-page HTML of a given URL.

- Scraping a URL With CSS Selectors: Extracts specific data from a URL based on given selectors.

- Scraping a URL With Autoparse: Parses a web page automatically and returns relevant data in JSON format.

The autoparse option only works for some websites. Learn more about how it works in the Autoparse FAQ.
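To make the difference between the three actions concrete, here is a minimal Python sketch of the request each one would roughly correspond to outside Zapier. It assumes the actions map onto the public ZenRows API endpoint (`https://api.zenrows.com/v1/`) and its `autoparse` and `css_extractor` query parameters, and that the direct API takes the selectors as a JSON object. How the Zapier app builds its requests internally is not described in this guide, and `build_request_params` and `build_request_url` are hypothetical helper names.

```python
import json
from urllib.parse import urlencode

# Assumed endpoint of the ZenRows API (not taken from this guide).
ZENROWS_ENDPOINT = "https://api.zenrows.com/v1/"


def build_request_params(api_key, url, mode="html", selectors=None):
    """Return query parameters for one of the three scraping modes.

    mode: "html" (full page), "css" (CSS selectors), or "autoparse".
    selectors: dict of field name -> CSS selector, required for "css".
    """
    params = {"apikey": api_key, "url": url}
    if mode == "css":
        if not selectors:
            raise ValueError("CSS mode needs at least one selector")
        # Assumption: the API expects the selectors as a JSON-encoded object.
        params["css_extractor"] = json.dumps(selectors)
    elif mode == "autoparse":
        params["autoparse"] = "true"
    elif mode != "html":
        raise ValueError(f"unknown mode: {mode}")
    return params


def build_request_url(params):
    """Encode the parameters into a full request URL (no network call)."""
    return f"{ZENROWS_ENDPOINT}?{urlencode(params)}"
```

The helpers only build the request; sending it (for example with an HTTP client) and handling the response are left out so the sketch stays independent of any account or network access.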

Real-World Integration

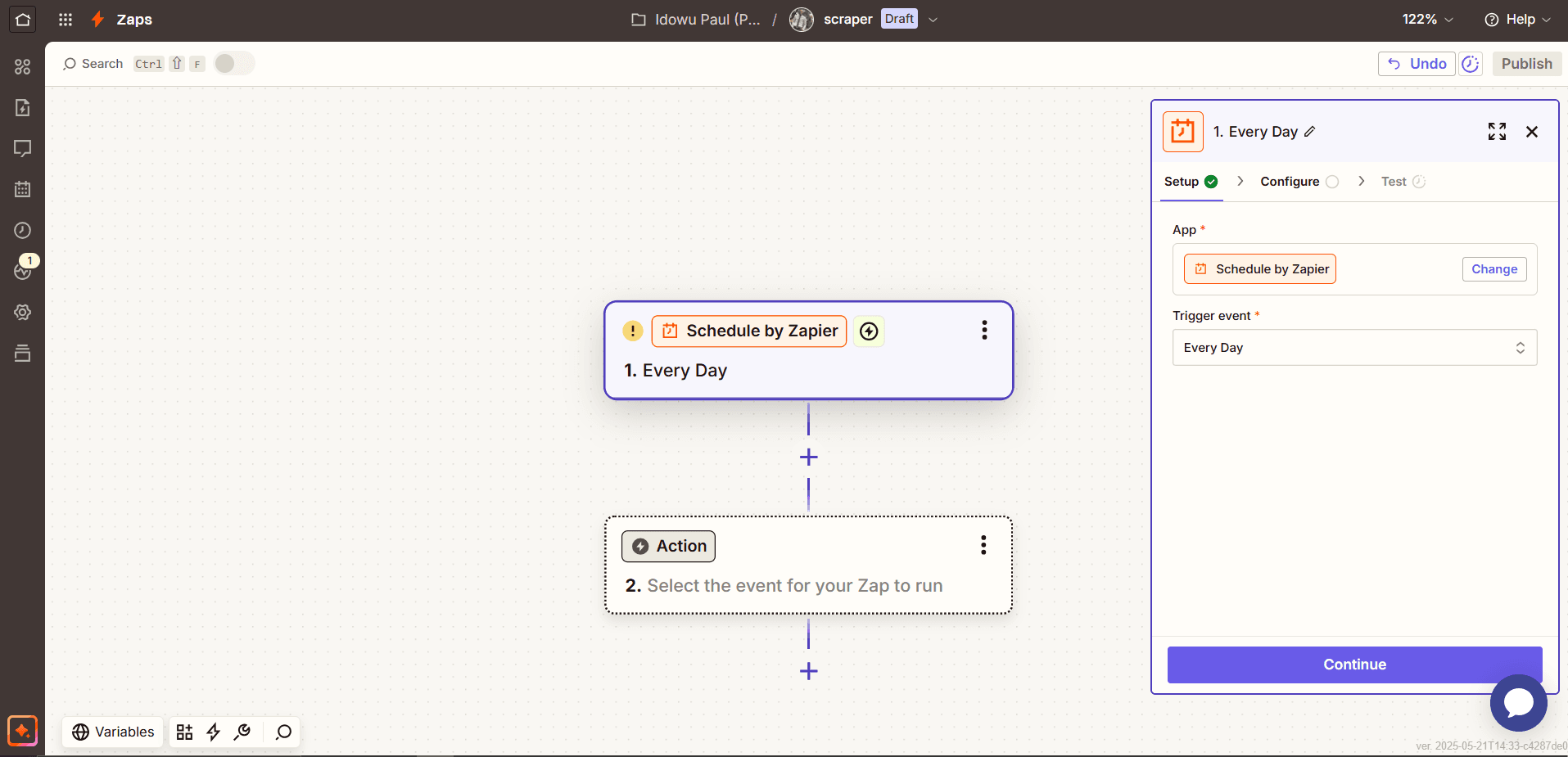

In this guide, we’ll use Zapier’s schedule to automate web scraping with ZenRows’ autoparse integration option. This setup will enable you to collect data at regular intervals and store it in a Google Sheet.

Step 1: Create a new trigger on Zapier

- Log in to your Zapier account.

- Click Create at the top left and select Zap.

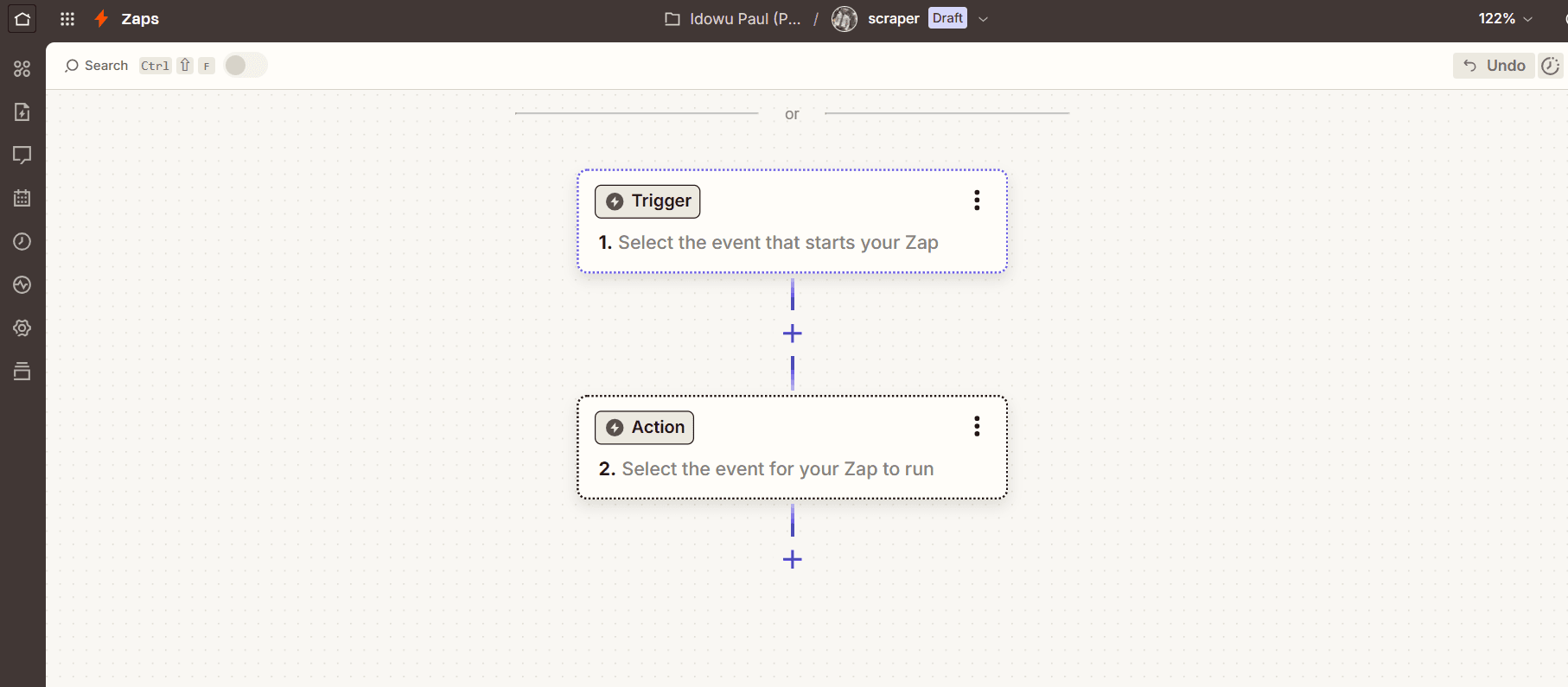

- Click

Untitled Zapat the top and selectRenameto give your Zap a specific name. - Click

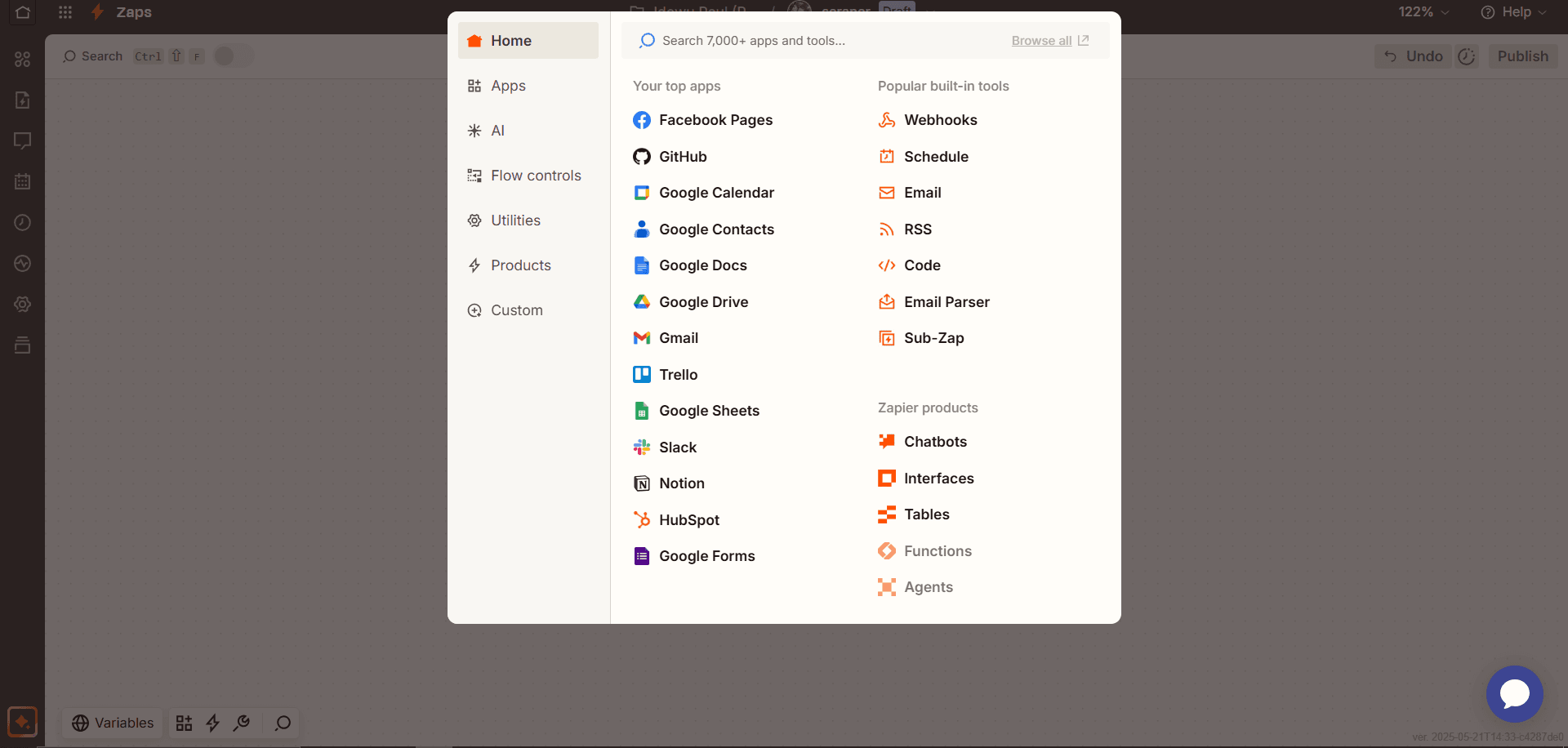

Trigger.

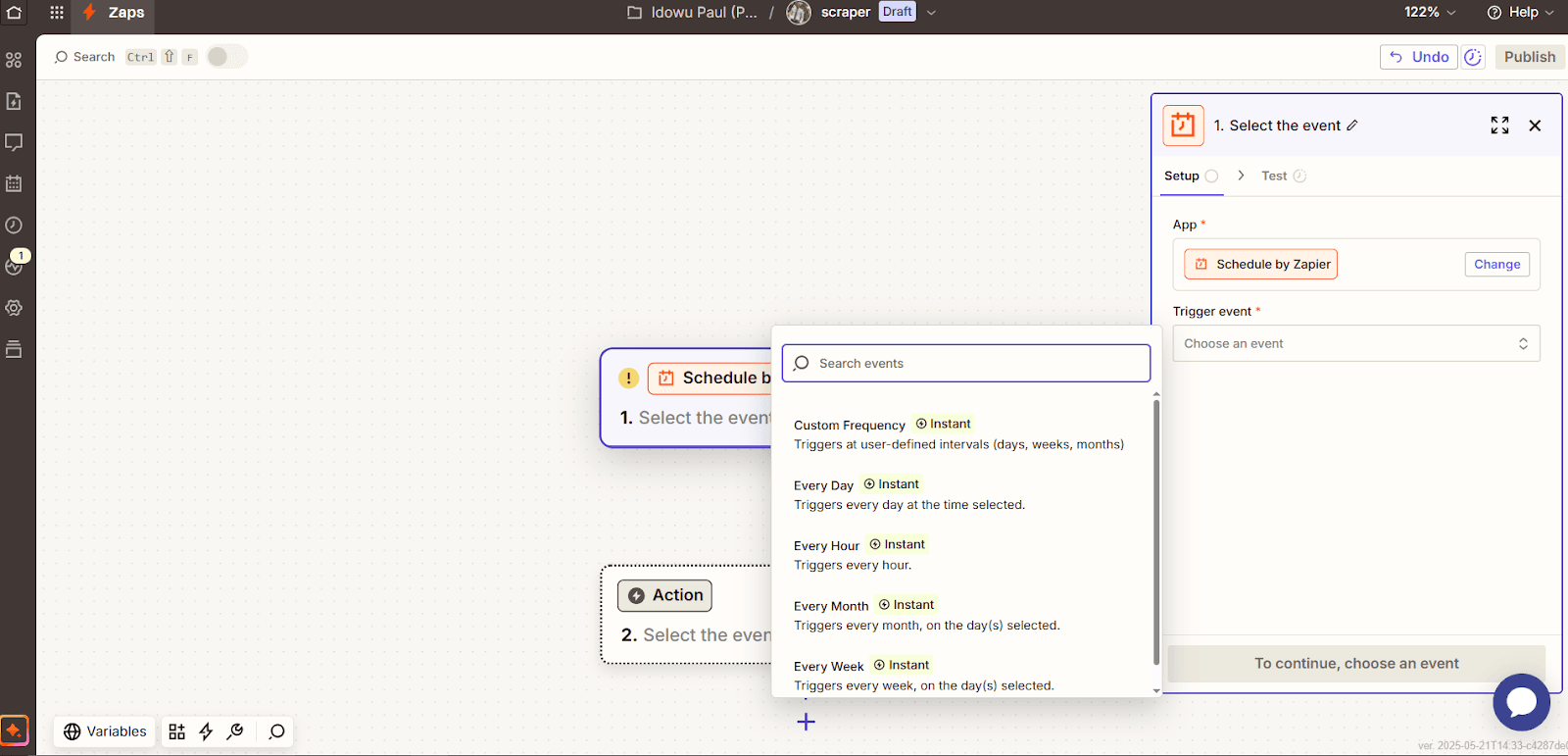

- Select Schedule to create a scheduled trigger.

- Click the Choose an event dropdown and choose a frequency. You can customize the frequency or choose from existing ones (e.g., “Every Day”).

- Click Continue.

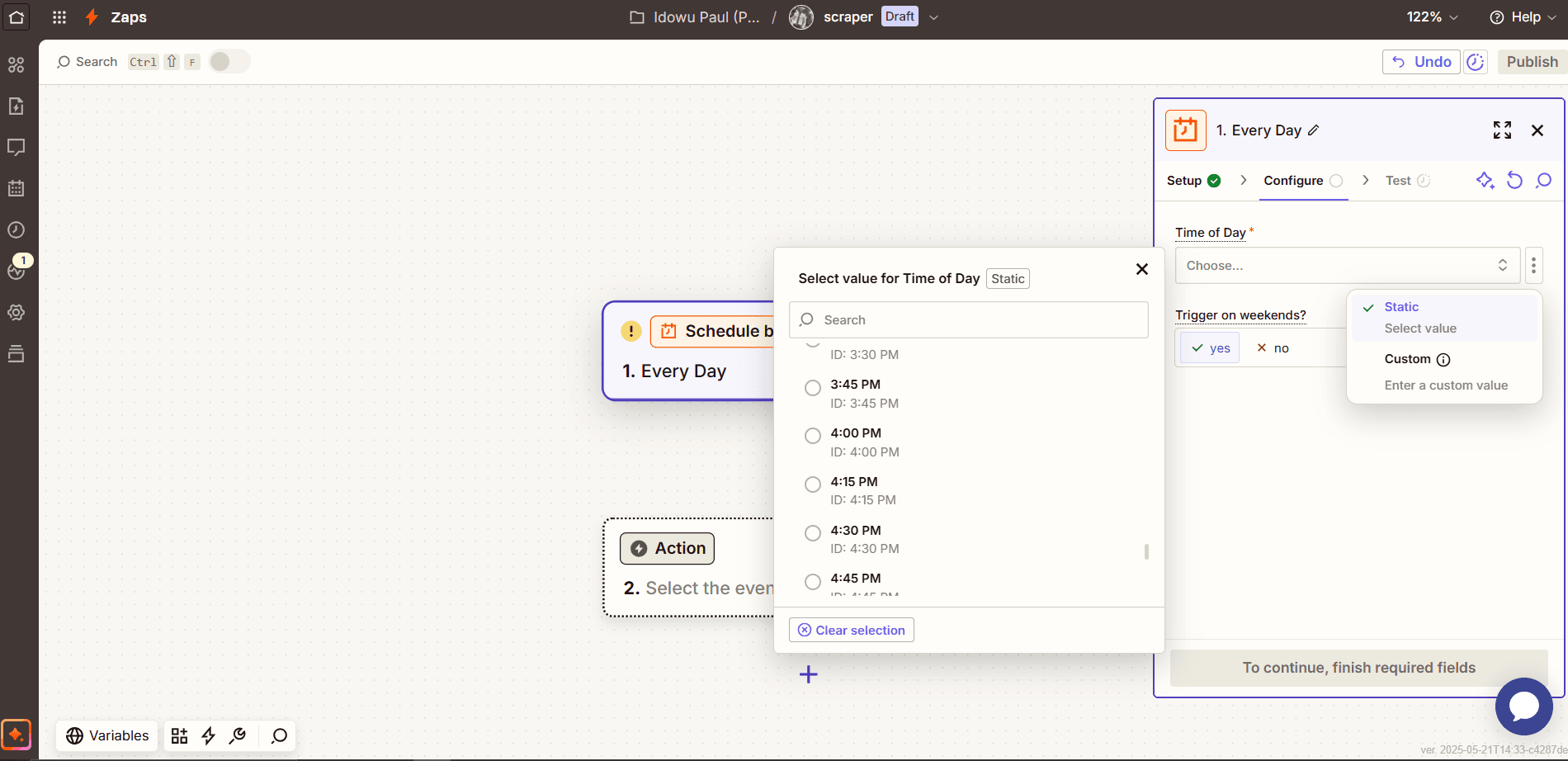

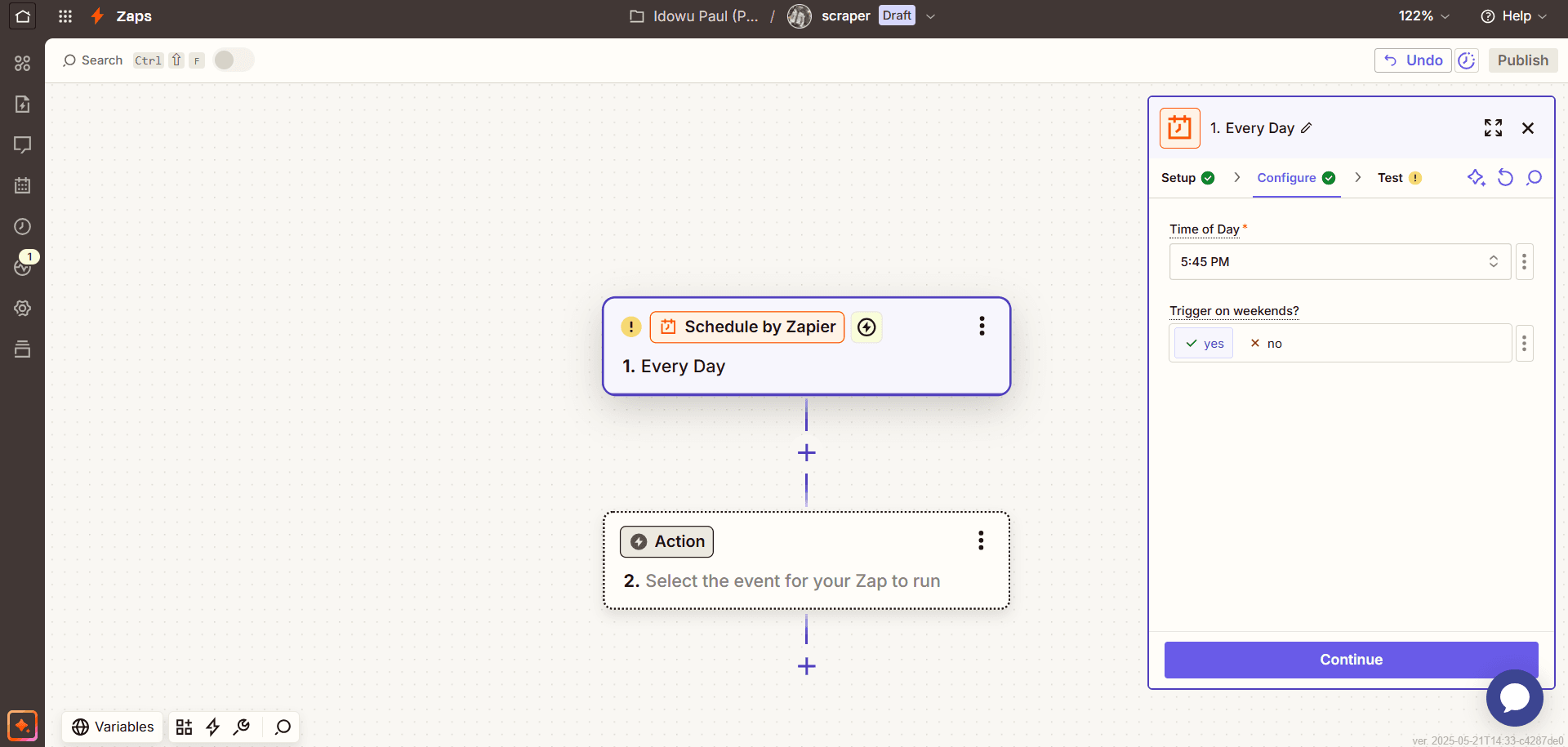

- Click the Time of the day dropdown and choose a scheduled time for the trigger, or click the option icon to customize.

- Click Continue.

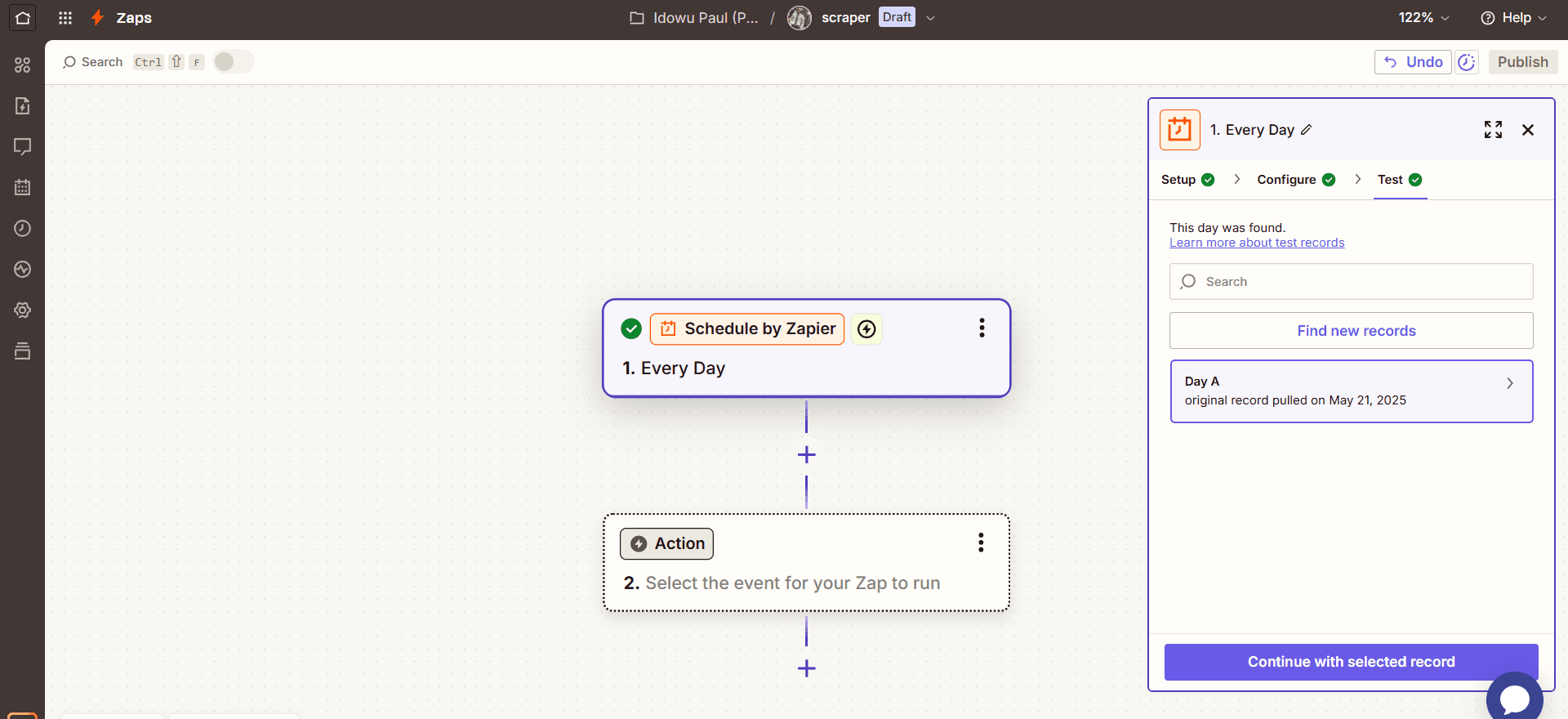

- Click Test trigger and then Continue with selected record.

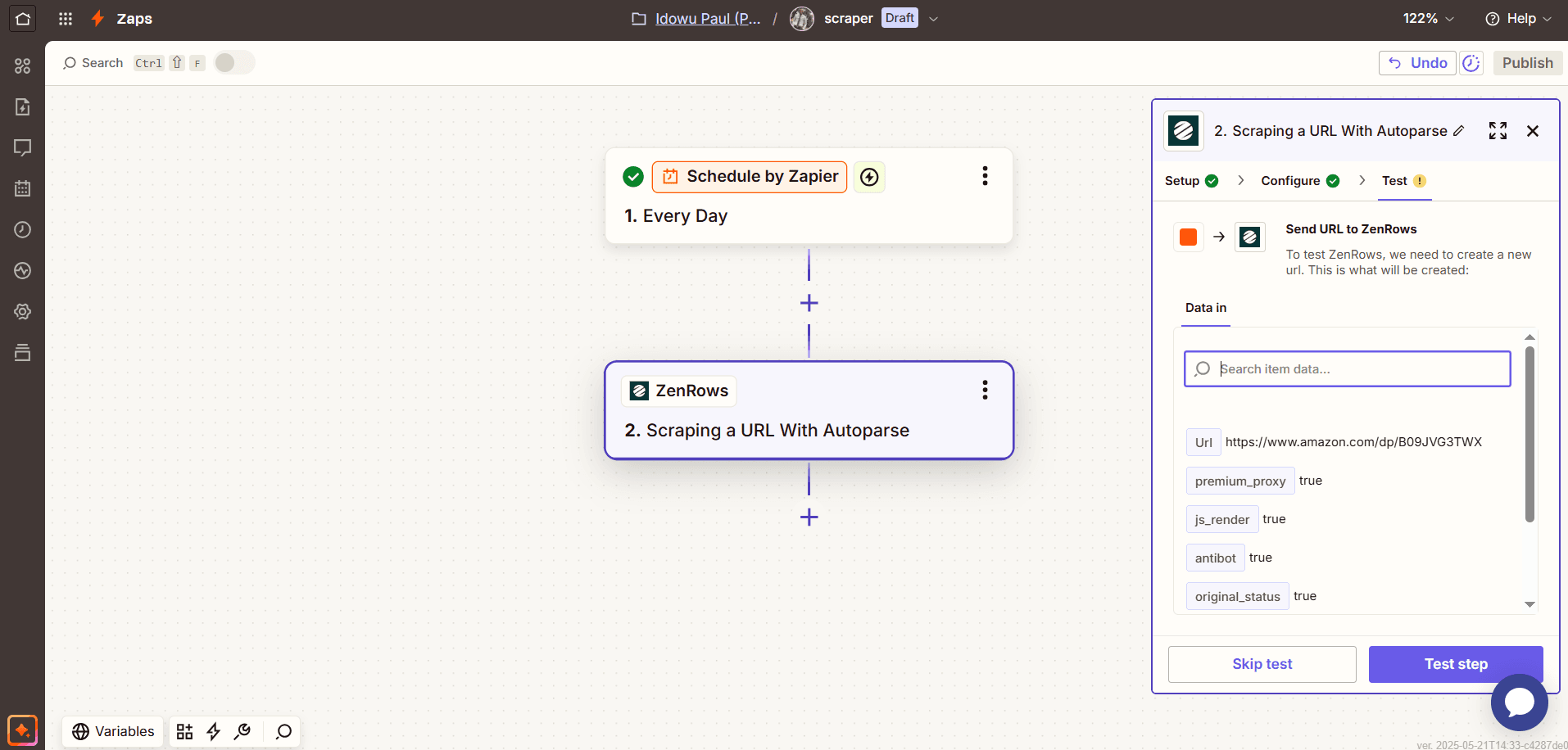

Step 2: Add ZenRows Scraping Action

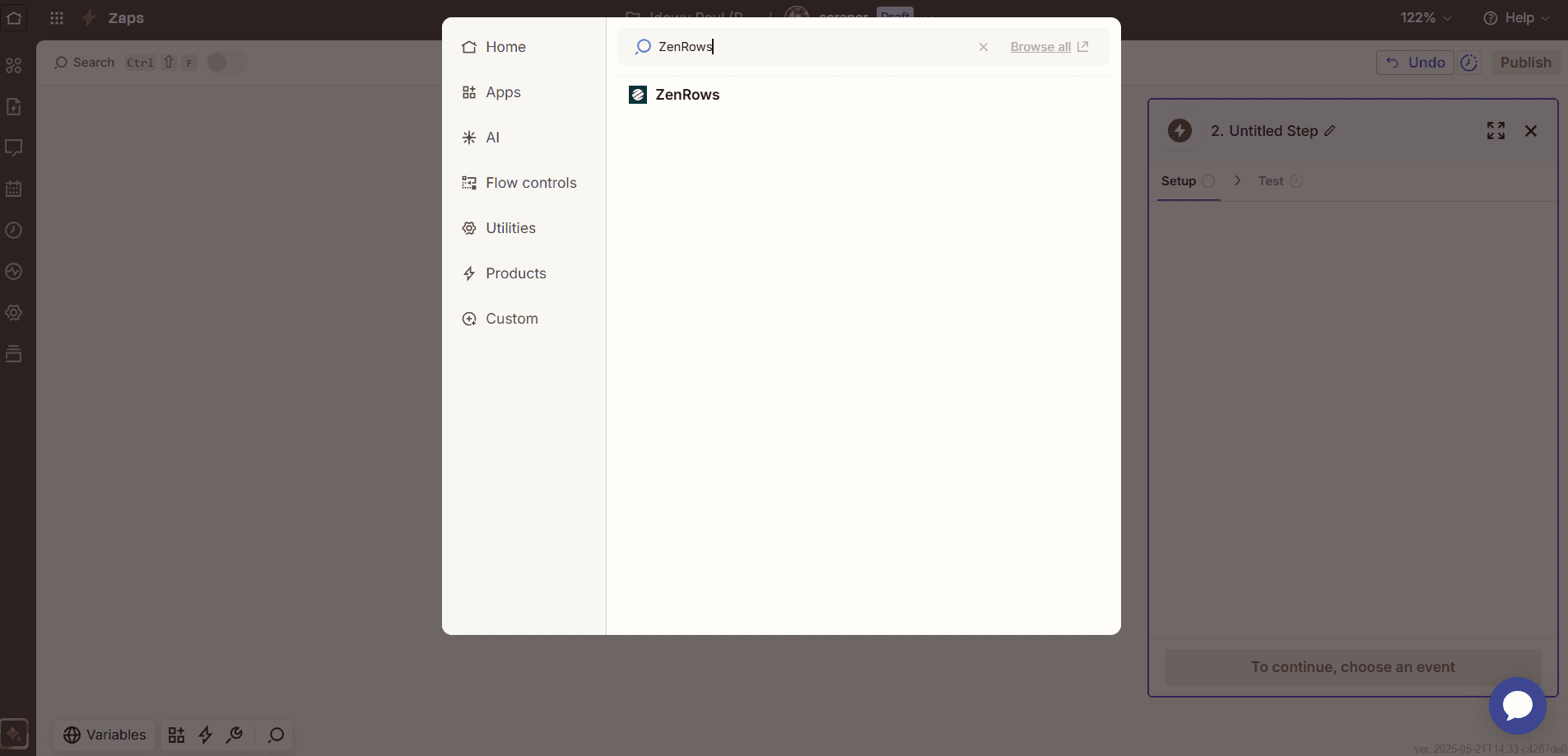

- Type ZenRows in the search bar and click it when it appears.

- Click the Action event dropdown and select Scraping a URL With Autoparse. Then click Continue.

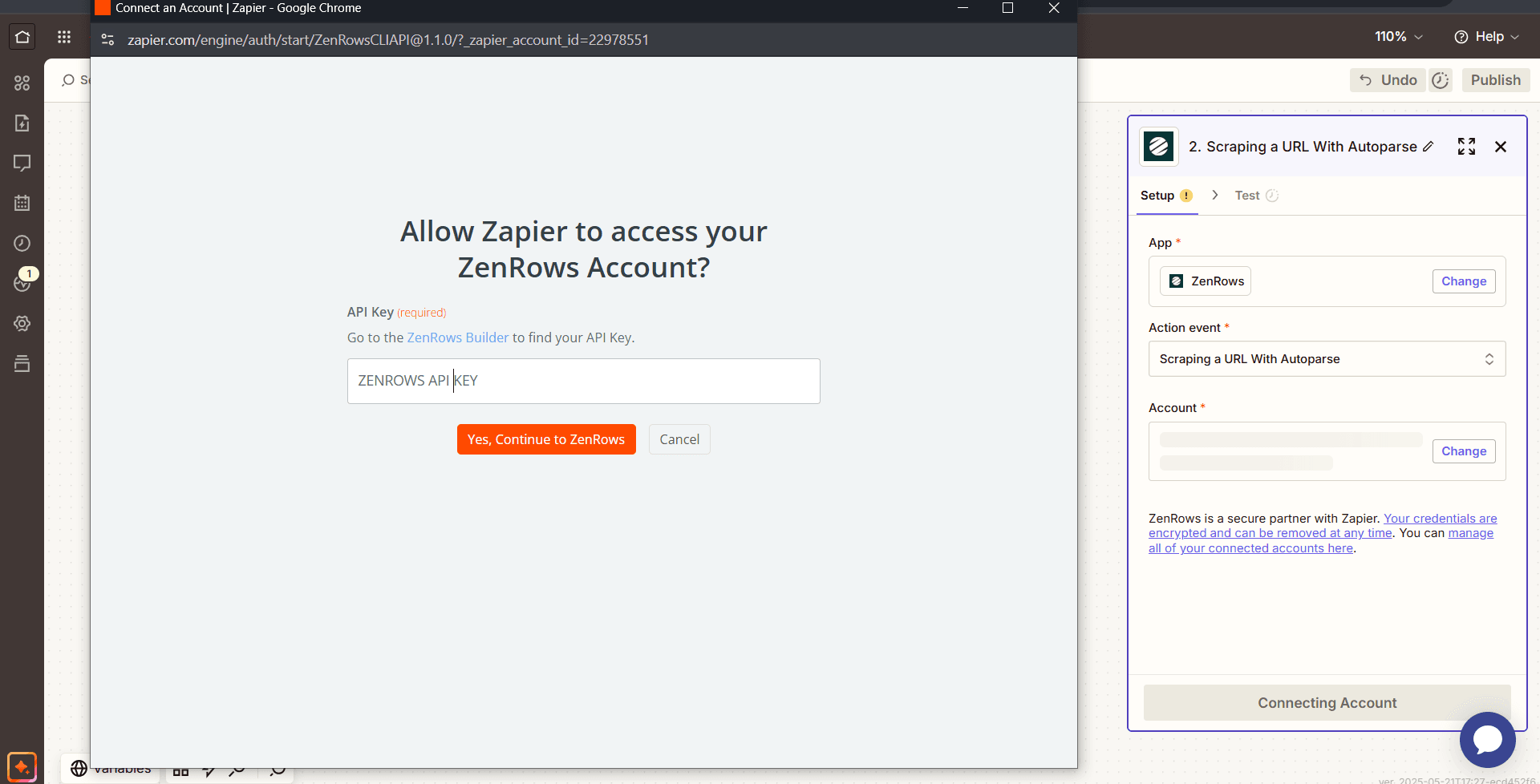

- Click the Connect ZenRows box and paste your ZenRows API key in the pop-up box. Click Yes, Continue to ZenRows, then Continue.

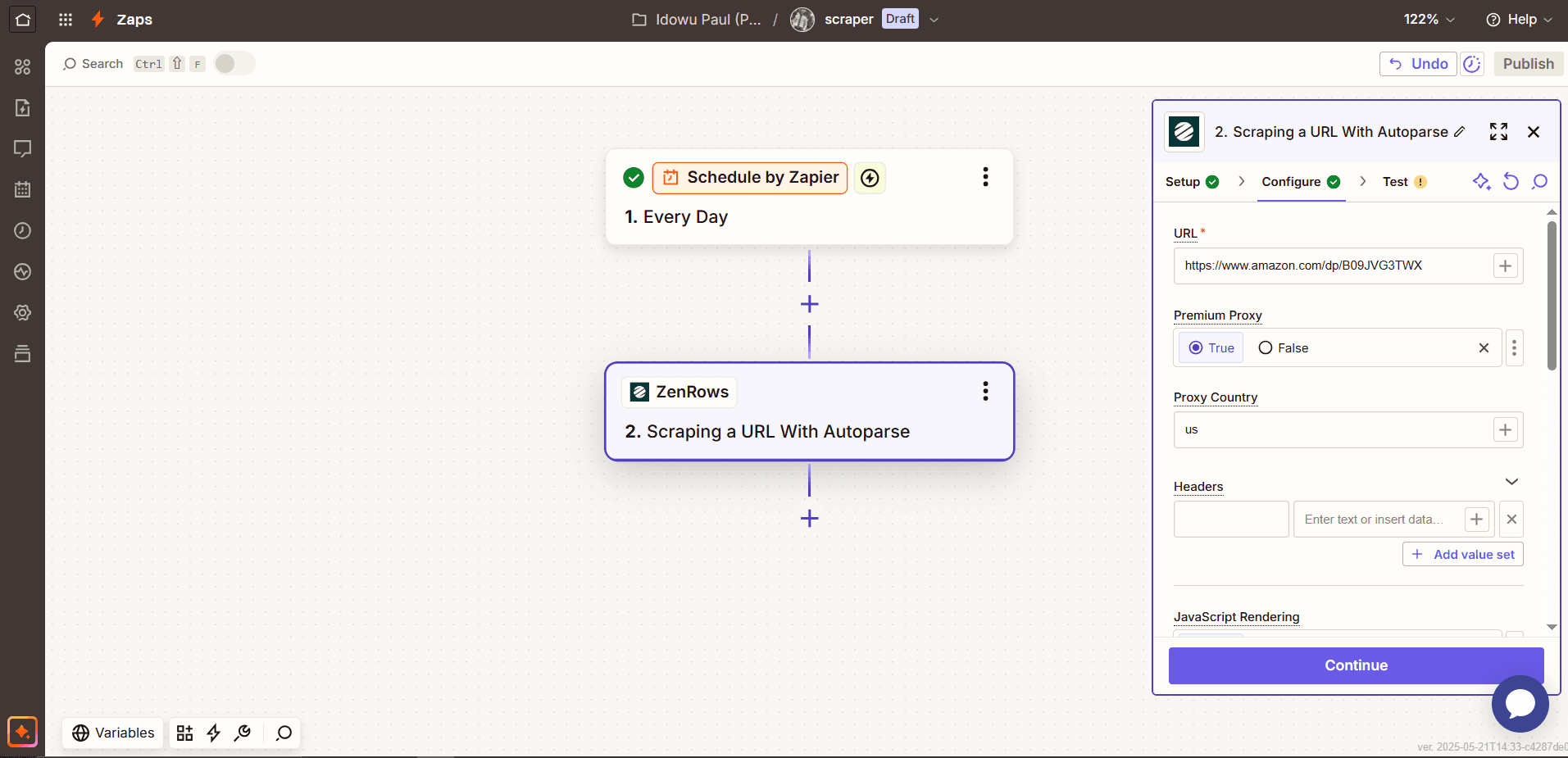

- Paste the following URL in the URL box: https://www.amazon.com/dp/B0DKJMYXD2/

- Select True for Adaptive Stealth Mode and click Continue.

- Click Test step to confirm the integration and pull initial data from the page.

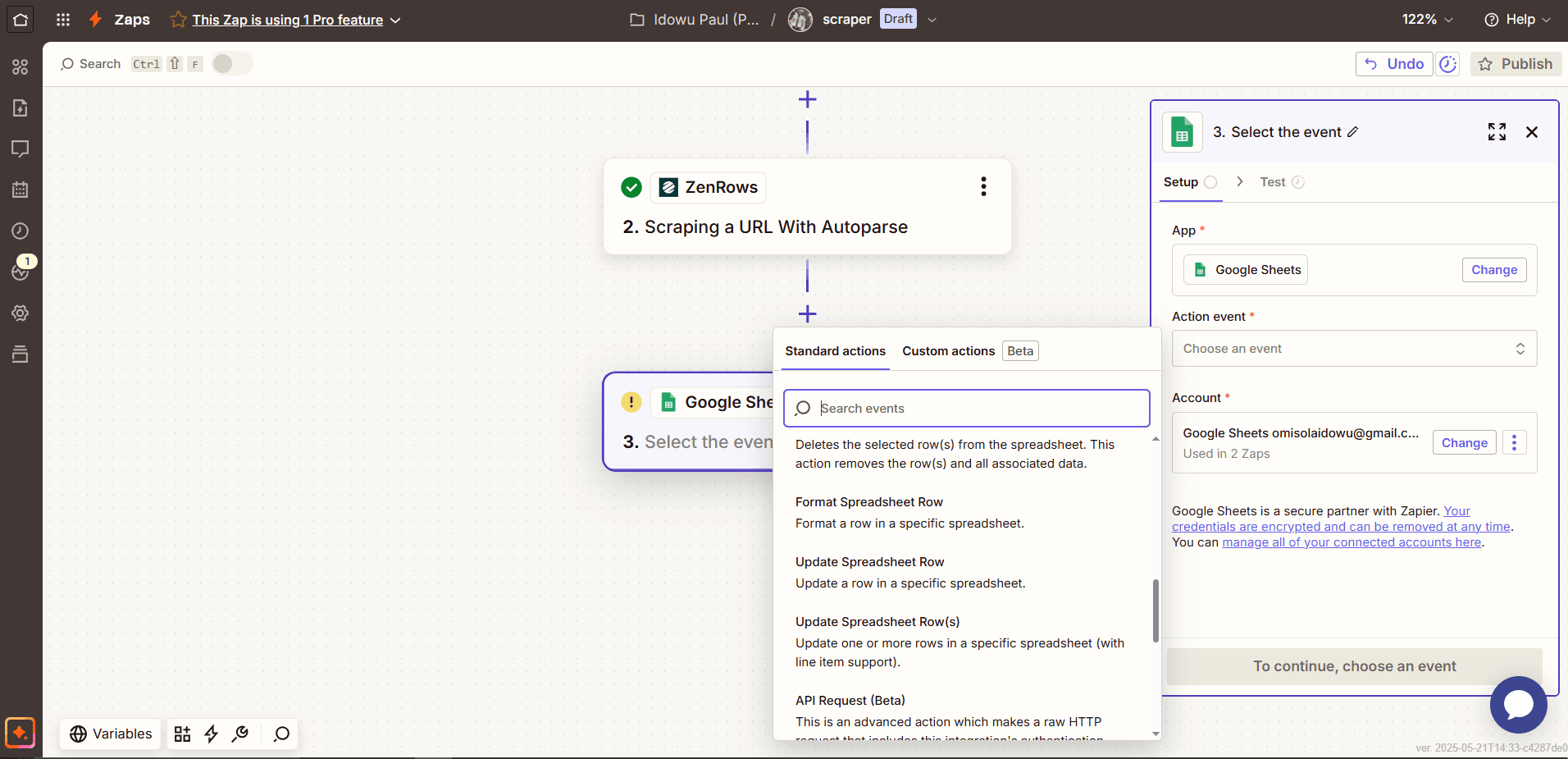

Step 3: Save the extracted data

- Click the + icon below the ZenRows step. Then, search for and select Google Sheets.

- Click the Action event dropdown and select Create Spreadsheet Row.

- Click the Account box to connect your Google account, and click Continue.

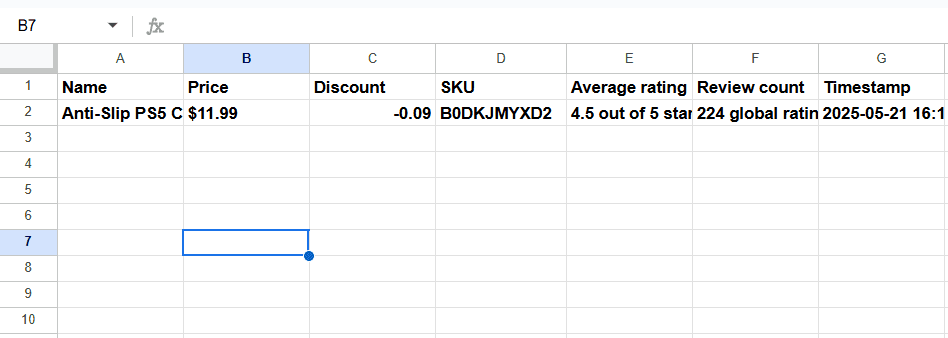

- Add the following column names to the spreadsheet you want to connect:

  - Name

  - Price

  - Discount

  - SKU

  - Average rating

  - Review count

  - Timestamp

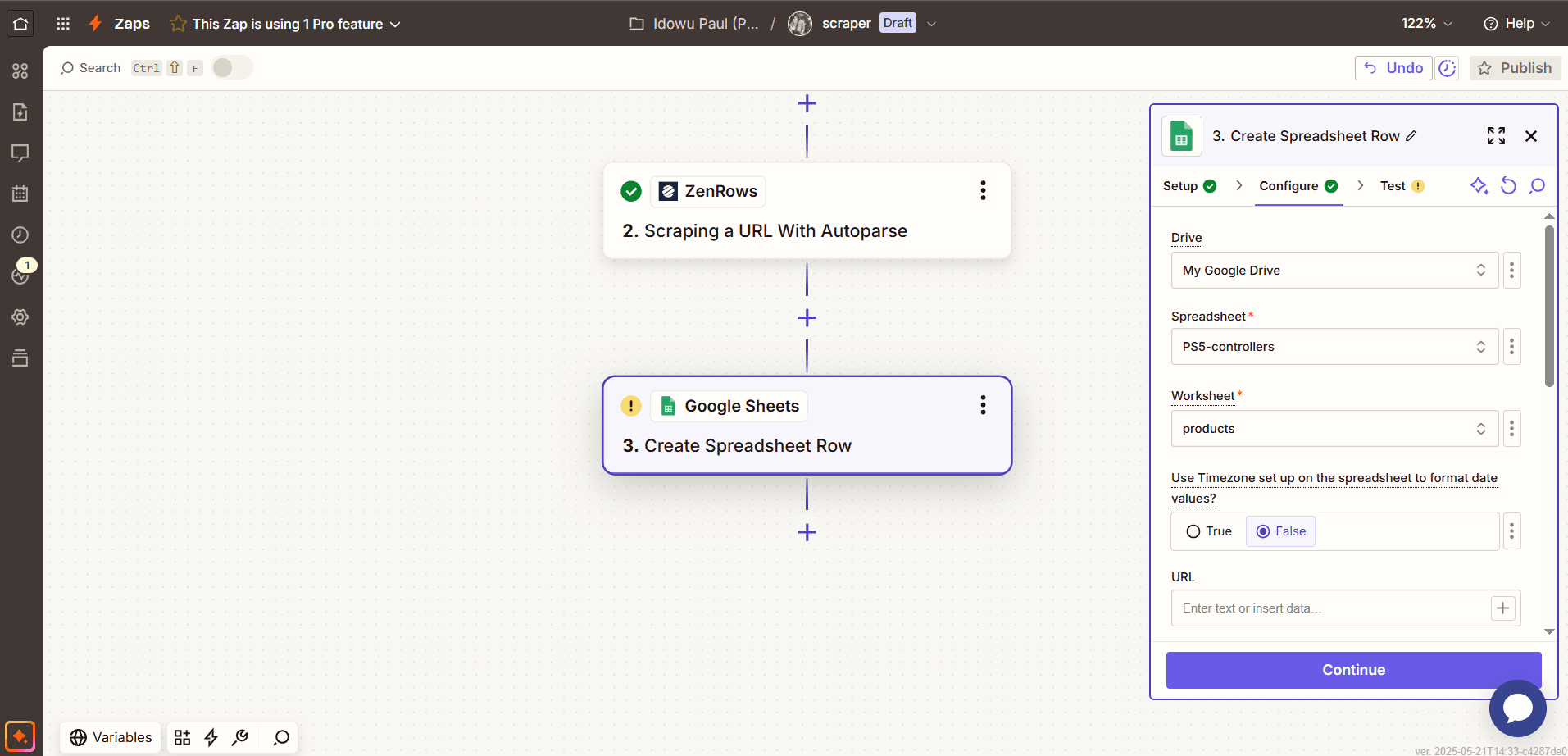

- Click the Drive box and select your Google Sheets location. Choose the target Spreadsheet and Worksheet.

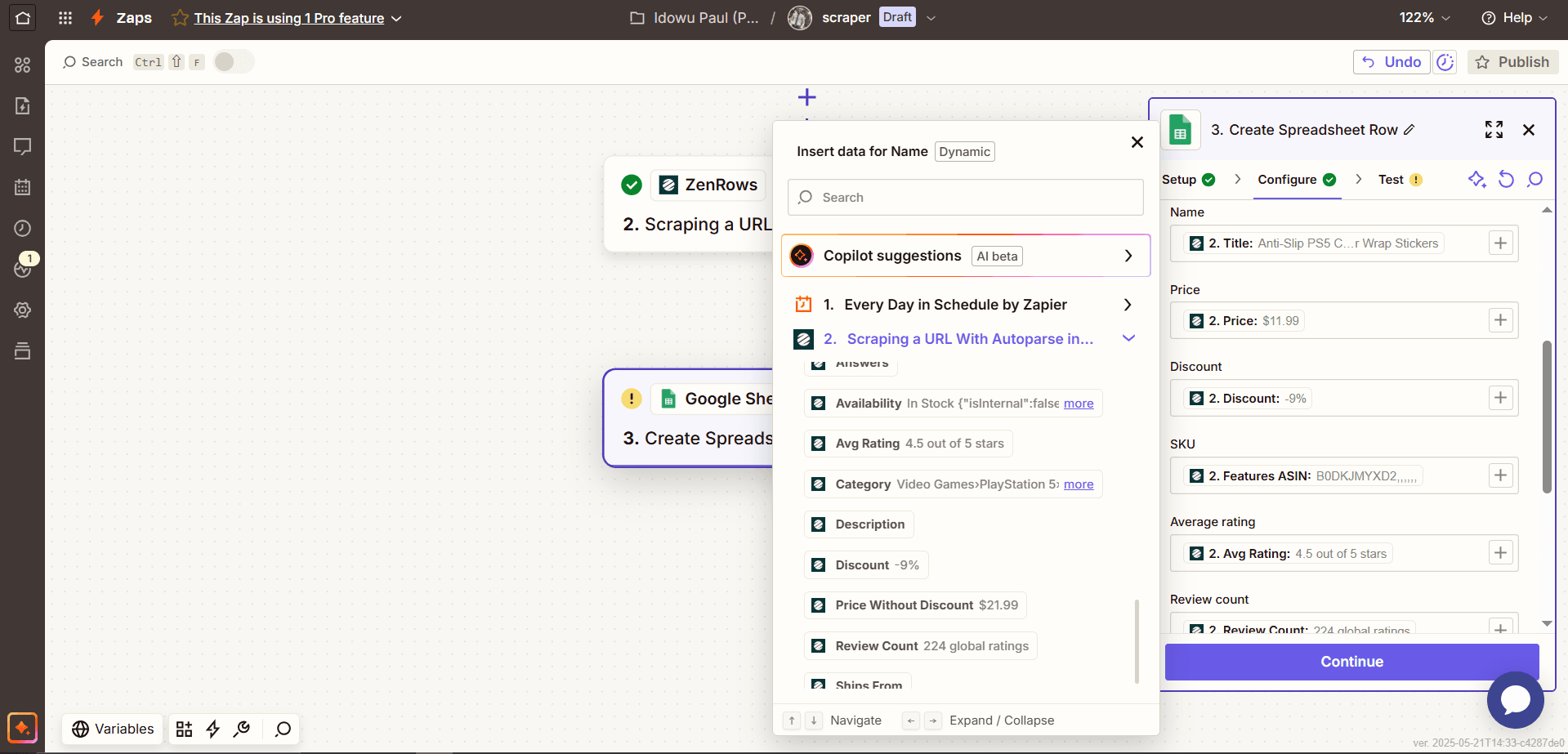

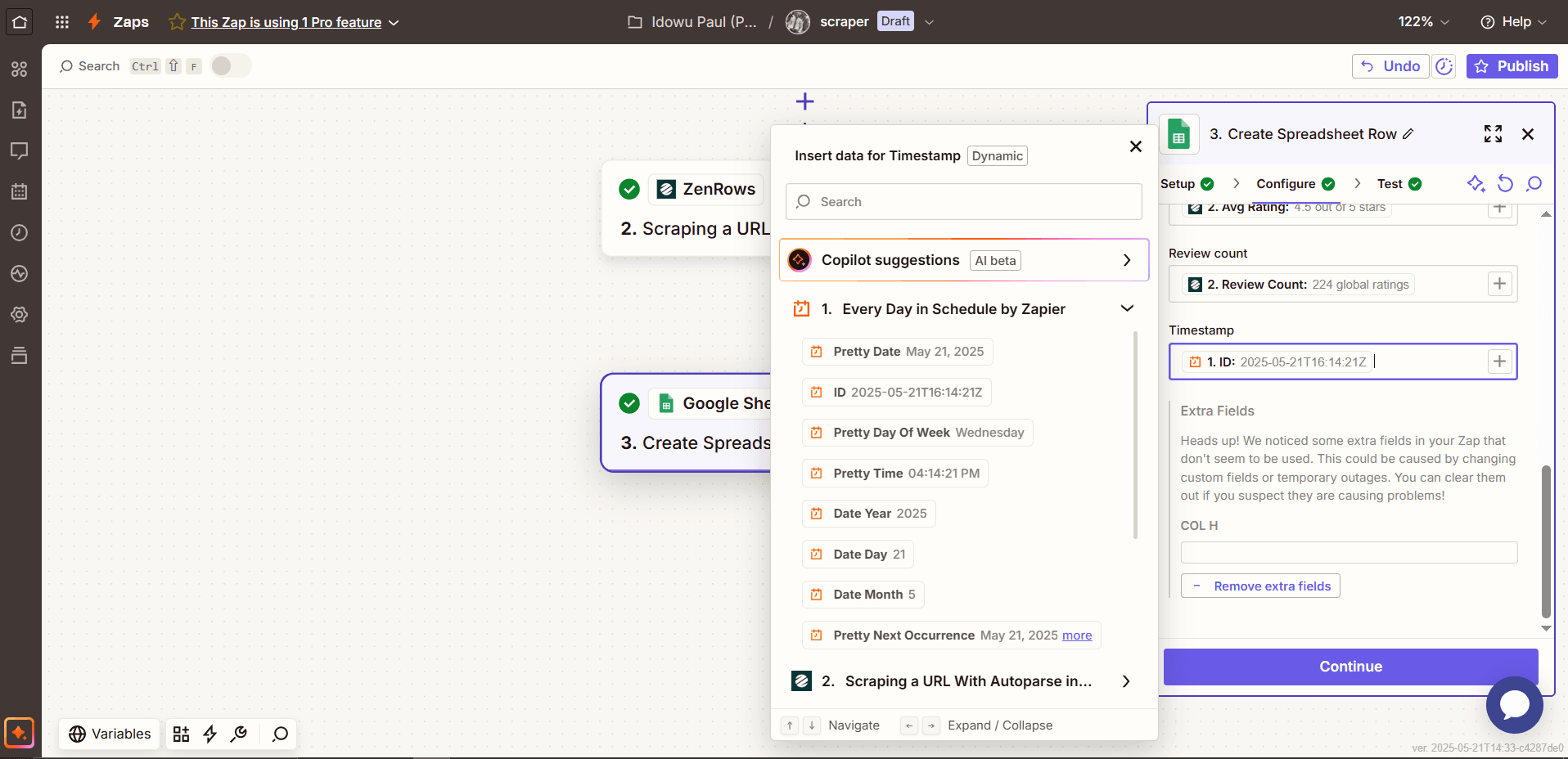

- Map the columns with the scraped data by clicking the + icon next to each column name. Select the corresponding data for each column from the Scraping a URL With Autoparse step.

- Map the Timestamp column with the ID data from the schedule trigger and click Continue.

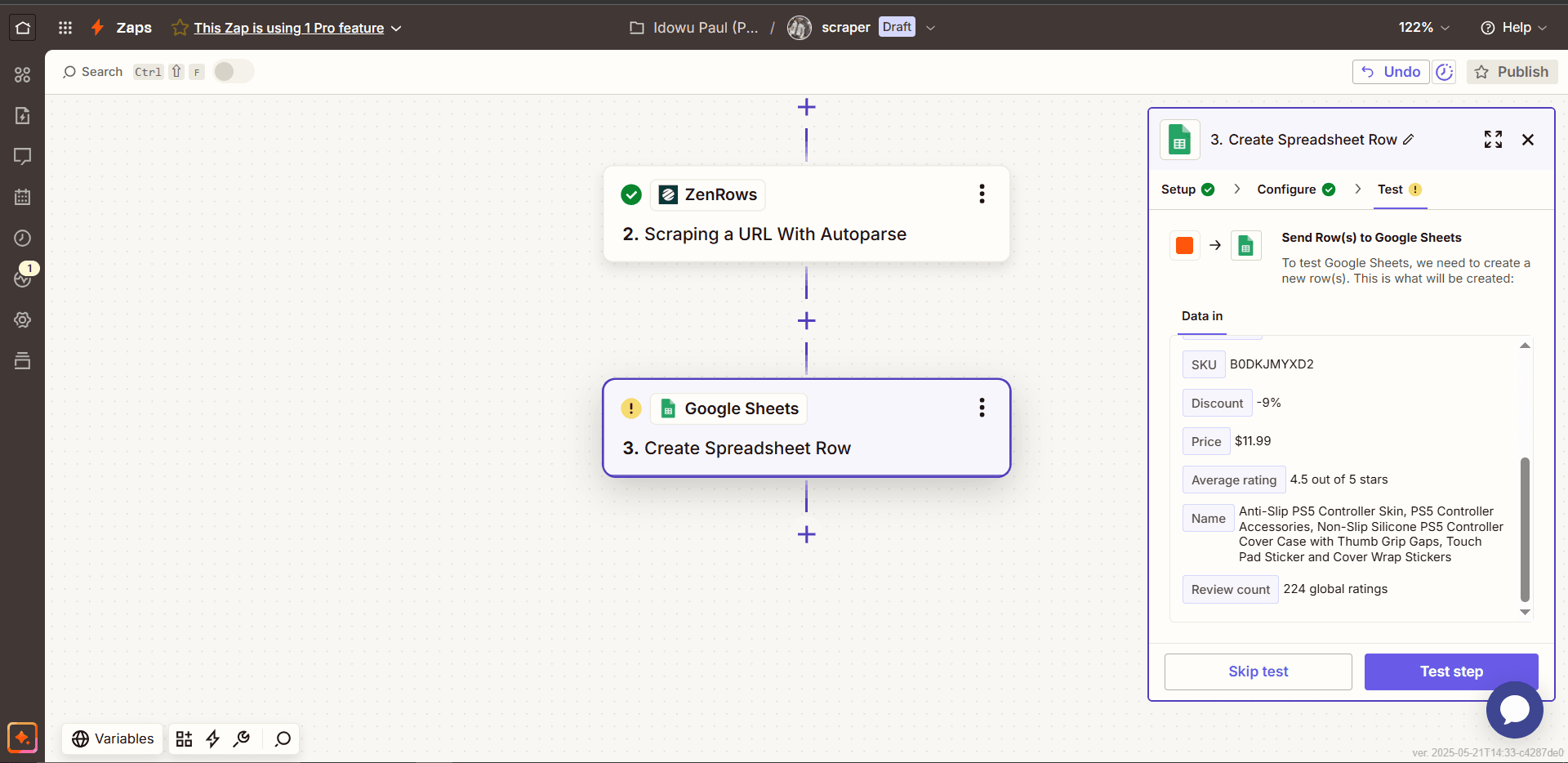

- Click Test step to confirm the workflow.

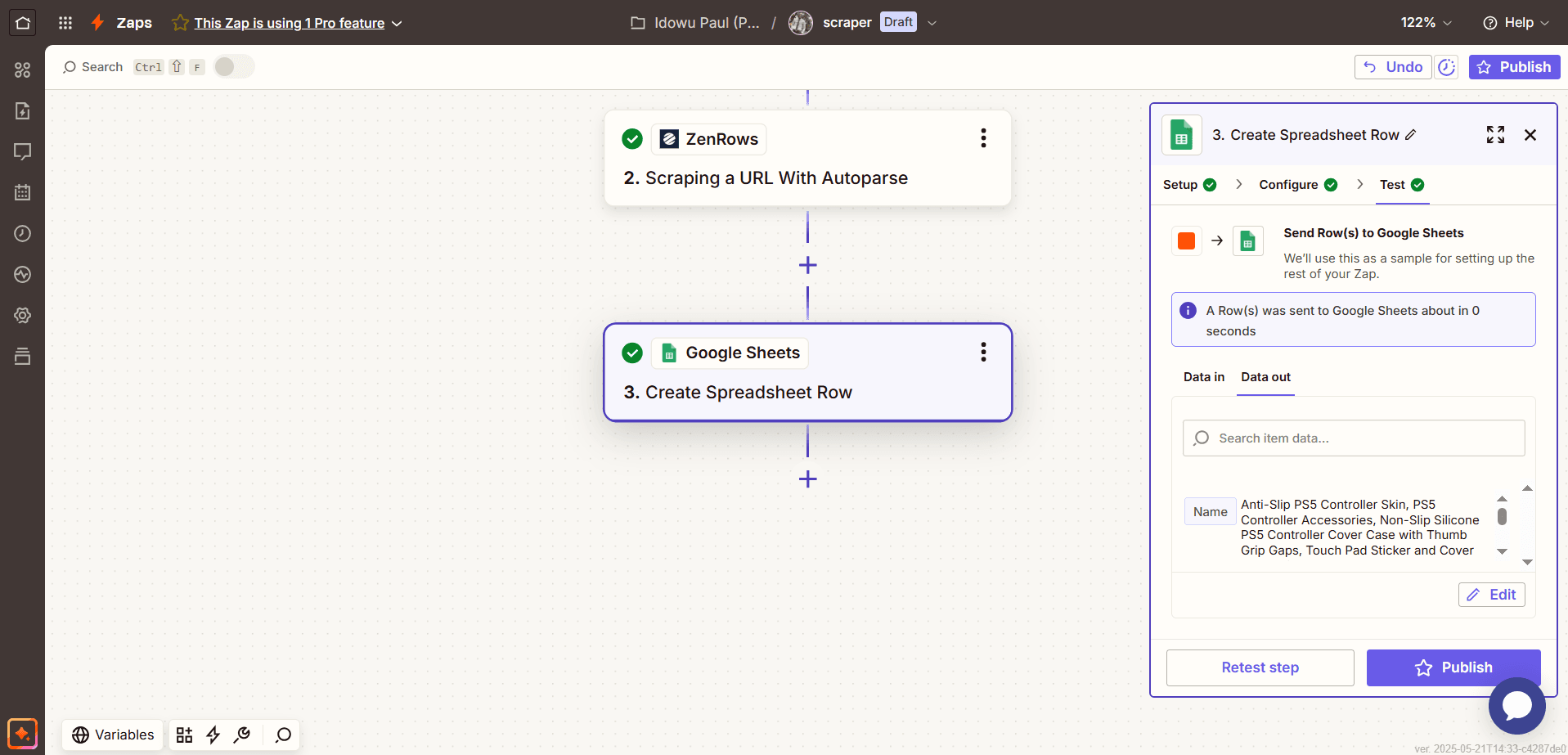

- Click Publish to activate your scraping schedule.
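The column mapping in this step can be pictured as a small transformation from the autoparse JSON to one spreadsheet row. The Python sketch below illustrates that idea only. The JSON keys in `FIELD_MAP` (`name`, `price`, `avg_rating`, and so on) are hypothetical, since the actual keys depend on what autoparse returns for the target page, and `to_row` is an illustrative helper, not part of Zapier or ZenRows.

```python
from datetime import datetime, timezone

# Column order used in the spreadsheet from this guide.
COLUMNS = ["Name", "Price", "Discount", "SKU",
           "Average rating", "Review count", "Timestamp"]

# Hypothetical mapping from spreadsheet column to autoparse JSON key.
# The real keys depend on what autoparse returns for the target page.
FIELD_MAP = {
    "Name": "name",
    "Price": "price",
    "Discount": "discount",
    "SKU": "sku",
    "Average rating": "avg_rating",
    "Review count": "review_count",
}


def to_row(parsed, run_time):
    """Build one spreadsheet row (a list in COLUMNS order) from parsed data.

    Missing fields become empty strings so every row has the same width.
    """
    row = [parsed.get(FIELD_MAP[col], "") for col in COLUMNS if col != "Timestamp"]
    # Timestamp is the last column; in the Zap it comes from the schedule trigger.
    row.append(run_time.isoformat())
    return row


# Usage with placeholder values (not real scraped data).
example_row = to_row({"name": "Example product", "sku": "EXAMPLE-SKU"},
                     datetime.now(timezone.utc))
```

Filling missing fields with empty strings mirrors what happens in the sheet when a mapped field is absent from a run: the row is still appended, but the cell stays blank.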

Step 4: Validate the Workflow

The workflow runs automatically on schedule every day and adds a new row of data to the connected spreadsheet. Congratulations! 🎉 You just integrated ZenRows with Zapier and are now automating your scraping workflow.


ZenRows Configuration Options

ZenRows accepts the following configuration options during Zapier integration:

| Configuration | Function |
|---|---|
| URL | The URL of the target website. |
| Premium Proxy | When activated, it routes requests through the ZenRows Residential Proxies instead of the default Datacenter proxies. |
| Proxy Country | |
| JavaScript Rendering | Ensures that dynamic content loads before scraping. |
| Wait for Selector | Waits until the element matching a CSS selector appears before capturing the response. Requires JavaScript Rendering. |
| Wait Milliseconds | Pauses rendering for a specific number of milliseconds. Requires JavaScript Rendering. |
| JavaScript Instructions | |
| Headers | |
| Session ID | Uses a session ID to maintain the same IP for multiple API requests for up to 10 minutes. |
| Original Status | Returns the original status code returned by the target website. |
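As a rough illustration of how these options fit together, the Python sketch below translates a set of form values into API-style parameters and rejects one invalid combination: the FAQ notes that the wait options require JavaScript rendering. The parameter names in `PARAM_NAMES` are assumptions based on the public ZenRows API rather than something this guide specifies, Headers is left out because this guide does not describe how it is passed, and `to_api_params` is a hypothetical helper.

```python
# Assumed mapping from the Zapier form labels to ZenRows API parameter names.
PARAM_NAMES = {
    "URL": "url",
    "Premium Proxy": "premium_proxy",
    "Proxy Country": "proxy_country",
    "JavaScript Rendering": "js_render",
    "Wait for Selector": "wait_for",
    "Wait Milliseconds": "wait",
    "JavaScript Instructions": "js_instructions",
    "Session ID": "session_id",
    "Original Status": "original_status",
}

# Options that only take effect when JavaScript Rendering is on.
NEEDS_JS_RENDER = {"Wait for Selector", "Wait Milliseconds"}


def to_api_params(config):
    """Translate a dict of form labels -> values into API-style parameters."""
    unknown = set(config) - set(PARAM_NAMES)
    if unknown:
        raise ValueError(f"unknown options: {sorted(unknown)}")
    if "URL" not in config:
        raise ValueError("URL is required")
    # Collect wait options that were actually given a value.
    used = NEEDS_JS_RENDER & {label for label, value in config.items() if value}
    if used and not config.get("JavaScript Rendering"):
        raise ValueError(f"{sorted(used)} require JavaScript Rendering")
    params = {}
    for label, value in config.items():
        if isinstance(value, bool):
            # Switches are only sent when turned on.
            if value:
                params[PARAM_NAMES[label]] = "true"
        elif value not in (None, ""):
            params[PARAM_NAMES[label]] = str(value)
    return params
```

Checking the combination before sending a request surfaces a misconfigured wait option as a clear error instead of a silently ignored setting.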

Troubleshooting

Common Issues and Solutions

- Issue: Failed to create a URL in ZenRows (REQS004).

  - Solution 1: Double-check the target URL and ensure it’s not malformed or missing essential query strings.

  - Solution 2: If using the CSS selector integration, ensure you pass the selectors as an array (e.g., [{"title":"#productTitle", "price": ".a-price-whole"}]).

- Issue: Authentication failed (AUTH002).

  - Solution: Double-check your ZenRows API key and ensure you enter a valid one.

- Issue: Empty data.

  - Solution 1: Ensure ZenRows supports autoparsing for the target website. Check the ZenRows Data Collector Marketplace to view the supported websites.

  - Solution 2: If using the CSS selector integration, supply the correct CSS selectors that match the data you want to scrape.
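Since a malformed selector value is behind two of the issues above, it can help to generate and check that value before pasting it into Zapier. The Python sketch below is one way to do that, using the array form shown in the REQS004 solution. `format_selectors` and `check_selectors_text` are hypothetical helpers, and the check only covers the JSON shape, not whether the selectors match anything on the page.

```python
import json


def format_selectors(selectors):
    """Serialize a field -> CSS selector mapping in the array form shown above."""
    if not isinstance(selectors, dict) or not selectors:
        raise ValueError("selectors must be a non-empty dict")
    for field, css in selectors.items():
        if not isinstance(css, str) or not css.strip():
            raise ValueError(f"empty selector for field {field!r}")
    return json.dumps([selectors])


def check_selectors_text(text):
    """Return True if text parses as a JSON array holding one non-empty object."""
    try:
        data = json.loads(text)
    except json.JSONDecodeError:
        return False
    return (isinstance(data, list) and len(data) == 1
            and isinstance(data[0], dict) and bool(data[0]))


# Usage: build the value for the selectors field from the guide's example.
selectors_text = format_selectors({"title": "#productTitle",
                                   "price": ".a-price-whole"})
```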

Conclusion

You’ve successfully integrated ZenRows with Zapier and are now automating your scraping workflow, from scheduling to data storage. You can extend your workflow with more applications, including SQL databases and analytics tools like Tableau.

Frequently Asked Questions (FAQs)

How do I authenticate my requests?


To authenticate requests, use your ZenRows API key. Enter it in place of the ZENROWS API KEY placeholder in the authentication modal that Zapier shows.

How do I use the wait or wait_for parameters?

Use wait to pause the rendering for a specific number of milliseconds. Use wait_for with a CSS selector to wait until that element appears before capturing the response. Both require JS Rendering.

How do I rotate IPs per request?

ZenRows rotates IPs automatically per request.