In Python, Playwright offers two APIs: synchronous (well suited to small-scale scraping where concurrency isn’t a concern) and asynchronous (recommended for projects where concurrency, scalability, and performance matter).

Use ZenRows’ Proxies with Playwright to Avoid Blocks

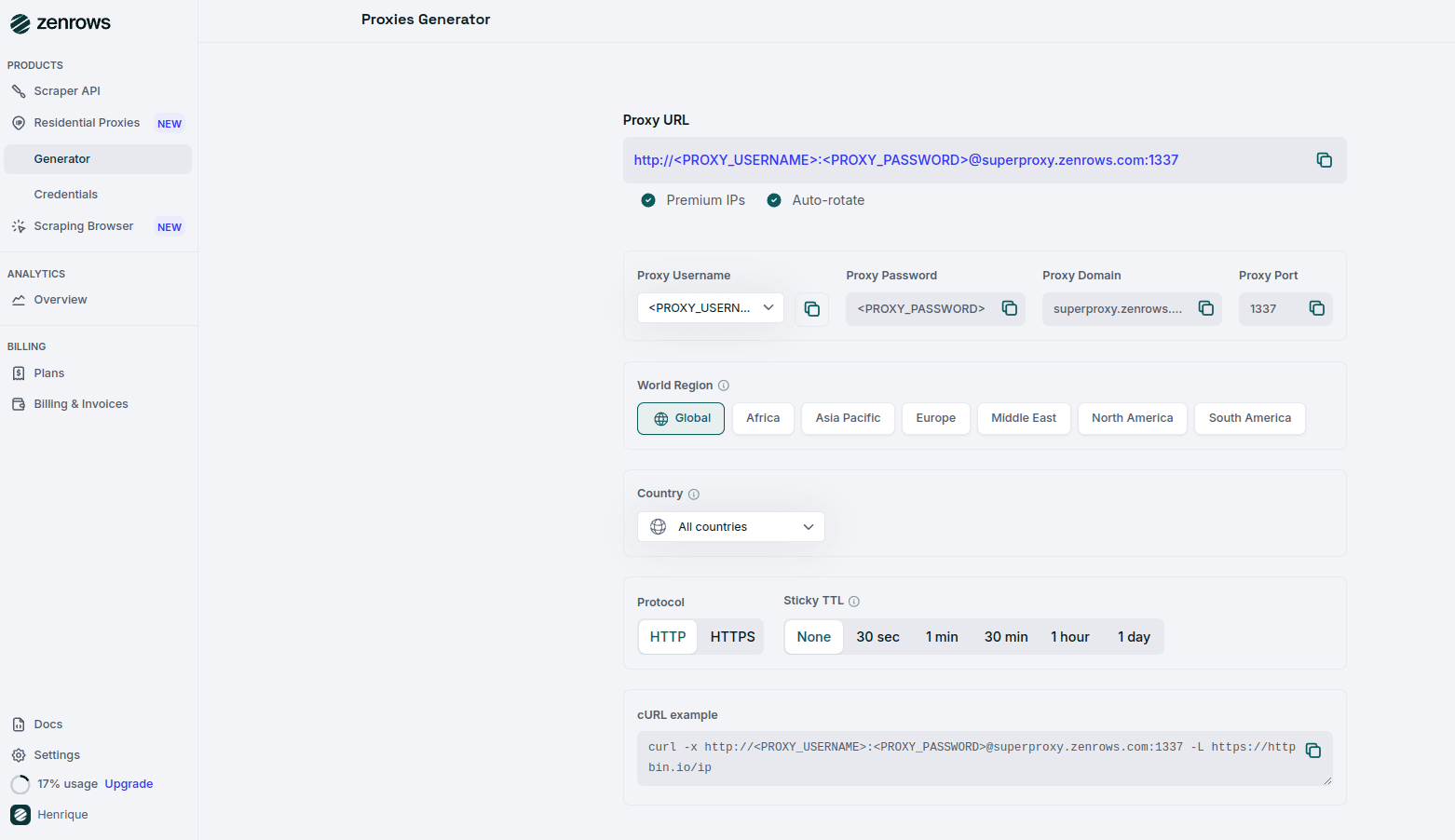

ZenRows offers residential proxies in 190+ countries that rotate the IP address automatically and support geolocation and the HTTP/HTTPS protocols. When you integrate them into Playwright, your scraper appears as a different user on each request, which significantly reduces your chances of getting blocked.

There are three ways to get a proxy with ZenRows:

- Residential Proxies: you get direct access to our proxy and are charged by bandwidth.
- The Universal Scraper API’s Premium Proxy: our residential proxy for the API, charged per request depending on the parameters you choose.
- The Scraping Browser: you integrate it into your code with just one line of code.

After logging in, you’ll be redirected to the Request Builder page. From there, go to the Proxies Generator page and create your proxy.
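Playwright expects proxy settings as a dict with separate `server`, `username`, and `password` keys rather than credentials embedded in the URL. The minimal sketch below assumes your proxy details come as a single URL of the form `http://USERNAME:PASSWORD@HOST:PORT` (the exact format shown on the Proxies Generator page may differ). It splits that URL into the dict Playwright accepts. The helper name `playwright_proxy_from_url` and the example host are hypothetical.

```python
from urllib.parse import unquote, urlsplit


def playwright_proxy_from_url(proxy_url: str) -> dict:
    """Convert scheme://user:pass@host:port into Playwright's proxy dict.

    Assumes the URL format above. Playwright takes credentials in separate
    "username"/"password" keys, so they are removed from "server".
    """
    parts = urlsplit(proxy_url)
    if not parts.scheme or not parts.hostname or parts.port is None:
        raise ValueError(f"expected scheme://user:pass@host:port, got {proxy_url!r}")
    settings = {"server": f"{parts.scheme}://{parts.hostname}:{parts.port}"}
    if parts.username:
        # Credentials may be percent-encoded (e.g. "@" written as "%40").
        settings["username"] = unquote(parts.username)
        settings["password"] = unquote(parts.password or "")
    return settings


if __name__ == "__main__":
    print(playwright_proxy_from_url("http://user123:s3cret@proxy.example.com:1337"))
    # prints {'server': 'http://proxy.example.com:1337', 'username': 'user123', 'password': 's3cret'}
```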

Configure your Residential Proxy in Playwright

Make sure you have Playwright installed, then add the following code to your scraper.py file:

Pricing

ZenRows uses a pay-per-success model for the Universal Scraper API, so you only pay for requests that produce the desired result. Residential Proxies are billed by bandwidth instead. To maximize your scraper’s success rate, consider replacing Playwright with ZenRows entirely. Different pages on the same site may have different levels of protection, but using the parameters recommended above will ensure that you’re covered. ZenRows offers a range of plans, starting at just $69 per month. For more details, see our pricing page.

Frequently Asked Questions (FAQs)

Why do I need a proxy for Playwright?

Websites’ anti-bot systems can often detect Playwright and block its requests, especially when many requests come from the same IP address. Residential proxies from ZenRows rotate your IP address so that you appear as a legitimate user, which helps you get past these restrictions and reduces the chances of being blocked.

How do I know if my proxy is working?

You can test the proxy connection by running the script provided in the tutorial and checking the output from httpbin.io/ip. If the proxy is working, the response will show a different IP address from your local machine’s.

What should I do if my requests are blocked?

Many websites employ advanced anti-bot measures, such as CAPTCHAs and Web Application Firewalls (WAFs), to prevent automated scraping, and proxies alone may not be enough to bypass these protections. Instead of relying solely on proxies, consider using ZenRows’ Universal Scraper API, which provides:

- JavaScript Rendering and Interaction Simulation: Optimized with anti-bot bypass capabilities.

- Comprehensive Anti-Bot Toolkit: ZenRows offers advanced tools to overcome complex anti-scraping solutions.
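A request to the Universal Scraper API could be built roughly like the sketch below. The endpoint `https://api.zenrows.com/v1/` and the `apikey`, `url`, `js_render`, and `premium_proxy` parameter names are assumptions based on ZenRows’ public API documentation, so check them against the current docs before relying on this code. The helper names are hypothetical.

```python
from urllib.parse import urlencode
from urllib.request import urlopen

# Assumed endpoint; confirm it (and the parameter names below) in the ZenRows docs.
API_ENDPOINT = "https://api.zenrows.com/v1/"


def build_api_url(api_key: str, target_url: str,
                  js_render: bool = True, premium_proxy: bool = True) -> str:
    params = {"apikey": api_key, "url": target_url}
    if js_render:
        params["js_render"] = "true"  # assumed flag: render JavaScript first
    if premium_proxy:
        params["premium_proxy"] = "true"  # assumed flag: use residential IPs
    # urlencode percent-encodes the target URL so it survives as a query value.
    return f"{API_ENDPOINT}?{urlencode(params)}"


def scrape_with_api(api_key: str, target_url: str) -> str:
    # A single HTTP GET; no local browser is needed.
    with urlopen(build_api_url(api_key, target_url), timeout=60) as resp:
        return resp.read().decode("utf-8")
```

With this approach, rendering and proxy rotation happen on ZenRows’ side, so the local code reduces to a single HTTP request.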